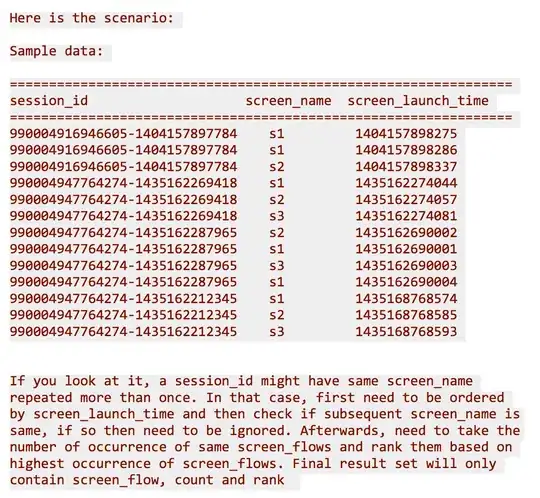

Below is the example for my use case.

-

Ok.. I got the requirement.. Tried for some time to get the actual output.. looks like we need a java UDF to remove adjacent same screen names.. let me think for some more time on this. I would like to give a solution without UDF – Surender Raja Sep 20 '15 at 18:45

-

@explorethis : Is it possible to add the input and expected output in plain text format ? I like to try this use case. – Murali Rao Sep 21 '15 at 06:32

-

@MuraliRao : I couldn't format in the same order. It puts as a plain text. If you can share your email, I will send in a word doc for this :) – explorethis Sep 21 '15 at 12:31

-

@Surender Raja - Can you give me suggestion on below scenario http://stackoverflow.com/questions/32768964/mobile-application-churn-rate-calculation-using-pig-or-hive – explorethis Sep 25 '15 at 14:14

2 Answers

You can reference this question where an OP was asking something similar. If I am understanding your problem correctly, you want to remove duplicates from the path, but only when they occur next to each other. So 1 -> 1 -> 2 -> 1 would become 1 -> 2 -> 1. If this is correct, then you can't just group and distinct (as I'm sure you have noticed) because it will remove all duplicates. An easy solution is to write a UDF to remove those duplicates while preserving the distinct path of the user.

UDF:

package something;

import java.util.ArrayList;

import org.apache.hadoop.hive.ql.exec.UDF;

import org.apache.hadoop.io.Text;

public class RemoveSequentialDuplicatesUDF extends UDF {

public ArrayList<Text> evaluate(ArrayList<Text> arr) {

ArrayList<Text> newList = new ArrayList<Text>();

newList.add(arr.get(0));

for (int i = 1; i < arr.size(); i++) {

String front = arr.get(i).toString();

String back = arr.get(i-1).toString();

if (!back.equals(front)) {

newList.add(arr.get(i));

}

}

return newList;

}

}

To build this jar you will need a hive-core.jar and hadoop-core.jar, you can find these here in the Maven Repository. Make sure you get the version of Hive and Hadoop that you are using in your environment. Also, if you plan to run this in a production environment, I'd suggest adding some exception handling to the UDF. After the jar is built, import it and run this query:

Query:

add jar /path/to/jars/brickhouse-0.7.1.jar;

add jar /path/to/jars/hive_common-SNAPSHOT.jar;

create temporary function collect as "brickhouse.udf.collect.CollectUDAF";

create temporary function remove_dups as "something.RemoveSequentialDuplicatesUDF";

select screen_flow, count

, dense_rank() over (order by count desc) rank

from (

select screen_flow

, count(*) count

from (

select session_id

, concat_ws("->", remove_dups(screen_array)) screen_flow

from (

select session_id

, collect(screen_name) screen_array

from (

select *

from database.table

order by screen_launch_time ) a

group by session_id ) b

) c

group by screen_flow ) d

Output:

s1->s2->s3 2 1

s1->s2 1 2

s1->s2->s3->s1 1 2

Hope this helps.

-

Geat!! I'm going to try this and will let you know :) With what I see, I understood your approach and hopefully will solve my problem. – explorethis Sep 21 '15 at 17:21

-

-

I need your expertise to solve the problem posted in http://stackoverflow.com/questions/32768964/mobile-application-churn-rate-calculation-using-pig-or-hive – explorethis Sep 24 '15 at 19:03

-

any thoughts on my new post in http://stackoverflow.com/questions/32768964/mobile-application-churn-rate-calculation-using-pig-or-hive – explorethis Sep 25 '15 at 14:15

-

-

@Surender Raja - Will you be able to help me with a pig script for the above hive query written by GoBrewer pls? – explorethis Sep 29 '15 at 03:00

-

any thoughts on how we can approach for question in link:- http://stackoverflow.com/questions/32768964/mobile-application-churn-rate-calculation-using-pig-or-hive – explorethis Oct 02 '15 at 03:14

-

I already upvote for your post. You should see your points. Is there something I'm missing here ? – explorethis Oct 02 '15 at 14:07

Input

990004916946605-1404157897784,S1,1404157898275

990004916946605-1404157897784,S1,1404157898286

990004916946605-1404157897784,S2,1404157898337

990004947764274-1435162269418,S1,1435162274044

990004947764274-1435162269418,S2,1435162274057

990004947764274-1435162269418,S3,1435162274081

990004947764274-1435162287965,S2,1435162690002

990004947764274-1435162287965,S1,1435162690001

990004947764274-1435162287965,S3,1435162690003

990004947764274-1435162287965,S1,1435162690004

990004947764274-1435162212345,S1,1435168768574

990004947764274-1435162212345,S2,1435168768585

990004947764274-1435162212345,S3,1435168768593

register /home/cloudera/jar/ScreenFilter.jar;

screen_records = LOAD '/user/cloudera/inputfiles/screen.txt' USING PigStorage(',') AS(session_id:chararray,screen_name:chararray,launch_time:long);

screen_rec_order = ORDER screen_records by launch_time ASC;

session_grped = GROUP screen_rec_order BY session_id;

eached = FOREACH session_grped

{

ordered = ORDER screen_rec_order by launch_time;

GENERATE group as session_id, REPLACE(BagToString(ordered.screen_name),'_','-->') as screen_str;

};

screen_each = FOREACH eached GENERATE session_id, GetOrderedScreen(screen_str) as screen_pattern;

screen_grp = GROUP screen_each by screen_pattern;

screen_final_each = FOREACH screen_grp GENERATE group as screen_pattern, COUNT(screen_each) as pattern_cnt;

ranker = RANK screen_final_each BY pattern_cnt DESC DENSE;

output_data = FOREACH ranker GENERATE screen_pattern, pattern_cnt, $0 as rank_value;

dump output_data;

I am not able to find a way to use Pig Builtin function to remove adjacent screens for a same session_id ,hence I have used JAVA UDF inorder to remove the adjacent screen names.

I created a JAVA UDF called GetOrderedScreen and covrerted that UDF in to jar and named that jar as ScreenFilter.jar and registered that jar in this Pig Script

Below is the Code for that GetOrderedScreen Java UDF

public class GetOrderedScreen extends EvalFunc<String> {

@Override

public String exec(Tuple input) throws IOException {

String incoming_screen_str= (String)input.get(0);

String outgoing_screen_str ="";

String screen_array[] =incoming_screen_str.split("-->");

String full_screen=screen_array[0];

for (int i=0; i<screen_array.length;i++)

{

String prefix_screen= screen_array[i];

String suffix_screen="";

int j=i+1;

if(j< screen_array.length)

{

suffix_screen = screen_array[j];

}

if (!prefix_screen.equalsIgnoreCase(suffix_screen))

{

full_screen = full_screen+ "-->" +suffix_screen;

}

}

outgoing_screen_str =full_screen.substring(0, full_screen.lastIndexOf("-->"));

return outgoing_screen_str;

}

}

Output

(S1-->S2-->S3,2,1)

(S1-->S2,1,2)

(S1-->S2-->S3-->S1,1,2)

Hope this helps you!.. Also Wait for some more time, Some good brains who see this Question will answer effectively(without JAVA UDF)

- 3,553

- 8

- 44

- 80

-

-

One small question..Similar like rank, I need levels too..how to find the levels from above output? for e.g. (S1-->S2-->S3 is level 2) (S1-->S2 is level 1) (S1-->S2-->S3-->S1 is level 3)..Basically counting the number of arrows (-->) .. Can you help with this? – explorethis Sep 29 '15 at 18:57

-

I wrote a python script to count the substring to achieve the results. Appreciate your help! – explorethis Sep 30 '15 at 13:57

-

Im now trying to add in two new columns application_name and date to the above pig script but facing troubles doing it. Can you help with this? – explorethis Sep 30 '15 at 16:14

-

What is your application name and date.. Do you want to keep it same for all output records – Surender Raja Sep 30 '15 at 17:53

-

Application name varies ( app1 , app2 etc) and date field to know what day the particular path is for – explorethis Sep 30 '15 at 17:55

-

-

-

-

I need to iterate the above sample pig script over all date combinations by hardcoding start date as a particular date and end date will be system date. Do you know if this can be achieved thru shell scripting? – explorethis Oct 05 '15 at 20:15