Thanks for sharing a little more about what your data look like.

Let's use the publicly available crime statistics from the Houston Police Department as an example, specifically the data set for January 2015.

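```r
library(ggplot2)

# gdata::read.xls() needs a working Perl installation
crime <- gdata::read.xls('http://www.houstontx.gov/police/cs/xls/jan15.xls')

# There's a single case where the offense type is '1'; that doesn't
# make sense to us, so we'll remove it
crime <- crime[crime$Offense.Type != '1', ]

# Rebuild the factor so the removed '1' level doesn't linger
# (factor() also works if read.xls returned a character column)
crime$Offense.Type <- factor(crime$Offense.Type)
```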
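To see why the cleanup step rebuilds the factor, here is a minimal sketch on a made-up four-element vector (the values are illustrative, not taken from the HPD file). Subsetting rows does not remove a factor level, so `table()` would still report the removed '1' type as an all-zero category until the factor is re-created.

```r
# Hypothetical toy vector standing in for crime$Offense.Type
offense <- factor(c("Theft", "Auto Theft", "1", "Theft"))

# Dropping the bogus element keeps the level "1" around
kept <- offense[offense != "1"]
print(levels(kept))   # "1" is still a level
print(table(kept))    # ...and shows up with a count of 0

# droplevels() (or factor()) discards the unused level
cleaned <- droplevels(kept)
print(levels(cleaned))
```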

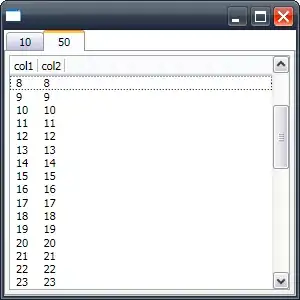

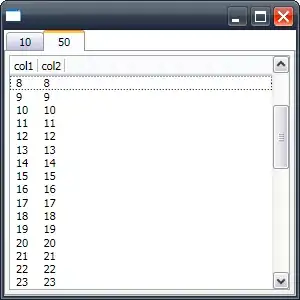

There are 10 columns, but the ones we're interested in look like this:

```r
#   Hour Offense.Type
#      8   Auto Theft
#     13        Theft
#      5   Auto Theft
#     13        Theft
#     18        Theft
#     18        Theft
```
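If the HPD spreadsheet is no longer reachable at that URL, a small stand-in data frame built from the six sample rows above is enough to run the rest of the code. This is only a sketch for experimenting: `crime.toy` is a hypothetical name, and six rows will give much rougher plots than a full month of data.

```r
# Hypothetical stand-in for `crime`, using only the two columns
# the plots need and the six sample rows shown above
crime.toy <- data.frame(
  Hour = c(8L, 13L, 5L, 13L, 18L, 18L),
  Offense.Type = factor(c("Auto Theft", "Theft", "Auto Theft",
                          "Theft", "Theft", "Theft"))
)

str(crime.toy)

# Incidents per hour, ignoring offense type
table(crime.toy$Hour)
```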

As you mentioned, the problem is that each row is a single incident. We need a way to get per-hour frequencies to pass to geom_area().

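The first way is to let ggplot2 handle it, with no need to preformat the data. Keep in mind that `stat = 'density'` draws a smoothed kernel density estimate, not exact hourly counts.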

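```r
# after_stat(count) is the density multiplied by the number of incidents
# in each offense type, so the stacked areas are in incidents rather than
# probability density (ggplot2 >= 3.3.0; older versions use ..count..)
p <- ggplot(crime, aes(x = Hour, fill = Offense.Type))

p + geom_area(aes(y = after_stat(count)), stat = 'density')
```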
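As a quick check of what the y aesthetic holds here, the sketch below builds the same kind of plot on a hypothetical six-row stand-in for `crime` (copied from the sample rows above) and pulls the computed layer with ggplot2's `layer_data()`. Within each offense type, `count` divided by `density` equals the number of incidents in that group. It assumes ggplot2 3.3.0 or later, where `..count..` is written `after_stat(count)`.

```r
library(ggplot2)

# Hypothetical stand-in for `crime` built from the sample rows above
crime.toy <- data.frame(
  Hour = c(8L, 13L, 5L, 13L, 18L, 18L),
  Offense.Type = factor(c("Auto Theft", "Theft", "Auto Theft",
                          "Theft", "Theft", "Theft"))
)

p.toy <- ggplot(crime.toy, aes(x = Hour, fill = Offense.Type)) +
  geom_area(aes(y = after_stat(count)), stat = "density")

# Data after the density stat (and stacking) has been computed
ld <- layer_data(p.toy)

# Skip grid points where the density estimate is exactly zero,
# then average count / density within each group
pos <- ld$density > 0
ratio <- tapply(ld$count[pos] / ld$density[pos], ld$group[pos], mean)
print(ratio)  # one value per offense type: its number of incidents
```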

The other way is to precompute the frequency table yourself, using base R's table() and reshape2's melt():

```r
library(reshape2)

# Cross-tabulate incidents by hour and offense type; naming the
# dimensions makes melt() use them as column names
crime.counts <- table(Hour = crime$Hour, Offense.Type = crime$Offense.Type)

# Reshape to long format: one row per (Hour, Offense.Type) pair,
# including pairs with zero incidents
crime.counts.l <- melt(crime.counts, value.name = "numberOfCrimes")

p <- ggplot(crime.counts.l, aes(x = Hour,
                                y = numberOfCrimes,
                                fill = Offense.Type))

p + geom_area()
```
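reshape2 is now retired, so if you'd rather avoid the dependency, base R's `as.data.frame()` method for tables produces the same long format. This is a swapped-in alternative rather than the approach above, and the sketch runs on a hypothetical six-row stand-in for `crime`. The table's dimnames come back as strings, so Hour has to be converted to a number for geom_area() to treat it as a continuous axis.

```r
library(ggplot2)

# Hypothetical stand-in for `crime` built from the sample rows shown earlier
crime.toy <- data.frame(
  Hour = c(8L, 13L, 5L, 13L, 18L, 18L),
  Offense.Type = factor(c("Auto Theft", "Theft", "Auto Theft",
                          "Theft", "Theft", "Theft"))
)

# Named dimensions become column names in the long data frame;
# table() keeps zero counts for hour/type pairs with no incidents
crime.counts <- table(Hour = crime.toy$Hour,
                      Offense.Type = crime.toy$Offense.Type)

crime.counts.l <- as.data.frame(crime.counts,
                                responseName = "numberOfCrimes",
                                stringsAsFactors = FALSE)

# Dimnames are character, so convert Hour back to a number
crime.counts.l$Hour <- as.integer(crime.counts.l$Hour)

print(crime.counts.l)

p <- ggplot(crime.counts.l, aes(x = Hour, y = numberOfCrimes,
                                fill = Offense.Type))
p + geom_area()
```

Keeping the zero-count rows matters here: without them, the stacked areas would interpolate straight across hours where an offense type had no incidents.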