I am using Stanford CoreNLP to parse sentences. I am trying to create a lexicalized grammar with sentiment and other language annotations.

I am getting the parse, sentiment, and dependency annotations using:

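    import java.util.Properties;
    import edu.stanford.nlp.pipeline.Annotation;
    import edu.stanford.nlp.pipeline.StanfordCoreNLP;

    // Configure the annotators; the list must be a single string literal.
    Properties props = new Properties();
    props.setProperty("annotators", "tokenize, ssplit, pos, lemma, ner, parse, depparse, sentiment");
    StanfordCoreNLP pipeline = new StanfordCoreNLP(props);
    Annotation annotation = pipeline.process(mySentence);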

Since I would also like to use dependencies in my grammar, I've been using GrammaticalStructureFactory and its newGrammaticalStructure() method to get a grammatical structure instance. I noticed that 'TreeGraphNode' has a 'headWordNode', so I traverse both trees in sync, e.g.

    public void BuildSomething(Tree tree, TreeGraphNode gsNode) {
        List<Tree> gsNodes = gsNode.getChildrenAsList();
        List<Tree> constNodes = tree.getChildrenAsList();

        // Walk both child lists by index; this assumes the two trees have the same shape.
        for (int i = 0; i < constNodes.size(); i++) {
            Tree childTree = constNodes.get(i);
            TreeGraphNode childGsNode = (TreeGraphNode) gsNodes.get(i);
            // build for this "sub-tree"
            BuildSomething(childTree, childGsNode);
        }

        String headWord = gsNode.headWordNode().label().value();
        // do something with the head-word...
        // do other stuff...
    }

And I call this function with the tree annotation and the grammatical structure root:

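    // gsf is the GrammaticalStructureFactory mentioned above, created elsewhere.
    for (CoreMap sentence : annotation.get(CoreAnnotations.SentencesAnnotation.class)) {
        Tree tree = sentence.get(SentimentCoreAnnotations.SentimentAnnotatedTree.class);
        GrammaticalStructure gs = gsf.newGrammaticalStructure(tree);
        // root is exposed through the root() accessor rather than a public field
        BuildSomething(tree, gs.root());
    }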

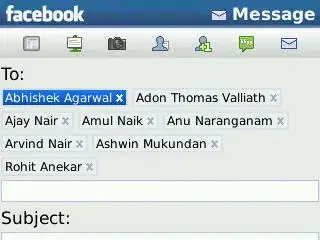

Using something like the above code, I notice that the GrammaticalStructure I am getting does not necessarily matches the structure of the Tree (should it?) and also, I am getting sometimes a "weird" head selection (the determiner selected as head, seems incorrect):

Is my usage of GrammaticalStructure/headWordNode incorrect?

P.S. I've seen some code that uses 'HeadFinder' and 'node.headTerminal(hf, parent)' (here), but I thought the other approach would be faster, so I am not sure whether I can use the above or must use this approach.
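To make the head-finder idea concrete, here is a minimal, self-contained sketch of head percolation on a toy constituency tree. It does not use CoreNLP at all: the `Node` class, the `HEAD_RULES` table, and the method names are hypothetical stand-ins chosen for illustration, and the rule table is far simpler than any real head finder. It only shows the general technique: pick a head child per category by priority, then follow head children down to a word, which is why a noun rather than a determiner would normally surface as the head of an NP.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;

/** Toy head percolation. NOT CoreNLP's API; every name here is hypothetical. */
final class HeadPercolationSketch {

    /** Minimal constituency node: a label plus children. Leaves carry the word as their label. */
    static final class Node {
        final String label;
        final List<Node> children;

        Node(String label, Node... children) {
            this.label = label;
            this.children = Arrays.asList(children);
        }

        boolean isLeaf() {
            return children.isEmpty();
        }
    }

    /** Assumed, heavily simplified rules: parent category -> preferred head categories, in priority order. */
    static final Map<String, List<String>> HEAD_RULES = Map.of(
            "S", List.of("VP"),
            "VP", List.of("VBD", "VBZ", "VB", "VP"),
            "NP", List.of("NN", "NNS", "NNP", "NP"));

    /** Picks the head child by rule priority, falling back to the rightmost child. */
    static Node headChild(Node parent) {
        for (String wanted : HEAD_RULES.getOrDefault(parent.label, List.of())) {
            for (Node child : parent.children) {
                if (child.label.equals(wanted)) {
                    return child;
                }
            }
        }
        return parent.children.get(parent.children.size() - 1);
    }

    /** Follows head children from the given node down to a leaf and returns that leaf's word. */
    static String headWord(Node node) {
        Node current = node;
        while (!current.isLeaf()) {
            current = headChild(current);
        }
        return current.label;
    }

    public static void main(String[] args) {
        Node np = new Node("NP",
                new Node("DT", new Node("the")),
                new Node("NN", new Node("dog")));
        Node vp = new Node("VP", new Node("VBD", new Node("barked")));
        Node s = new Node("S", np, vp);

        System.out.println(headWord(np)); // prints "dog"
        System.out.println(headWord(s));  // prints "barked"
    }
}
```

With these toy rules the determiner is never chosen for the NP because `NN` outranks everything else listed for that category. Whether the real library's head selection follows comparable rules for a given tree is exactly what the question above is asking.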