I'm playing around with a little web app in web.py, and am setting up a url to return a JSON object. What's the best way to convert a SQL table to JSON using python?

-

if you use Postgres, use the to_json capabilities, which outputs the data directly as a python object you can dump as a json string easily. – MrE May 04 '18 at 19:09

15 Answers

Here is a really nice example of a pythonic way to do that:

import json

import psycopg2

def db(database_name='pepe'):

return psycopg2.connect(database=database_name)

def query_db(query, args=(), one=False):

cur = db().cursor()

cur.execute(query, args)

r = [dict((cur.description[i][0], value) \

for i, value in enumerate(row)) for row in cur.fetchall()]

cur.connection.close()

return (r[0] if r else None) if one else r

my_query = query_db("select * from majorroadstiger limit %s", (3,))

json_output = json.dumps(my_query)

You get an array of JSON objects:

>>> json_output

'[{"divroad": "N", "featcat": null, "countyfp": "001",...

Or with the following:

>>> j2 = query_db("select * from majorroadstiger where fullname= %s limit %s",\

("Mission Blvd", 1), one=True)

you get a single JSON object:

>>> j2 = json.dumps(j2)

>>> j2

'{"divroad": "N", "featcat": null, "countyfp": "001",...

- 33,530

- 16

- 61

- 61

-

4Excellent answer! Read so much complicated "convert to object" type answers that this is simply stunning. THANK! – andig Nov 17 '12 at 11:02

-

1i completely agree with the above comment. this is a beautiful solution. – Dennis Bauszus May 29 '15 at 20:56

-

2This doesn't support JOINS on tables with a one to many relationship very well. You will get rows with repeated data. You need an ORM like the accepted answer to get something that will fix this problem. For simple SQL to JSON this will work fine though. – Banjocat Oct 27 '15 at 14:18

-

4I get the error `datetime.datetime(1941, 10, 31, 0, 0) is not JSON serializable` – Whitecat May 01 '17 at 17:49

-

you made me learn a new thing which is enumerate() function. it's amazing – Bentaiba Miled Basma Nov 28 '18 at 16:35

-

All you need is rows = cur.fetchall() r = [dict((cur.description[i][0], value) \ for i, value in enumerate(row)) for row in rows] – Abhinava Jan 24 '22 at 11:38

-

-

import sqlite3

import json

DB = "./the_database.db"

def get_all_users( json_str = False ):

conn = sqlite3.connect( DB )

conn.row_factory = sqlite3.Row # This enables column access by name: row['column_name']

db = conn.cursor()

rows = db.execute('''

SELECT * from Users

''').fetchall()

conn.commit()

conn.close()

if json_str:

return json.dumps( [dict(ix) for ix in rows] ) #CREATE JSON

return rows

Callin the method no json...

print get_all_users()

prints:

[(1, u'orvar', u'password123'), (2, u'kalle', u'password123')]

Callin the method with json...

print get_all_users( json_str = True )

prints:

[{"password": "password123", "id": 1, "name": "orvar"}, {"password": "password123", "id": 2, "name": "kalle"}]

- 7,066

- 5

- 39

- 43

Personally I prefer SQLObject for this sort of thing. I adapted some quick-and-dirty test code I had to get this:

import simplejson

from sqlobject import *

# Replace this with the URI for your actual database

connection = connectionForURI('sqlite:/:memory:')

sqlhub.processConnection = connection

# This defines the columns for your database table. See SQLObject docs for how it

# does its conversions for class attributes <-> database columns (underscores to camel

# case, generally)

class Song(SQLObject):

name = StringCol()

artist = StringCol()

album = StringCol()

# Create fake data for demo - this is not needed for the real thing

def MakeFakeDB():

Song.createTable()

s1 = Song(name="B Song",

artist="Artist1",

album="Album1")

s2 = Song(name="A Song",

artist="Artist2",

album="Album2")

def Main():

# This is an iterable, not a list

all_songs = Song.select().orderBy(Song.q.name)

songs_as_dict = []

for song in all_songs:

song_as_dict = {

'name' : song.name,

'artist' : song.artist,

'album' : song.album}

songs_as_dict.append(song_as_dict)

print simplejson.dumps(songs_as_dict)

if __name__ == "__main__":

MakeFakeDB()

Main()

- 29,332

- 18

- 93

- 152

-

thanks detly. this works well, though I hit an error having the list and dict named the same. just renamed the dict to `songs` and all working fine. – Aaron Moodie Jul 20 '10 at 23:54

-

Glad I could help. Weird that there was an error — as I see, they have (slightly) different names — could you have made a typo? – detly Jul 21 '10 at 00:45

-

Much more complicated than answer 4 and requires knowledge about the data- I prefer answer 4 for it's simplicity. – andig Nov 17 '12 at 11:03

-

@detly Could you please advice on this issue: https://stackoverflow.com/questions/55737528/python-retrieving-postgresql-query-results-as-formatted-json-values – arilwan Apr 18 '19 at 19:16

More information about how you'll be working with your data before transferring it would help a ton. The json module provides dump(s) and load(s) methods that'll help if you're using 2.6 or newer: http://docs.python.org/library/json.html.

-- EDITED --

Without knowing which libraries you're using I can't tell you for sure if you'll find a method like that. Normally, I'll process query results like this (examples with kinterbasdb because it's what we're currently working with):

qry = "Select Id, Name, Artist, Album From MP3s Order By Name, Artist"

# Assumes conn is a database connection.

cursor = conn.cursor()

cursor.execute(qry)

rows = [x for x in cursor]

cols = [x[0] for x in cursor.description]

songs = []

for row in rows:

song = {}

for prop, val in zip(cols, row):

song[prop] = val

songs.append(song)

# Create a string representation of your array of songs.

songsJSON = json.dumps(songs)

There are undoubtedly better experts out there who'll have list comprehensions to eliminate the need for written out loops, but this works and should be something you could adapt to whatever library you're retrieving records with.

- 46,865

- 9

- 101

- 111

-

the table is a list of mp3 files, including track name, artist and url, which is then used to populate a HTML5 Audio player. The player creates a playlist via a JSON object, so I'm just looking to pass the table to JSON. I've looked at the docs, but was just wondering if there was something along the lines of the ruby `to_json` method in python. – Aaron Moodie Jul 20 '10 at 02:44

-

@aaron-moodie - I've updated my answer with more example code. Hope that helps. – g.d.d.c Jul 20 '10 at 03:16

-

Nice approach but i had to make two changes in order to make it work for me. songs should be a dictionary: "songs = {}" and instead of songs.append(song) i used songs[song['Id']] = song. Otherwise json.dumps(songs) will halt with an error that songs ist not serializable. – May 30 '12 at 14:48

I knocked together a short script that dumps all data from all tables, as dicts of column name : value. Unlike other solutions, it doesn't require any info about what the tables or columns are, it just finds everything and dumps it. Hope someone finds it useful!

from contextlib import closing

from datetime import datetime

import json

import MySQLdb

DB_NAME = 'x'

DB_USER = 'y'

DB_PASS = 'z'

def get_tables(cursor):

cursor.execute('SHOW tables')

return [r[0] for r in cursor.fetchall()]

def get_rows_as_dicts(cursor, table):

cursor.execute('select * from {}'.format(table))

columns = [d[0] for d in cursor.description]

return [dict(zip(columns, row)) for row in cursor.fetchall()]

def dump_date(thing):

if isinstance(thing, datetime):

return thing.isoformat()

return str(thing)

with closing(MySQLdb.connect(user=DB_USER, passwd=DB_PASS, db=DB_NAME)) as conn, closing(conn.cursor()) as cursor:

dump = {

table: get_rows_as_dicts(cursor, table)

for table in get_tables(cursor)

}

print(json.dumps(dump, default=dump_date, indent=2))

- 15,359

- 7

- 71

- 70

nobody seem to have offered the option to get the JSON directly from the Postgresql server, using the postgres JSON capability https://www.postgresql.org/docs/9.4/static/functions-json.html

No parsing, looping or any memory consumption on the python side, which you may really want to consider if you're dealing with 100,000's or millions of rows.

from django.db import connection

sql = 'SELECT to_json(result) FROM (SELECT * FROM TABLE table) result)'

with connection.cursor() as cursor:

cursor.execute(sql)

output = cursor.fetchall()

a table like:

id, value

----------

1 3

2 7

will return a Python JSON Object

[{"id": 1, "value": 3},{"id":2, "value": 7}]

Then use json.dumps to dump as a JSON string

- 19,584

- 12

- 87

- 105

-

could this be done using only `psycopg2` and PostgreSQL `JSON` functions? – sc28 Dec 19 '17 at 13:32

-

-

Thanks for clarifying! I confirmed that it indeed works with psycopg2. As an example, I used the `json_agg()` function as described [here](http://johnatten.com/2015/04/22/use-postgres-json-type-and-aggregate-functions-to-map-relational-data-to-json/) – sc28 Dec 19 '17 at 16:52

Most simple way,

use json.dumps but if its datetime will require to parse datetime into json serializer.

here is mine,

import MySQLdb, re, json

from datetime import date, datetime

def json_serial(obj):

"""JSON serializer for objects not serializable by default json code"""

if isinstance(obj, (datetime, date)):

return obj.isoformat()

raise TypeError ("Type %s not serializable" % type(obj))

conn = MySQLdb.connect(instance)

curr = conn.cursor()

curr.execute("SELECT * FROM `assets`")

data = curr.fetchall()

print json.dumps(data, default=json_serial)

it will return json dump

one more simple method without json dumps, here get header and use zip to map with each finally made it as json but this is not change datetime into json serializer...

data_json = []

header = [i[0] for i in curr.description]

data = curr.fetchall()

for i in data:

data_json.append(dict(zip(header, i)))

print data_json

- 18,813

- 10

- 112

- 118

I would supplement The Demz answer with the psycopg2 version:

import psycopg2

import psycopg2.extras

import json

connection = psycopg2.connect(dbname=_cdatabase, host=_chost, port=_cport , user=_cuser, password=_cpassword)

cursor = connection.cursor(cursor_factory=psycopg2.extras.DictCursor) # This line allows dictionary access.

#select some records into "rows"

jsonout= json.dumps([dict(ix) for ix in rows])

- 1

- 1

- 95

- 2

- 7

-

`RealDictCursor` worked for me as-is, without any additional conversion logic: https://stackoverflow.com/a/9230872/352708 – Slava Semushin Sep 20 '22 at 04:30

For sqlite, it is possible to set a callable to connection.row_factory and change the format of query results to python dictionary object. See the documentation. Here is an example:

import sqlite3, json

def dict_factory(cursor, row):

d = {}

for idx, col in enumerate(cursor.description):

# col[0] is the column name

d[col[0]] = row[idx]

return d

def get_data_to_json():

conn = sqlite3.connect("database.db")

conn.row_factory = dict_factory

c = conn.cursor()

c.execute("SELECT * FROM table")

rst = c.fetchall() # rst is a list of dict

return jsonify(rst)

- 148

- 9

I'm Sorry to inform that the above code will fail to export date time fields in json. It will appear like this:

....

, 'TimeCreated': datetime.datetime(2019, 6, 17, 9, 2, 17), 'Created': 1,

....

Which make the output an invalid json.

-

This does not provide an answer to the question. Once you have sufficient [reputation](https://stackoverflow.com/help/whats-reputation) you will be able to [comment on any post](https://stackoverflow.com/help/privileges/comment); instead, [provide answers that don't require clarification from the asker](https://meta.stackexchange.com/questions/214173/why-do-i-need-50-reputation-to-comment-what-can-i-do-instead). - [From Review](/review/late-answers/31672701) – Shmiel May 06 '22 at 13:32

If you are using an MSSQL Server 2008 and above, you can perform your SELECT query to return json by using the FOR JSON AUTO clause E.G

SELECT name, surname FROM users FOR JSON AUTO

Will return Json as

[{"name": "Jane","surname": "Doe" }, {"name": "Foo","surname": "Samantha" }, ..., {"name": "John", "surname": "boo" }]

- 712

- 2

- 13

- 21

-

1This only seems to work for 2016 and above. https://stackoverflow.com/questions/35104842/incorrect-syntax-near-json-sql-server-2016 – Roger Perkins Apr 06 '21 at 14:42

After 10 years :) . Without list comprehension

Return a single row of values from a select query like below.

"select name,userid, address from table1 where userid = 1"

json output

{ name : "name1", userid : 1, address : "adress1, street1" }

Code

cur.execute(f"select name,userid, address from table1 where userid = 1 ")

row = cur.fetchone()

desc = list(zip(*cur.description))[0] #To get column names

rowdict = dict(zip(desc,row))

jsondict = jsonify(rowdict) #Flask jsonify

cur.description is a tuple of tuples as below. unzip and zip to combine column name with values

(('name', None, None, None, None, None, None), ('userid', None, None, None, None, None, None), ('address', None, None, None, None, None, None))

- 1,329

- 4

- 28

- 48

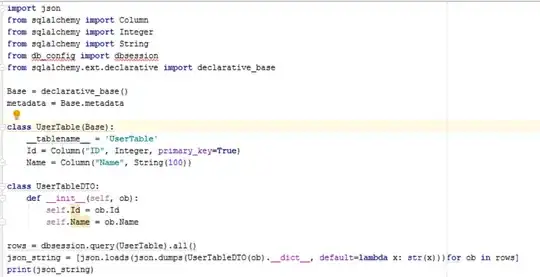

from sqlalchemy import Column

from sqlalchemy import Integer

from sqlalchemy import String

Base = declarative_base()

metadata = Base.metadata

class UserTable(Base):

__tablename__ = 'UserTable'

Id = Column("ID", Integer, primary_key=True)

Name = Column("Name", String(100))

class UserTableDTO:

def __init__(self, ob):

self.Id = ob.Id

self.Name = ob.Name

rows = dbsession.query(Table).all()

json_string = [json.loads(json.dumps(UserTableDTO(ob).__dict__, default=lambda x: str(x)))for ob in rows]

print(json_string)

One simple example for return SQL table as formatted JSON and fix error as he had @Whitecat

I get the error datetime.datetime(1941, 10, 31, 0, 0) is not JSON serializable

In that example you should use JSONEncoder.

import json

import pymssql

# subclass JSONEncoder

class DateTimeEncoder(JSONEncoder):

#Override the default method

def default(self, obj):

if isinstance(obj, (datetime.date, datetime.datetime)):

return obj.isoformat()

def mssql_connection():

try:

return pymssql.connect(server="IP.COM", user="USERNAME", password="PASSWORD", database="DATABASE")

except Exception:

print("\nERROR: Unable to connect to the server.")

exit(-1)

def query_db(query):

cur = mssql_connection().cursor()

cur.execute(query)

r = [dict((cur.description[i][0], value) for i, value in enumerate(row)) for row in cur.fetchall()]

cur.connection.close()

return r

def write_json(query_path):

# read sql from file

with open("../sql/my_sql.txt", 'r') as f:

sql = f.read().replace('\n', ' ')

# creating and writing to a json file and Encode DateTime Object into JSON using custom JSONEncoder

with open("../output/my_json.json", 'w', encoding='utf-8') as f:

json.dump(query_db(sql), f, ensure_ascii=False, indent=4, cls=DateTimeEncoder)

if __name__ == "__main__":

write_json()

# You get formatted my_json.json, for example:

[

{

"divroad":"N",

"featcat":null,

"countyfp":"001",

"date":"2020-08-28"

}

]

- 6,823

- 1

- 50

- 42

This simple code worked for me in Flask api:

@app.route('/output/json/')

def outputJson():

conn = sqlite3.connect('database.db')

conn.row_factory = sqlite3.Row

temp = conn.execute('SELECT * FROM users').fetchall()

conn.close()

result = [{k: item[k] for k in item.keys()} for item in temp]

return jsonify(result)

- 52

- 2

- 11