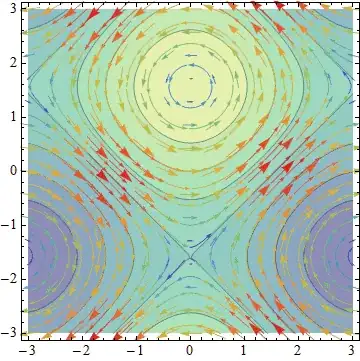

I'm trying to display a large number of images in a d3 visualization laid out with t-SNE. The x and y coordinates are pre-calculated, and the images' positions within the SVG area are adjusted using translate/zoom.
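A minimal sketch of the coordinate mapping involved, assuming the pre-calculated t-SNE coordinates are stored as data-space `x`/`y` values and the zoom state is a plain `{ k, x, y }` object (the same fields as a d3 v4+ `ZoomTransform`; in d3 v3 the equivalent values come from `zoom.scale()` and `zoom.translate()`). The helper names `toScreen` and `toData` are hypothetical. Keeping every layout computation in data space and applying the zoom only when drawing avoids mixing the two coordinate systems.

```javascript
// Hypothetical helpers: map between data space (pre-calculated t-SNE
// coordinates) and screen space under a zoom state { k: scale, x: tx, y: ty }.
function toScreen(point, transform) {
  // Scale first, then translate.
  return {
    x: point.x * transform.k + transform.x,
    y: point.y * transform.k + transform.y,
  };
}

function toData(point, transform) {
  // Inverse of toScreen: undo the translation, then the scale.
  return {
    x: (point.x - transform.x) / transform.k,
    y: (point.y - transform.y) / transform.k,
  };
}

// Example: a point at (2, 3) in data space, zoomed 2x and panned by (10, 20).
const t = { k: 2, x: 10, y: 20 };
const s = toScreen({ x: 2, y: 3 }, t); // { x: 14, y: 26 }
const d = toData(s, t);                // { x: 2, y: 3 }
```

If collision results are written back in screen space and the zoom transform is then applied on top of them, positions end up scaled and translated twice; that is one possible (unverified) way points can drift far apart after a pan or zoom.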

At the moment they all display at the pre-calculated coordinates and remain in place while zooming/panning.

I'm looking to use collision detection (like this example) to adjust the images' locations slightly so that they don't overlap, while maintaining the rough global structure as much as possible.
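One way to express "remove overlaps but keep the global structure" is an iterative relaxation: push overlapping images apart, then pull each one a fraction of the way back toward its original t-SNE position. The sketch below is a plain JavaScript illustration of that idea, not d3's own collision code. It assumes every image is approximated by a circle of the same `radius`; the function name `relax` and the fields `x0`/`y0` (original coordinates) are hypothetical. The pair check is a naive O(n²) loop, which is fine for a few hundred images.

```javascript
// Hypothetical relaxation: each node has x0/y0 (pre-calculated t-SNE
// position) and x/y (adjusted position, initialised from x0/y0 if missing).
// Every image is approximated by a circle of the given radius.
function relax(nodes, radius, iterations = 50, anchorStrength = 0.1) {
  const minDist = 2 * radius; // centres closer than this overlap
  for (const n of nodes) {
    if (n.x === undefined) { n.x = n.x0; n.y = n.y0; }
  }
  for (let it = 0; it < iterations; it++) {
    // 1. Push every overlapping pair apart along the line joining them.
    for (let i = 0; i < nodes.length; i++) {
      for (let j = i + 1; j < nodes.length; j++) {
        const a = nodes[i], b = nodes[j];
        const dx = b.x - a.x, dy = b.y - a.y;
        const dist = Math.hypot(dx, dy);
        if (dist >= minDist) continue;
        // Coincident points have no direction, so separate them along x.
        const ux = dist > 0 ? dx / dist : 1;
        const uy = dist > 0 ? dy / dist : 0;
        const shift = (minDist - dist) / 2; // each node moves half the overlap
        a.x -= ux * shift; a.y -= uy * shift;
        b.x += ux * shift; b.y += uy * shift;
      }
    }
    // 2. Pull each node part of the way back to its original position,
    //    which is what preserves the rough global t-SNE structure.
    for (const n of nodes) {
      n.x += (n.x0 - n.x) * anchorStrength;
      n.y += (n.y0 - n.y) * anchorStrength;
    }
  }
  return nodes;
}

// Usage: two nearly coincident images and one isolated image.
const laidOut = relax(
  [{ x0: 0, y0: 0 }, { x0: 1, y0: 0 }, { x0: 100, y0: 100 }],
  5
);
```

Because the anchor pull runs after the collision step, a small residual overlap remains (the first two images settle about 9.1 units apart rather than 10). Lowering `anchorStrength`, or finishing with a collision-only pass, trades structure preservation for cleaner separation.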

Here's my attempt so far https://gist.github.com/GerHarte/329af8ee5ffd8a1f87c5

With this, it loads as in the image above, but as soon as I pan or zoom, all the points spread out hugely to a completely different location on the canvas and look like this: they don't seem to overlap, but they're extremely far apart.

Is there something wrong in my code or is there a better way to approach this?

Update: I followed Lars's answer here, with one small addition: since the points are translated when zooming or panning, I set the raw data points to the positions where Lars's code settles. The results look great (see below), but with a larger number of points (5000+) it seems to crash before converging on a final result.
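If the overlap check compares every pair of images, its cost grows quadratically: 5,000 images means roughly 12.5 million pair checks per iteration. That is one plausible reason the page stalls, though it is an assumption, since the code in the gist isn't reproduced here. The classic d3 collision-detection example uses a quadtree to avoid this; a uniform grid is a simpler alternative, sketched below. It assumes nodes with `x`/`y` fields and a shared `radius`; `collideGrid` is a hypothetical name. With the cell size equal to the minimum centre distance, each image only needs to be compared with images in its own cell and the eight neighbouring cells.

```javascript
// Hypothetical single collision pass using a uniform grid (spatial hash).
// Returns the number of overlapping pairs pushed apart in this pass.
function collideGrid(nodes, radius) {
  const minDist = 2 * radius;
  const cellSize = minDist; // overlapping centres are at most one cell apart
  const key = (cx, cy) => cx + "," + cy;

  // Bucket every node by the cell its centre falls in at the start of the pass.
  const cells = nodes.map(n => [Math.floor(n.x / cellSize), Math.floor(n.y / cellSize)]);
  const grid = new Map(); // "cx,cy" -> array of node indices
  cells.forEach(([cx, cy], i) => {
    const k = key(cx, cy);
    if (!grid.has(k)) grid.set(k, []);
    grid.get(k).push(i);
  });

  let resolved = 0;
  nodes.forEach((a, i) => {
    const [cx, cy] = cells[i];
    for (let ox = -1; ox <= 1; ox++) {
      for (let oy = -1; oy <= 1; oy++) {
        const bucket = grid.get(key(cx + ox, cy + oy));
        if (!bucket) continue;
        for (const j of bucket) {
          if (j <= i) continue; // visit each pair once
          const b = nodes[j];
          const dx = b.x - a.x, dy = b.y - a.y;
          const dist = Math.hypot(dx, dy);
          if (dist >= minDist) continue;
          const ux = dist > 0 ? dx / dist : 1; // coincident: separate along x
          const uy = dist > 0 ? dy / dist : 0;
          const shift = (minDist - dist) / 2;
          a.x -= ux * shift; a.y -= uy * shift;
          b.x += ux * shift; b.y += uy * shift;
          resolved++;
        }
      }
    }
  });
  // Overlaps present when the grid was built are always checked; nodes that
  // moved during this pass are re-bucketed on the next call.
  return resolved;
}

// Usage: two overlapping images that fall in adjacent grid cells.
const pair = [{ x: 9, y: 0 }, { x: 11, y: 0 }];
const resolved = collideGrid(pair, 5); // 1 (positions become x = 5 and x = 15)
```

In a full layout one would call this repeatedly, stopping when it returns 0 or after a fixed cap, and interleave a pull back toward the original coordinates if the global structure should be kept. Running those passes once up front (or in a Web Worker) and only then handing the settled positions to the zoomable view keeps the browser responsive during pan and zoom.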

Are there any suggestions for improving the efficiency of this approach? I'm going to try the multi-foci force-directed approach next.