I was playing with the TPL, trying to find out how big a mess I could make by reading from and writing to the same Dictionary in parallel.

So I had this code:

    private static void HowCouldARegularDicionaryDeadLock()
    {
        for (var i = 0; i < 20000; i++)
        {
            TryToReproduceProblem();
        }
    }

    private static void TryToReproduceProblem()
    {
        try
        {
            var dictionary = new Dictionary<int, int>();
            Enumerable.Range(0, 1000000)
                .ToList()
                .AsParallel()
                .ForAll(n =>
                {
                    if (!dictionary.ContainsKey(n))
                    {
                        dictionary[n] = n; //write
                    }
                    var readValue = dictionary[n]; //read
                });
        }
        catch (AggregateException e)
        {
            e.Flatten()
                .InnerExceptions.ToList()
                .ForEach(i => Console.WriteLine(i.Message));
        }
    }

It was pretty messed up indeed: a lot of exceptions were thrown, mostly saying that the key does not exist, and a few saying that an index was outside the bounds of the array.

But after running for a while, the app hangs and CPU usage stays at 25%. The machine has 8 cores, so I assume that is 2 threads running at full capacity.

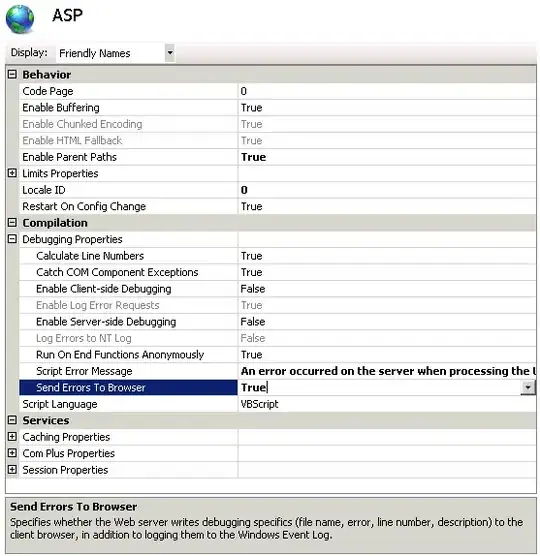

Then I ran dotTrace on it and got this:

It matches my guess: two threads are running at 100%, both inside the FindEntry method of Dictionary.

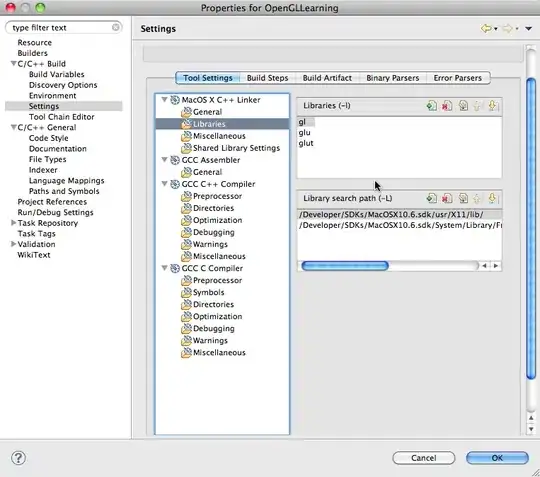

Then I ran the app again with dotTrace, and this time the result was slightly different:

This time, one thread is running FindEntry and the other is running Insert.

My first intuition was that it had deadlocked, but then I realized it could not have: there is only one shared resource, and it is not locked.

So how should this be explained?

PS: I am not looking for a fix; the problem could be solved by using a ConcurrentDictionary or by doing parallel aggregation. I am just looking for a reasonable explanation of the hang.
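For reference only, here is a minimal sketch of the ConcurrentDictionary variant I have in mind. It is not what I am asking about. The class name `ConcurrentDictionaryVariant` and the `Run` helper are made up for illustration, and I am assuming that `GetOrAdd` is an adequate replacement for the separate check, write and read in the code above.

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq;

public static class ConcurrentDictionaryVariant
{
    // Hypothetical helper: same workload as TryToReproduceProblem,
    // but on a dictionary that is designed for concurrent access.
    public static int Run(int count)
    {
        var dictionary = new ConcurrentDictionary<int, int>();

        Enumerable.Range(0, count)
            .AsParallel()
            .ForAll(n =>
            {
                // GetOrAdd replaces the ContainsKey / write / read sequence
                // with a single thread-safe call.
                var readValue = dictionary.GetOrAdd(n, n);
                if (readValue != n)
                {
                    throw new InvalidOperationException("Unexpected value for key " + n);
                }
            });

        return dictionary.Count;
    }

    public static void Main()
    {
        // Every key is inserted exactly once, so the count equals the range size.
        Console.WriteLine(Run(1000000));
    }
}
```

With this version I would expect neither the exceptions nor the hang, since no thread ever touches the dictionary's internals without synchronization.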