I'm creating a vision algorithm that is implemented in a Simulink S-function (which is C++ code). I have accomplished everything I wanted except the alignment of the color and depth images.

My question is: how can I make the two images correspond to each other? In other words, how can I make a 3D image with OpenCV?

I know my question might be a little vague, so I will include my code, which should explain the question:

    #include "opencv2/opencv.hpp"

    using namespace cv;

    int main(int argc, char** argv)
    {
        // read in the color and depth images
        Mat color = imread("whitepaint_col.PNG", CV_LOAD_IMAGE_UNCHANGED);
        Mat depth = imread("whitepaint_dep.PNG", CV_LOAD_IMAGE_UNCHANGED);

        // show both the color and depth images
        namedWindow("color", CV_WINDOW_AUTOSIZE);
        imshow("color", color);
        namedWindow("depth", CV_WINDOW_AUTOSIZE);
        imshow("depth", depth);

        // threshold the color image for the color white
        Mat onlywhite;
        inRange(color, Scalar(200, 200, 200), Scalar(255, 255, 255), onlywhite);

        // display the mask
        namedWindow("onlywhite", CV_WINDOW_AUTOSIZE);
        imshow("onlywhite", onlywhite);

        // apply the mask to the depth image
        Mat nocalibration;
        depth.copyTo(nocalibration, onlywhite);

        // show the result
        namedWindow("nocalibration", CV_WINDOW_AUTOSIZE);
        imshow("nocalibration", nocalibration);

        waitKey(0);
        destroyAllWindows();

        return 0;
    }

As can be seen in the output of my program, when I apply the onlywhite mask to the depth image, the quadcopter body does not consist of a single color. The reason for this is a mismatch between the two images.

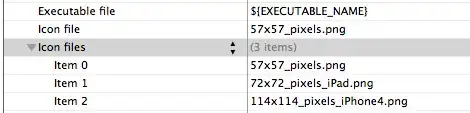

I know that I need the calibration parameters of my camera, and I got these from the last person who worked with this setup. The calibration was done in Matlab and resulted in the following.

I have spent a lot of time reading the OpenCV page about Camera Calibration and 3D Reconstruction (I cannot include the link because of my Stack Exchange level).

But I cannot for the life of me figure out how to accomplish my goal of assigning the correct depth value to each color pixel.

I tried using reprojectImageTo3D(), but I cannot figure out the Q matrix. I also tried a lot of other functions from that page, but I cannot seem to get my inputs correct.
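
To make the goal concrete, here is a minimal sketch of what "adding the correct depth value to each colored pixel" usually amounts to: back-project each depth pixel to a 3D point, move it into the color camera's frame, and project it into the color image. It is written in plain C++ without OpenCV so the arithmetic is visible. Everything named here (`Intrinsics`, `Extrinsics`, `registerDepthToColor`) is hypothetical, and it assumes a pinhole model with lens distortion ignored, depth stored as 16-bit values, and a rotation `R` and translation `t` (in the same unit as the depth values) that map depth-camera coordinates to color-camera coordinates. Whether the Matlab calibration provides exactly these quantities is an assumption.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Pinhole intrinsics (hypothetical struct; values come from the calibration).
struct Intrinsics { double fx, fy, cx, cy; };

// Assumed rigid transform from depth frame to color frame:
// p_color = R * p_depth + t, with R stored row-major.
struct Extrinsics { double R[9]; double t[3]; };

// Returns a depth map resampled onto the color camera's pixel grid.
// A value of 0 means "no measurement".
std::vector<std::uint16_t> registerDepthToColor(
    const std::vector<std::uint16_t>& depth, int dw, int dh,
    const Intrinsics& kd, const Intrinsics& kc, const Extrinsics& e,
    int cw, int ch)
{
    std::vector<std::uint16_t> out(static_cast<std::size_t>(cw) * ch, 0);
    for (int v = 0; v < dh; ++v) {
        for (int u = 0; u < dw; ++u) {
            const double z = depth[static_cast<std::size_t>(v) * dw + u];
            if (z <= 0.0) continue;  // skip pixels without a measurement

            // Back-project the depth pixel to a 3D point in the depth frame.
            const double x = (u - kd.cx) * z / kd.fx;
            const double y = (v - kd.cy) * z / kd.fy;

            // Transform the point into the color camera frame.
            const double X = e.R[0] * x + e.R[1] * y + e.R[2] * z + e.t[0];
            const double Y = e.R[3] * x + e.R[4] * y + e.R[5] * z + e.t[1];
            const double Z = e.R[6] * x + e.R[7] * y + e.R[8] * z + e.t[2];
            if (Z < 1.0 || Z > 65535.0) continue;  // outside the 16-bit range

            // Project the point into the color image.
            const int uc = static_cast<int>(std::lround(kc.fx * X / Z + kc.cx));
            const int vc = static_cast<int>(std::lround(kc.fy * Y / Z + kc.cy));
            if (uc < 0 || uc >= cw || vc < 0 || vc >= ch) continue;

            // If several points land on one pixel, keep the nearest one.
            std::uint16_t& dst = out[static_cast<std::size_t>(vc) * cw + uc];
            const std::uint16_t zc = static_cast<std::uint16_t>(std::lround(Z));
            if (dst == 0 || zc < dst) dst = zc;
        }
    }
    return out;
}
```

With an output like this, the onlywhite mask computed from the color image could be applied to the registered depth map instead of the raw one. Note that reprojectImageTo3D() expects a disparity map and the Q matrix produced by stereoRectify(), so it may not be the right tool for a sensor that already delivers depth values.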