Are there any general rules for how many layers/neurons are needed to solve a given problem?

I understand that 1 hidden layer is sufficient for patterns that are linearly separable (such as the AND logic gate), and that 2 hidden layers are sufficient for patterns that are not (such as the XOR gate). I would like to build a network that can learn to separate 3 types of object given 2 input parameters.

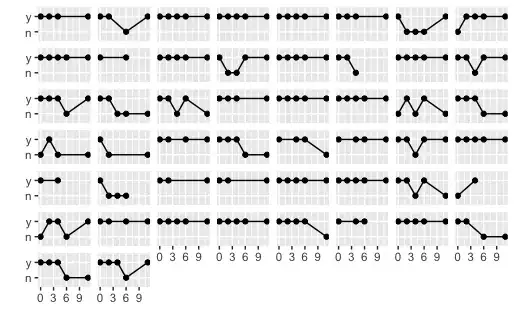

I have attached an image for clarity.
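
To make the setup concrete, a minimal sketch of the kind of network I mean might look like this in plain Python. The hidden-layer size of 4, the tanh activation, the softmax output, and all function names are assumptions chosen only for illustration; the weights are random and untrained, so this shows the shape of the network rather than a working classifier.

```python
import math
import random

N_INPUTS = 2   # the two parameters describing each object
N_HIDDEN = 4   # assumption: arbitrary placeholder; this is the number being asked about
N_CLASSES = 3  # the three object types


def init_layer(n_in, n_out, rng):
    """Return (weights, biases) with small random weights; untrained."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(x, weights, biases):
    """Affine map: one weighted sum plus bias per output neuron."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def softmax(z):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x, hidden, output):
    """2 inputs -> hidden layer (tanh) -> 3 class probabilities."""
    h = [math.tanh(v) for v in dense(x, *hidden)]
    return softmax(dense(h, *output))


rng = random.Random(0)  # fixed seed so the sketch is reproducible
hidden = init_layer(N_INPUTS, N_HIDDEN, rng)
output = init_layer(N_HIDDEN, N_CLASSES, rng)

probs = forward([0.5, -1.2], hidden, output)  # hypothetical input point
predicted_class = probs.index(max(probs))     # index of the most likely class
```

The part I am unsure about is how to choose `N_HIDDEN` and whether more than one hidden layer is needed for a layout like the one in the image.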