I am working on a large Windows desktop application that stores a large amount of data in the form of a project file. We have a custom ORM and serialization layer to efficiently load object data from CSV format. This task is performed by multiple threads running in parallel, each processing multiple files. A large project can contain a million or more objects with many relationships between them.

Recently I was tasked with improving project-open performance, which has deteriorated for very large projects. Profiling showed that most of the time is spent in garbage collection (GC).

My theory is that, due to the large number of very fast allocations, the GC is starved: it is postponed for a very long time, and when it finally kicks in it takes a very long time to do its job. That idea was further supported by two seemingly contradictory facts:

- Optimizing deserialization code to work faster only made things worse

- Inserting `Thread.Sleep` calls at strategic places made load go faster

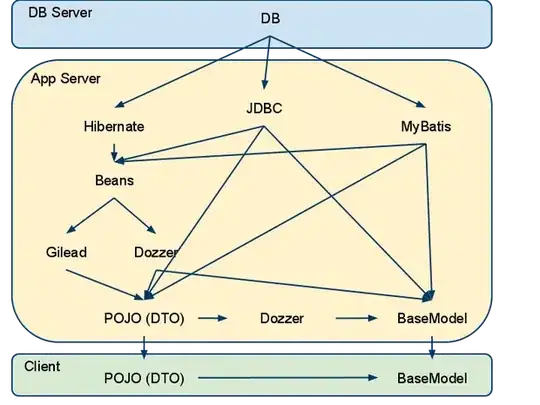

An example of a slow load, with 7 generation 2 collections and a huge percentage of time spent in GC, is below.

An example of a fast load, with sleep periods in the code to give the GC some time, is below. In this case we have 19 generation 2 collections and more than double the number of generation 0 and generation 1 collections.

So, my question is: how can I prevent this GC starvation? Adding `Thread.Sleep` looks silly, and it is very difficult to guess the right number of milliseconds in the right place. My other idea would be to use `GC.Collect`, but that poses the same difficulty of how many calls to add and where to put them. Any other ideas?