I am trying to create a ROC curve for an SVM, and here is the code I have used:

#learning from training

#tuned <- tune.svm(y~., data=train, gamma = 10^(-6:-1), cost = 10^(1:2))

#summary(tuned)

svmmodel<-svm(y~., data=train, type="C-classification",

kernel="radial", gamma = 0.01, cost = 100,cross=5, probability=TRUE)

svmmodel

#predicting the test data

svmmodel.predict<-predict(svmmodel,subset(test,select=-y),decision.values=TRUE)

svmmodel.probs<-attr(svmmodel.predict,"decision.values")

svmmodel.class<-predict(svmmodel,test,type="class")

svmmodel.labels<-test$y

#analyzing result

svmmodel.confusion<-confusion.matrix(svmmodel.labels,svmmodel.class)

svmmodel.accuracy<-prop.correct(svmmodel.confusion)

#roc analysis for test data

svmmodel.prediction<-prediction(svmmodel.probs,svmmodel.labels)

svmmodel.performance<-performance(svmmodel.prediction,"tpr","fpr")

svmmodel.auc<-performance(svmmodel.prediction,"auc")@y.values[[1]]

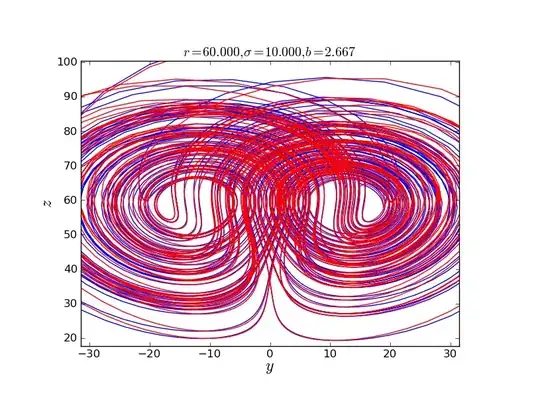

but the problem that the curve ROC is somthing like this :