I've narrowed a performance issue to a particular SQLite query that looks like this:

select *

from test

where (?1 is null or ident = ?1)

and (?2 is null or name = ?2)

and (?3 is null or region = ?3);

This allows any subset of the input parameters (there are more than three) with a single query. Unfortunately, using explain query plan on this yields:

1|0|0|SCAN TABLE test

So SQLite is reading through the entire table no matter what's passed in.

Changing the query to from table indexed by test_idx causes it to fail: Error: no query solution.

Removing the ?1 is null or yields a much more favorable query:

1|0|0|SEARCH TABLE test USING INDEX idx (ident=?)

However, note that only one index can be used. All matches for ident will be scanned looking for matches to other fields. Using a single index that contains all the match fields avoids this:

0|0|0|SEARCH TABLE test USING INDEX test_idx_3 (ident=? AND region=? AND name=?)

It seems reasonable to think that SQLite's query planner would be able to either eliminate or simplify each condition to a simple indexed column check, but apparently that is not the case, as query optimization happens before parameter binding, and no further simplification occurs.

The obvious solution, is to have 2^N separate queries and select the appropriate one at runtime based on which combination of inputs are to be checked. For N=2 or 3 that might be acceptable, but it's absolutely out of the question in this case.

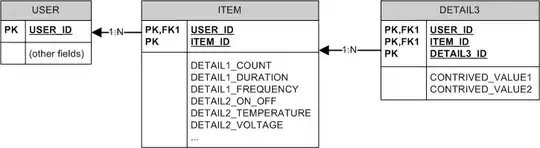

There are, of course, a number of ways to re-organize the database that would make this type of query more reasonable, but assume that's also not practical.

So, how can I search any subset of columns in a table without losing the performance benefit of indexes on those columns?