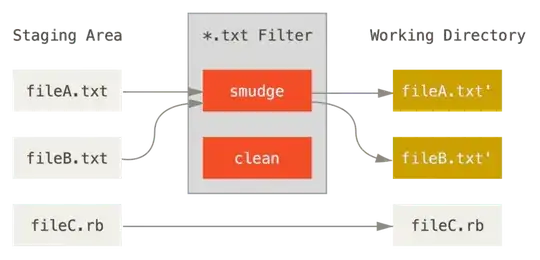

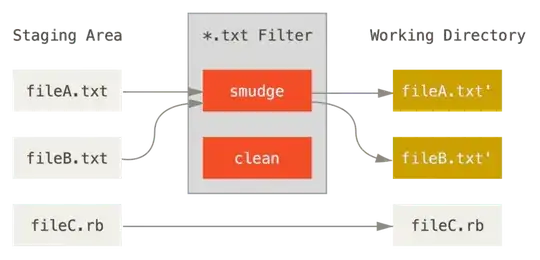

I initially thought about a filter driver (see the Pro Git book), which would:

- on the smudge step, save your files' content

- on the clean step, restore your files' content.

But that is not a good solution since those scripts are about stateless file content transformation (see this SO answer).
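To illustrate what "stateless" means here, this is a minimal sketch of a filter driver in a throwaway repo. The filter name `demo`, the file `app.conf` and the `sed` substitutions are all made up for the example. Each command only turns its stdin into stdout and has nowhere to remember a previous version of the file, which is why a save/restore scheme does not fit well:

```shell
#!/bin/sh
set -e
# Throwaway repository so nothing real is touched.
repo=$(mktemp -d)
cd "$repo"
git init -q
git config user.email "demo@example.com"
git config user.name "Demo"

# Hypothetical filter "demo": clean runs when content goes into the index,
# smudge runs when content is written back to the working tree.
git config filter.demo.clean "sed 's/localhost/PLACEHOLDER/'"
git config filter.demo.smudge "sed 's/PLACEHOLDER/localhost/'"
echo "*.conf filter=demo" > .gitattributes

echo "host=localhost" > app.conf
git add .gitattributes app.conf
git commit -q -m "Add filtered file"

# The committed blob holds the cleaned text; the working copy is untouched.
committed=$(git show HEAD:app.conf)
working=$(cat app.conf)
echo "committed: $committed"
echo "working:   $working"
```

The filter definition lives in the local Git config, so every clone would have to set it up again.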

You can try enforcing a save/restore mechanism in hooks (see the same SO answer), but note that it will be local to your repo: it protects the files in your repo only, since hooks aren't pushed.
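One possible shape for such a hook, as a rough sketch only: the `post-checkout` hook below copies a saved version of a file back after every checkout. The file name `protected.txt`, the backup directory `.git/protected-backup` and the choice of `post-checkout` are assumptions for the example, not something the linked answer prescribes.

```shell
#!/bin/sh
set -e
# Throwaway repository with two branches that disagree on one file.
repo=$(mktemp -d)
cd "$repo"
git init -q
git config user.email "demo@example.com"
git config user.name "Demo"

echo "mine" > protected.txt
git add protected.txt
git commit -q -m "Add protected.txt"
main_branch=$(git symbolic-ref --short HEAD)

git checkout -q -b other
echo "theirs" > protected.txt
git commit -q -a -m "Change protected.txt"
git checkout -q "$main_branch"

# "Save": keep a copy of the file inside .git, where Git never touches it.
mkdir -p .git/protected-backup .git/hooks
cp protected.txt .git/protected-backup/protected.txt

# "Restore": the hook runs at the top of the working tree after each checkout.
cat > .git/hooks/post-checkout <<'EOF'
#!/bin/sh
cp .git/protected-backup/protected.txt protected.txt
EOF
chmod +x .git/hooks/post-checkout

# Switching to the other branch would normally bring in "theirs".
git checkout -q other
restored=$(cat protected.txt)
echo "after checkout: $restored"
```

The restored file then shows up as modified in `git status`, and the hook exists only in this clone.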

You can also use:

    git update-index --assume-unchanged file

See "With git, temporary exclude a changed tracked file from commit in command line"; again, this is a local protection only. It will protect the files against an external push to your repo ("import"), but if you publish them ("export"), they can still be modified on the client side.
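For reference, here is a small sketch of setting the flag, listing the flagged files and clearing the flag again, run in a throwaway repo. The file name `config.local` is made up for the example:

```shell
#!/bin/sh
set -e
# Throwaway repository so nothing real is touched.
repo=$(mktemp -d)
cd "$repo"
git init -q
git config user.email "demo@example.com"
git config user.name "Demo"

echo "original" > config.local
git add config.local
git commit -q -m "Add config.local"

# Tell Git to assume the tracked file is unchanged.
git update-index --assume-unchanged config.local

# A local edit is no longer reported by git status.
echo "local edit" >> config.local
status_flagged=$(git status --porcelain)

# Flagged files are listed with a lowercase tag by `git ls-files -v`.
flagged=$(git ls-files -v | grep '^h' | cut -c3-)
echo "flagged: $flagged"

# Clear the flag; the modification becomes visible again.
git update-index --no-assume-unchanged config.local
status_cleared=$(git status --porcelain)
echo "status after clearing: $status_cleared"
```

The flag is stored in the local index, so it is not shared with other clones.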