Could someone explain how the Quality column returned by the xgb.model.dt.tree function in the xgboost R package is calculated?

The documentation says that Quality "is the gain related to the split in this specific node".

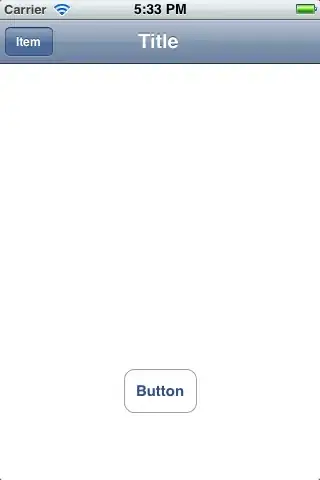

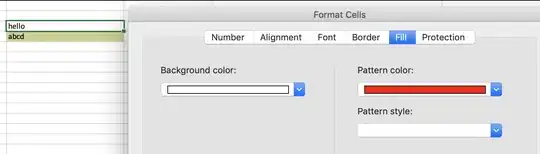

When I run the following code, taken from the xgboost documentation for this function, Quality for node 0 of tree 0 is 4000.53, yet I calculate the gain for that split as 2002.848:

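    library(xgboost)

    data(agaricus.train, package='xgboost')
    train <- agaricus.train
    X = train$data
    y = train$label

    # Example from the xgb.model.dt.tree documentation
    bst <- xgboost(data = train$data, label = train$label, max.depth = 2,
                   eta = 1, nthread = 2, nrounds = 2, objective = "binary:logistic")
    xgb.model.dt.tree(agaricus.train$data@Dimnames[[2]], model = bst)

    # My manual calculation of the gain for the root split on 'odor=none'
    p = rep(0.5,nrow(X))            # starting prediction of 0.5 for every row
    L = which(X[,'odor=none']==0)   # rows on one side of the split
    R = which(X[,'odor=none']==1)   # rows on the other side
    pL = p[L]
    pR = p[R]
    yL = y[L]
    yR = y[R]

    # Sums of first derivatives (p - y) of the log loss
    GL = sum(pL-yL)
    GR = sum(pR-yR)
    G = sum(p-y)

    # Sums of second derivatives p * (1 - p) of the log loss
    HL = sum(pL*(1-pL))
    HR = sum(pR*(1-pR))
    H = sum(p*(1-p))

    gain = 0.5 * (GL^2/HL+GR^2/HR-G^2/H)
    gain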

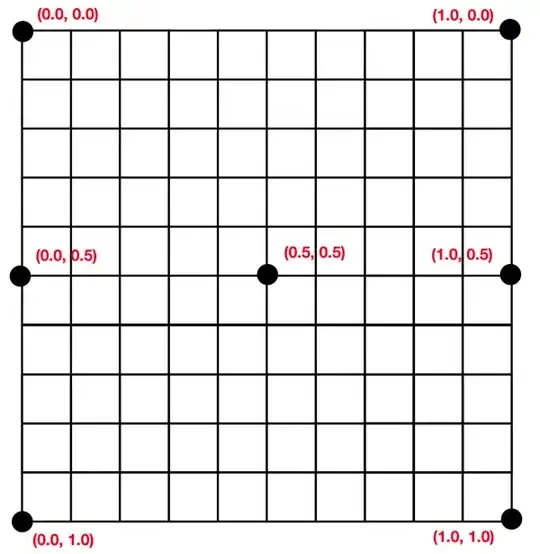

I understand that Gain is given by the following formula:
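
Written out in the notation of the code above (this is my transcription of the split-gain formula as it is usually given in the XGBoost tutorial, so treat the exact form as an assumption):

$$
\text{Gain} = \frac{1}{2}\left[\frac{G_L^2}{H_L + \lambda} + \frac{G_R^2}{H_R + \lambda} - \frac{(G_L + G_R)^2}{H_L + H_R + \lambda}\right] - \gamma
$$

Here $G_L, H_L$ and $G_R, H_R$ are the sums of first and second derivatives of the loss over the rows in the left and right child, and $G = G_L + G_R$, $H = H_L + H_R$ are the corresponding sums for the parent node.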

Since we are using log loss, G is the sum of p - y and H is the sum of p(1 - p). As far as I can tell, gamma and lambda are both zero in this instance.
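
A minimal sketch of that calculation as a reusable R function, assuming the split-gain form with the 1/2 factor that my code uses. The names `logloss_grad`, `logloss_hess` and `split_gain` are hypothetical helpers, not xgboost functions. Lambda and gamma are kept as arguments so that their effect on the result can be checked on toy data:

```r
# Gradient and hessian of the log loss with respect to the raw score,
# given predicted probabilities p and 0/1 labels y.
logloss_grad <- function(p, y) p - y
logloss_hess <- function(p) p * (1 - p)

# Split gain for one candidate split (hypothetical helper, not part of xgboost).
# `left` is a logical vector marking the rows sent to the left child.
split_gain <- function(p, y, left, lambda = 0, gamma = 0) {
  g <- logloss_grad(p, y)
  h <- logloss_hess(p)
  GL <- sum(g[left]);  HL <- sum(h[left])
  GR <- sum(g[!left]); HR <- sum(h[!left])
  # Structure score of a single node: G^2 / (H + lambda)
  score <- function(G, H) G^2 / (H + lambda)
  0.5 * (score(GL, HL) + score(GR, HR) - score(GL + GR, HL + HR)) - gamma
}

# Toy data: 4 rows, prediction 0.5 for every row, split separates the classes.
y <- c(1, 1, 0, 0)
p <- rep(0.5, length(y))
left <- c(TRUE, TRUE, FALSE, FALSE)

gain_no_reg <- split_gain(p, y, left)              # lambda = 0, gamma = 0
gain_l2     <- split_gain(p, y, left, lambda = 1)  # same split with lambda = 1
print(c(gain_no_reg, gain_l2))
```

On the toy data, changing lambda from 0 to 1 lowers the gain from 2 to 2/3, so the value is sensitive to whichever lambda is actually in effect.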

Can anyone identify where I am going wrong?