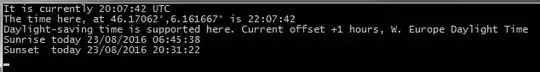

I am trying to set up a hash table (in C++, using the unordered_map container) which is to contain 1875 integer items which are randomly distributed within the interval 0 to 4891. Now my problem is that the distribution within this interval is not uniform but rather looks like this:

where each of the 1875 random integers is plotted as a point with x corresponding to the integer value and y = 1 (so as to visualize the distribution).

Clearly the distribution is such that there are wide gaps where no random integer lie. If I use the identity function as my hash function (i.e. use the random integers themselves as hash values), I get 714 empty buckets, 814 buckets with a single element, 499 buckets with 2 elements and 21 buckets with 3 or more elements.

I'm using the Intel C++ compiler and it uses powers of 2 for the number of buckets in the hash table. In my case right now the hash table has 2^11 = 2048 buckets.

What would be a good hash function for this case? My understanding is that a good hash function in this case would get rid of these clustered integer numbers and shuffle them around in a more uniform distribution, but how could one achieve that?