I have my own. It's pretty accurate and sort of fast. It works off of a theorem I built around quartic convergence. It's really interesting, and you can see the equation and how fast it can make my natural log approximation converge here: https://www.desmos.com/calculator/yb04qt8jx4

Here's my arccos code:

function acos(x)

local a=1.43+0.59*x a=(a+(2+2*x)/a)/2

local b=1.65-1.41*x b=(b+(2-2*x)/b)/2

local c=0.88-0.77*x c=(c+(2-a)/c)/2

return (8*(c+(2-a)/c)-(b+(2-2*x)/b))/6

end

A lot of that is just square root approximation: each of a, b, and c starts from a linear guess and gets refined with a Newton step. It works really well, too, unless you get too close to taking a square root of 0. It has an average error (excluding x=0.99 to 1) of 0.0003. The problem, though, is that at x=0.99 it starts falling apart, and at x=1 the error reaches 0.05. Of course, this could be solved by doing more iterations on the square roots (lol nope), or with a small check like: if x>0.99, use a different set of square root linearizations. But that makes the code all long and ugly.
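To make the square root part concrete, here is a minimal sketch of the refinement step on its own. The helper name `newton_sqrt` is hypothetical and not part of the code above; it just repeats the `(g + s/g)/2` update that each line of the function applies to its linear guess. It also hints at why things degrade near a square root of 0: when `s` is 0, each step only halves the guess instead of converging quickly.

```lua
-- Hypothetical helper (not in the original post): refine a square-root
-- guess with n Newton (Babylonian) steps, g <- (g + s/g)/2.
local function newton_sqrt(s, guess, n)
  local g = guess
  for _ = 1, n do
    g = (g + s / g) / 2
  end
  return g
end

-- Same arithmetic as `local a=1.43+0.59*x a=(a+(2+2*x)/a)/2` at x = 0.5.
local x = 0.5
local a = newton_sqrt(2 + 2 * x, 1.43 + 0.59 * x, 1)
print(a, math.sqrt(2 + 2 * x))

-- With s = 0 (what b hits at x = 1), every step just halves the guess.
print(newton_sqrt(0, 0.24, 2))
```

Away from 0 a single step already lands close to the true root, which is presumably why one or two iterations are enough over most of the range.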

If you don't care about accuracy so much, you could just do one iteration per square root, which should still keep the error somewhere around 0.0162:

function acos(x)

local a=1.43+0.59*x a=(a+(2+2*x)/a)/2

local b=1.65-1.41*x b=(b+(2-2*x)/b)/2

local c=0.88-0.77*x c=(c+(2-a)/c)/2

return 8/3*c-b/3

end

If you're okay with it, you can use pre-existing square root code. It will get rid of the equation going a bit crazy at x=1:

function acos(x)

local a = math.sqrt(2+2*x)

local b = math.sqrt(2-2*x)

local c = math.sqrt(2-a)

return 8/3*c-b/3

end
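One possible way to see why the 8/3 and 1/3 weights work (this is a reading of the formula, not necessarily the theorem mentioned at the top): write x = cos θ with θ = arccos(x) in [0, π]. The half-angle identities then turn the three square roots into sines and cosines of θ/2 and θ/4:

$$
a=\sqrt{2+2\cos\theta}=2\cos\frac{\theta}{2},\qquad
b=\sqrt{2-2\cos\theta}=2\sin\frac{\theta}{2},\qquad
c=\sqrt{2-a}=2\sin\frac{\theta}{4}
$$

Expanding the sines as Taylor series, the weights are exactly the ones that cancel the cubic terms:

$$
\frac{8}{3}c-\frac{1}{3}b
=\frac{16}{3}\sin\frac{\theta}{4}-\frac{2}{3}\sin\frac{\theta}{2}
=\theta-\frac{\theta^{5}}{7680}+O(\theta^{7})
$$

So with exact square roots the result slightly underestimates θ, by roughly 0.0012 at x=0 (θ = π/2) and by less as x approaches 1.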

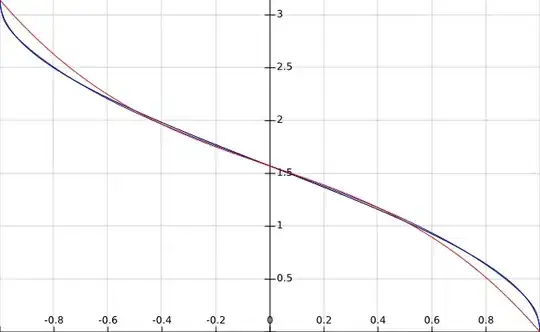

Frankly, though, if you're really pressed for time, remember that you could linearize arccos into 1.57079-1.57079x (the straight line through the exact values at x=-1, 0, and 1) and just do:

function acos(x)

return 1.57079-1.57079*x

end
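If you want to check how far off a given variant is on your own range, a small harness like the one below may help. It is only a sketch: `max_error`, `acos_sqrt`, and `acos_linear` are hypothetical names introduced here, and it assumes the standard library's `math.acos` as the reference. The `math.sqrt` variant is written with `c` in the return line.

```lua
-- Hypothetical harness: largest absolute error of f against math.acos
-- on [lo, hi], sampled at steps+1 evenly spaced points.
local function max_error(f, lo, hi, steps)
  local worst = 0
  for i = 0, steps do
    local x = lo + (hi - lo) * i / steps
    local err = math.abs(f(x) - math.acos(x))
    if err > worst then worst = err end
  end
  return worst
end

-- math.sqrt variant of the approximation.
local function acos_sqrt(x)
  local a = math.sqrt(2 + 2 * x)
  local b = math.sqrt(2 - 2 * x)
  local c = math.sqrt(2 - a)
  return 8 / 3 * c - b / 3
end

-- Straight-line variant.
local function acos_linear(x)
  return 1.57079 - 1.57079 * x
end

print("sqrt variant:  ", max_error(acos_sqrt, 0, 1, 1000))
print("linear variant:", max_error(acos_linear, 0, 1, 1000))
```

Swapping in the hand-rolled versions, or changing the range to include negative x, shows where each one is and isn't good enough for your use.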

Anyway, if you want to see a list of my arccos approximation equations, you can go to https://www.desmos.com/calculator/tcaty2sv8l. I know that my approximations aren't the best for certain things, but if you're doing something where they would be useful, please use them, and try to give me credit.