I am trying to build a lasso regression prediction model. I encoded all my categorical integer features with a one-hot (one-of-K) scheme using OneHotEncoder in scikit-learn. According to the fitted model, only 51 parameters actually influence the predictions. I want to investigate these parameters, but they are one-hot encoded as described above. How can I determine which categorical integer feature corresponds to which column of the one-hot encoded array? Thanks!

- Check the `feature_indices_` attribute. – hellpanderr Nov 28 '15 at 10:55
- Possible duplicate of [How to reverse sklearn.OneHotEncoder transform to recover original data?](http://stackoverflow.com/questions/22548731/how-to-reverse-sklearn-onehotencoder-transform-to-recover-original-data) – Mack Mar 11 '17 at 20:52

3 Answers

Using the `active_features_`, `feature_indices_`, and `n_values_` attributes of `sklearn.preprocessing.OneHotEncoder`, you can build a vector that gives, for each column of the one-hot array, the original category value it encodes. These attributes belong to the encoder's legacy integer-encoding mode; they were deprecated in scikit-learn 0.20 and are not available in current releases:

    import numpy as np
    from sklearn import preprocessing

    enc = preprocessing.OneHotEncoder()
    enc.fit([[0, 0, 3], [1, 1, 0], [0, 2, 1], [1, 0, 2]])

    # Subtract each feature's starting column offset from the active column indices.
    enc.active_features_ - np.repeat(enc.feature_indices_[:-1], enc.n_values_)
    # array([0, 1, 0, 1, 2, 0, 1, 2, 3], dtype=int64)

The original data can also be recovered from the one-hot array:

    x = enc.transform([[0, 1, 1], [1, 2, 3]]).toarray()
    # array([[ 1., 0., 0., 1., 0., 0., 1., 0., 0.],
    #        [ 0., 1., 0., 0., 1., 0., 0., 0., 1.]])

    # Each row has exactly one active column per feature.
    cond = x > 0
    [enc.active_features_[c.ravel()] - enc.feature_indices_[:-1] for c in cond]
    # [array([0, 1, 1], dtype=int64), array([1, 2, 3], dtype=int64)]
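
On recent scikit-learn releases, where the fitted encoder exposes `categories_` and `inverse_transform`, a rough equivalent of the two snippets above might look like the sketch below. The names `column_values`, `column_features`, and `recovered` are illustrative and not part of the original answer, and the sketch assumes the encoder's default settings (no dropped categories).

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

# Same toy data as above: three integer-coded categorical features.
X = np.array([[0, 0, 3], [1, 1, 0], [0, 2, 1], [1, 0, 2]])
enc = OneHotEncoder().fit(X)

# categories_ holds one array of observed category values per input feature,
# in the same order as the one-hot columns (assuming default settings).
column_values = np.concatenate(enc.categories_)

# For each one-hot column, the index of the original feature it came from.
column_features = np.repeat(
    np.arange(len(enc.categories_)),
    [len(cats) for cats in enc.categories_],
)

# inverse_transform maps one-hot rows back to the original category values.
encoded = enc.transform([[0, 1, 1], [1, 2, 3]]).toarray()
recovered = enc.inverse_transform(encoded)
```

Here `column_values` plays the role of the vector computed from `active_features_` above, while `column_features` additionally records which original feature each column belongs to.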

BMW

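This works, assuming the fitted encoder was saved with pickle. Load it and inspect its `categories_` attribute, which lists the category values of each original feature:

    import pickle

    # Load the previously pickled, fitted encoder.
    with open('model.pickle', 'rb') as handle:
        one_hot_categories = pickle.load(handle)

    print(one_hot_categories.categories_)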
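
To connect this back to the question, the printed categories can be lined up with the model's coefficients to see which (feature, category) pairs survived the lasso. The following is only a sketch in plain Python: the `categories` and `coefficients` values are made-up stand-ins for the encoder's `categories_` and a fitted coefficient vector, and it assumes the one-hot columns follow the order of `categories_` with no categories dropped.

```python
# Hypothetical stand-in for the printed categories_: one list of category
# values per original feature, in the order the encoder saw the features.
categories = [[0, 1], [0, 1, 2], [0, 1, 2, 3]]

# Hypothetical fitted coefficients, one per one-hot column.
coefficients = [0.0, 1.5, 0.0, 0.0, -0.7, 0.0, 0.0, 0.0, 2.1]

# Flatten the categories into (feature index, category value) pairs,
# one pair per one-hot column.
columns = [
    (feature, value)
    for feature, values in enumerate(categories)
    for value in values
]

# Keep only the columns whose coefficient was not shrunk to zero.
selected = [
    (feature, value, coef)
    for (feature, value), coef in zip(columns, coefficients)
    if coef != 0.0
]

for feature, value, coef in selected:
    print(f"feature {feature}, category {value}: coefficient {coef}")
```

With a real model, the 51 non-zero parameters mentioned in the question would take the place of the three non-zero entries used here.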

Cathy

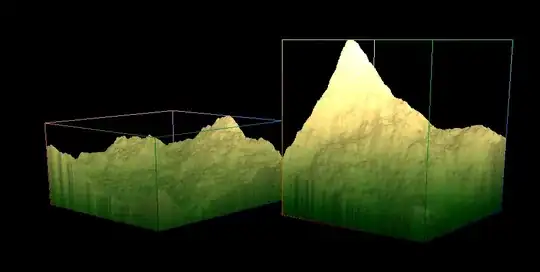

I designed ple to enhance sklearn's Pipeline and FeatureUnion, by which we can also backtrack categorical features after one-hot-encoding or other preprocessing steps. Furthermore, we can 'draw' the transform by GraphX: for example,

You can find ple on my Github page.

Fabio says Reinstate Monica
