I am trying out the PyBrain maze example.

My setup is:

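    from pybrain.rl.environments.mazes import Maze, MDPMazeTask
    from pybrain.rl.learners.valuebased import ActionValueTable
    from pybrain.rl.learners import Q
    from pybrain.rl.agents import LearningAgent
    from pybrain.rl.experiments import Experiment

    envmatrix = [[...]]
    env = Maze(envmatrix, (1, 8))  # goal (absorbing state) at (1, 8)
    task = MDPMazeTask(env)

    table = ActionValueTable(states_nr, actions_nr)
    table.initialize(0.)
    learner = Q()
    agent = LearningAgent(table, learner)
    experiment = Experiment(task, agent)

    for i in range(1000):
        experiment.doInteractions(N)
        agent.learn()
        agent.reset()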

Now, I am not confident in the results that I am getting.

The bottom-right corner (1, 8) is the absorbing state.

I have added a punishment state at (1, 7) in mdp.py:

    def getReward(self):
        """ compute and return the current reward (i.e. corresponding to the last action performed) """
        if self.env.goal == self.env.perseus:
            self.env.reset()
            reward = 1
        elif self.env.perseus == (1,7):
            reward = -1000
        else:
            reward = 0
        return reward

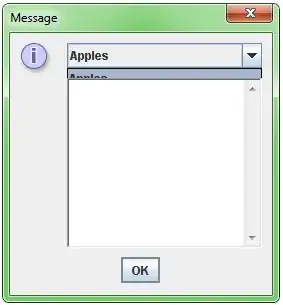

Now, I do not understand how, after 1000 runs of 200 interactions each, the agent thinks that my punishment state is a good state (you can see that its square is white).

I would like to see the value of every state and the policy after the final run. How do I do that? I have found that the expression `table.params.reshape(81,4).max(1).reshape(9,9)` returns some values, but I am not sure whether those correspond to the values of the value function.
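
To make clear what I think that expression computes, here is a minimal NumPy sketch. It rests on two assumptions that I have not verified: that the flat parameter vector stores Q(s, a) at index `s * 4 + a`, and that the state index is `row * 9 + col`. The helper `values_and_policy` and the random `params` array are hypothetical stand-ins for illustration, not part of PyBrain and not my actual table.

```python
import numpy as np

N_ROWS, N_COLS, N_ACTIONS = 9, 9, 4  # 9x9 maze, 4 actions (matches reshape(81, 4))

def values_and_policy(params, n_rows=N_ROWS, n_cols=N_COLS, n_actions=N_ACTIONS):
    """Return (values, policy) grids from a flat Q-table.

    Assumes params[s * n_actions + a] holds Q(s, a) and s = row * n_cols + col.
    """
    q = np.asarray(params, dtype=float).reshape(n_rows * n_cols, n_actions)
    values = q.max(axis=1).reshape(n_rows, n_cols)     # V(s) = max_a Q(s, a)
    policy = q.argmax(axis=1).reshape(n_rows, n_cols)  # index of the greedy action
    return values, policy

# Stand-in data; with PyBrain this would be table.params instead.
rng = np.random.default_rng(0)
params = rng.normal(size=N_ROWS * N_COLS * N_ACTIONS)
values, policy = values_and_policy(params)
print(values.shape, policy.shape)
```

If those assumptions hold, `values[1, 7]` would be the learned value of my punishment state and `policy[1, 7]` the index of the action the agent prefers there. I do not know which direction each action index corresponds to.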