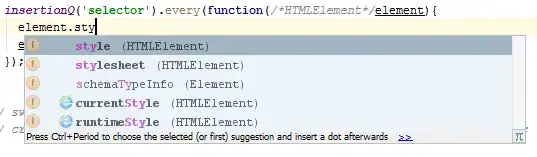

I just wrote this very simple handwritten digit recognition program. Here is an 8 KB archive with the following code and the .PNG image files it needs (0.png to 10.png). It works: the test digits I tried are correctly recognized.

In short, each digit image in the database (50×50 pixels = 2500 coefficients) is summarized into a vector of 10 coefficients by keeping its 10 biggest singular values (see Low-rank approximation with SVD).
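To make the summary concrete: the SVD writes the image as $M = \sum_k \sigma_k u_k v_k^\top$ with $\sigma_1 \ge \sigma_2 \ge \dots \ge 0$, and keeping the first 10 terms gives the best rank-10 approximation in the Frobenius norm (Eckart–Young), with error $\sqrt{\sum_{k>10}\sigma_k^2}$. The sketch below checks this on a random 50×50 array standing in for a digit image (an assumption: no real PNG is loaded); `svd_features` is a hypothetical helper name, not part of the original code.

```python
import numpy as np

# svd_features is a hypothetical helper for illustration, not from the original post
def svd_features(M, k=10):
    """Return the k largest singular values of M, its rank-k reconstruction, and all singular values."""
    U, s, Vh = np.linalg.svd(M, full_matrices=False)  # s is sorted in decreasing order
    M_k = U[:, :k] @ np.diag(s[:k]) @ Vh[:k, :]         # same as zeroing s[k:] and multiplying back
    return s[:k], M_k, s

rng = np.random.default_rng(0)
M = rng.random((50, 50))          # stand-in for a 50x50 greyscale digit (2500 pixel values)
features, M_10, s = svd_features(M)

print(features.shape)             # (10,): 2500 pixels summarized by 10 numbers
# Eckart-Young: Frobenius error of the rank-10 approximation = norm of the discarded singular values
err = np.linalg.norm(M - M_10, 'fro')
print(np.isclose(err, np.sqrt(np.sum(s[10:] ** 2))))  # True
```

Truncating `U` and `Vh` instead of zeroing entries of `s` produces the same matrix while skipping the multiplications by zero.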

Then, to recognize a new digit, we compute its 10-coefficient vector and pick the database digit whose vector is closest (nearest neighbour in Euclidean distance).
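The matching step is a 1-nearest-neighbour search in the 10-dimensional feature space: the prediction is the label of the database vector with the smallest squared Euclidean distance to the query's vector. Here is a vectorized sketch, assuming the feature vectors are stacked into a NumPy array with one row per database digit; `nearest_digit` and the toy 2-D vectors are illustrative only.

```python
import numpy as np

# nearest_digit is a hypothetical helper; the toy data below is made up for illustration
def nearest_digit(db_features, db_labels, query):
    """1-nearest-neighbour: return the label whose feature row is closest to query."""
    # squared Euclidean distance from query to every row (broadcasting over rows)
    d2 = np.sum((db_features - query) ** 2, axis=1)
    return db_labels[np.argmin(d2)]

# toy database: three "digits" summarized by 2 features instead of 10
db = np.array([[0.0, 0.0],
               [5.0, 5.0],
               [9.0, 1.0]])
labels = np.array([0, 3, 9])

print(nearest_digit(db, labels, np.array([8.0, 2.0])))  # 9
```

The squared distance ranks neighbours exactly like the distance itself, so the square root can be skipped, as the original `min(...)` call does.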

```python
import numpy as np
import matplotlib.pyplot as plt
from PIL import Image  # scipy.misc.imread was removed in SciPy 1.2; Pillow reads the PNGs instead

digits = []
for i in range(11):
    # load i.png as a 2-D float greyscale array (what imread(..., flatten=True) used to return)
    M = np.asarray(Image.open(str(i) + '.png').convert('F'), dtype=float)
    U, s, V = np.linalg.svd(M, full_matrices=False)  # V is already V^T (np.linalg.svd returns Vh)
    s[10:] = 0  # keep the 10 biggest singular values only, discard others
    S = np.diag(s)
    M_reduced = np.dot(U, np.dot(S, V))  # reconstruction of the image with the 10 biggest singular values
    digits.append({'original': M, 'singular': s[:10], 'reduced': M_reduced})

# each 50x50 pixels digit is summarized into a vector of 10 coefficients: the 10 biggest singular values s[:10]
# 0.png to 9.png = all the digits (for machine training)
# 10.png = the digit to be recognized
toberecognizeddigit = digits[10]
digits = digits[:10]

# we find the nearest neighbour by minimizing the distance between the singular values
# of toberecognizeddigit and those of all the digits in the database
recognizeddigit = min(digits, key=lambda d: np.sum((d['singular'] - toberecognizeddigit['singular'])**2))

plt.imshow(toberecognizeddigit['reduced'], interpolation='nearest', cmap=plt.cm.Greys_r)
plt.show()
plt.imshow(recognizeddigit['reduced'], interpolation='nearest', cmap=plt.cm.Greys_r)
plt.show()
```
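A property of these features worth knowing when interpreting the results: singular values are unchanged when the image is multiplied by an orthogonal matrix on either side, and flipping rows or columns is such a multiplication (by a permutation matrix). So an image, its upside-down version, its mirror image and its transpose all get exactly the same 10 coefficients, and the nearest-neighbour step cannot tell them apart. A minimal check on a random stand-in array (an assumption: not one of the archive's PNGs); `top_singular_values` is a hypothetical helper.

```python
import numpy as np

# top_singular_values is a hypothetical helper for illustration
def top_singular_values(M, k=10):
    # compute_uv=False makes np.linalg.svd return only the singular values, largest first
    return np.linalg.svd(M, compute_uv=False)[:k]

rng = np.random.default_rng(1)
M = rng.random((50, 50))  # stand-in for a 50x50 digit image

for name, T in [("upside down", np.flipud(M)), ("mirrored", np.fliplr(M)), ("transposed", M.T)]:
    # each transformed image has the same singular values as the original
    print(name, np.allclose(top_singular_values(T), top_singular_values(M)))
```

Only the 10 singular values are compared; the spatial information carried by `U` and `V` is not part of the feature vector.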

Question:

The code works (you can run it from the ZIP archive), but how can it be improved to give better results? I imagine mostly with mathematical techniques.

For example, in my tests 9 and 3 are sometimes confused with each other.