I am trying to encode H.264 video using MediaCodec and Camera (onPreviewFrame). I am stuck on converting the color space from YV12 (delivered by the camera) to COLOR_FormatYUV420SemiPlanar (required by the encoder).

Edit: I suspect this may be a MediaCodec bug on this device, since the following code works on other devices:

    public static byte[] YV12toYUV420PackedSemiPlanar(final byte[] input, final byte[] output, final int width, final int height) {
        /*
         * COLOR_TI_FormatYUV420PackedSemiPlanar is NV12
         * We convert by putting the corresponding U and V bytes together (interleaved).
         */
        final int frameSize = width * height;
        final int qFrameSize = frameSize / 4;

        System.arraycopy(input, 0, output, 0, frameSize); // Y

        for (int i = 0; i < qFrameSize; i++) {
            output[frameSize + i * 2] = input[frameSize + i + qFrameSize]; // Cb (U)
            output[frameSize + i * 2 + 1] = input[frameSize + i]; // Cr (V)
        }

        return output;
    }
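
For comparison, here is a minimal sketch of the same conversion that also accounts for row padding. It assumes the YV12 layout described in the `Camera.Parameters.setPreviewFormat` documentation (Y plane, then Cr, then Cb, with the luma stride and the chroma stride each rounded up to a multiple of 16), even width and height, and an encoder that accepts tightly packed NV12 with no extra alignment of its own. The class name `Yv12ToNv12` and the helper `align16` are hypothetical names used only for this illustration.

```java
public final class Yv12ToNv12 {
    private Yv12ToNv12() {}

    /** Rounds value up to the next multiple of 16. */
    static int align16(int value) {
        return (value + 15) & ~15;
    }

    /**
     * Converts a YV12 frame (Y, then Cr, then Cb, rows padded to 16-byte
     * strides) into tightly packed NV12 (Y, then interleaved Cb/Cr).
     * Assumption: width and height are even.
     */
    public static byte[] convert(byte[] input, int width, int height) {
        final int yStride = align16(width);
        final int cStride = align16(yStride / 2);
        final int ySize = yStride * height;
        final int cSize = cStride * height / 2;
        final int crOffset = ySize;          // Cr (V) plane comes first in YV12
        final int cbOffset = ySize + cSize;  // Cb (U) plane follows

        final int frameSize = width * height;
        final byte[] output = new byte[frameSize * 3 / 2];

        // Copy luma row by row, dropping any stride padding.
        for (int row = 0; row < height; row++) {
            System.arraycopy(input, row * yStride, output, row * width, width);
        }

        // Interleave chroma: NV12 stores Cb first, then Cr.
        int out = frameSize;
        for (int row = 0; row < height / 2; row++) {
            for (int col = 0; col < width / 2; col++) {
                output[out++] = input[cbOffset + row * cStride + col];
                output[out++] = input[crOffset + row * cStride + col];
            }
        }
        return output;
    }
}
```

For a 1280x720 frame both strides come out unpadded (1280 and 640), so under these assumptions the sketch produces the same bytes as the function above. Row padding on the camera side would therefore not explain the artifact at this resolution.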

This is the result I get (the color data seems to be offset):

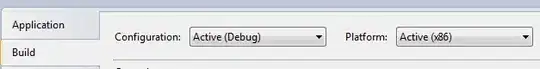

Edit 2: The frame size is 1280x720. The device is a Samsung Galaxy S5 (SM-G900V) using OMX.qcom.video.encoder.avc, running Android Lollipop 5.0 (API 21).

Note: I know about COLOR_FormatSurface, but I need this to work on API 16.