I’m working on a project where I will need to use the Kinect One, and I do not have much experience with this kind of sensor (neither with this new one nor with the old Kinect). So I started playing around with it and managed to acquire images using the Kinect SDK v2.0 and OpenCV. Now I would like to overlay the two views from the RGB sensor and the IR/depth sensor in order to create an RGB-D image. The Kinect SDK provides a class for this, CoordinateMapper, but it does not give sufficient results (in speed and quality), and I am also facing the problem that @api55 describes here. Therefore I would like to try doing it manually, also for learning purposes. The color camera has full HD resolution (1920x1080 pixels), while the depth/infrared image is 512x424 pixels, so the two sensors also have different aspect ratios. Searching around, I found that the proper way is to first calibrate the two sensors and then apply a mapping between the two views.

I am going to use OpenCV for both the calibration and the mapping. For the calibration, I noticed that people here and elsewhere suggest first calibrating the two sensors individually with calibrateCamera() and then passing the resulting camera matrices (cameraMatrix, the intrinsics) and distortion coefficients (distCoeffs) to stereoCalibrate(), in order to obtain the rotation (R) and translation (T) between the sensors, from which stereoRectify() then computes the other matrices (R1, R2, P1, P2, Q, etc.) needed later for the mapping. Using an asymmetric circles grid pattern, I managed to calibrate each sensor individually at its original resolution and aspect ratio, obtaining an RMS reprojection error of 0.3 and 0.1 for the RGB and IR sensors, respectively. Passing the extracted values to stereoCalibrate() with CV_CALIB_USE_INTRINSIC_GUESS and CV_CALIB_FIX_INTRINSIC, I obtained R and T with an RMS reprojection error of about 0.4, which also seems good.

Question 1

However, before I continue, I have a question. stereoCalibrate() has an image size parameter, and I used the size of the IR camera, since later on I would like to use this resolution to map the two views. On the other hand, I read somewhere that it does not play any role, since according to the OpenCV documentation it is only used to initialize the intrinsic camera matrices, which does not happen if stereoCalibrate() is called with CALIB_FIX_INTRINSIC. Is that correct? Moreover, do you think I should resize the high-resolution image to the size of the smaller one, since I am going to use the latter for the mapping? I guess I would also need to apply some cropping because of the different aspect ratios, right? Or is that not necessary?

Continuing without resizing or cropping, I then obtained everything needed for undistortion and rectification from stereoRectify(). So, together with the calibration results, I now have the cameraMatrix, distCoeffs, R, T, R1, R2, P1, P2 and Q matrices. Trying to undistort/rectify and remap the two views at their original sizes, I obtained the following result:

which seems to be quite good except the ring around the first image, which I do not know what it is, if someone could explain me I would be grateful. My guess is that most likely is a result of bad calibration? Also, in the initUndistortRectifyMap() I am using the big resolution sensor's size, otherwise I am getting something completely wrong, I do not understand that as well if someone could explain.

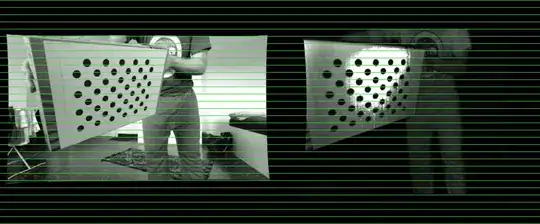

Now, if I resize the high-resolution image before any calibration (without cropping), I get much better results:

Of course, as you can see, the images look quite different because of the different aspect ratios.

Question 2

So, continuing from Question 1: what is the proper way to go? Can I use the images at their original sizes, or should I resize them? Should I also crop them? What am I doing wrong in the procedure?

Question 3

Assuming I manage to obtain the two views after calibration/undistortion/rectification, how do I overlay them? I have both the RGB and depth images, the cameraMatrix and distCoeffs of both sensors, and the R, T, R1, R2, P1, P2 and Q matrices. I read that I can use cv::projectPoints, but I could not find an example. I would appreciate it if someone could give me some hints.

Thanks in advance.

P.S. I can provide some code if needed. It is a bit messy at the moment, but if it helps, I am happy to share it.