Rather than having multiple worker processes, you can use mod_wsgi's WSGIDaemonProcess directive to have multiple worker threads which all run in a single process. That way, all the threads can share the same DB connection mapping.

With something like this in your Apache config...

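```apache
# mydomain.com.conf

<VirtualHost *:80>
    ServerName mydomain.com
    ServerAdmin webmaster@mydomain.com

    # Grant access only to the WSGI script, not the whole filesystem
    <Directory /path/to/django/root/myapp>
        <Files wsgi.py>
            Require all granted
        </Files>
    </Directory>

    # One daemon process with 50 threads, so every request runs in the
    # same Python interpreter and sees the same module-level globals
    WSGIDaemonProcess myapp processes=1 threads=50 python-path=/path/to/django/root display-name=%{GROUP}
    WSGIProcessGroup myapp

    WSGIScriptAlias / /path/to/django/root/myapp/wsgi.py
</VirtualHost>
```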

...you can then use something as simple as this in your Django app...

```python
# views.py

# threading.get_ident() (Python 3.3+) replaces the Python 2 "thread" module
import threading

from django.http import HttpResponse

# A global variable to hold the connection mappings
DB_CONNECTIONS = {}


# Fake up this "strangedb" module
class strangedb(object):

    class connection(object):

        def query(self, *args):
            return 'Query results for %r' % args

    @classmethod
    def connect(cls, *args):
        return cls.connection()


# View for homepage
def home(request, username='bob'):

    # Remember thread ID
    thread_info = 'Thread ID = %r' % threading.get_ident()

    # Connect only if we're not already connected
    if username in DB_CONNECTIONS:
        strangedb_connection = DB_CONNECTIONS[username]
        db_info = 'We reused an existing connection for %r' % username
    else:
        strangedb_connection = strangedb.connect(username)
        DB_CONNECTIONS[username] = strangedb_connection
        db_info = 'We made a connection for %r' % username

    # Fake up some query
    results = strangedb_connection.query('SELECT * FROM my_table')

    # Fake up an HTTP response
    text = '%s\n%s\n%s\n' % (thread_info, db_info, results)
    return HttpResponse(text, content_type='text/plain')
```

...which, on the first hit, produces...

```
Thread ID = 140597557241600
We made a connection for 'bob'
Query results for 'SELECT * FROM my_table'
```

...and, on the second...

```
Thread ID = 140597145999104
We reused an existing connection for 'bob'
Query results for 'SELECT * FROM my_table'
```

Obviously, you'll need to add something to tear down the DB connections when they're no longer required, but it's tough to know the best way to do that without more info about how your app is supposed to work.
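As one possible direction, here is a minimal sketch, under assumptions, of a per-user connection cache that records when each connection was last used and closes the ones that have sat idle too long. `ConnectionRegistry`, `reap_idle()`, `FakeConnection` and its `close()` method are all hypothetical; nothing in the question says whether strangedb connections can actually be closed this way.

```python
import threading
import time


class FakeConnection:
    """Stand-in for a strangedb connection; close() is an assumed method."""

    def __init__(self, username):
        self.username = username
        self.closed = False

    def close(self):
        self.closed = True


class ConnectionRegistry:
    """Hypothetical per-user connection cache that closes idle connections."""

    def __init__(self, connect, max_idle_seconds=300.0, clock=time.monotonic):
        self._connect = connect
        self._max_idle = max_idle_seconds
        self._clock = clock
        self._lock = threading.Lock()
        self._entries = {}  # username -> [connection, last_used_time]

    def get(self, username):
        """Return the user's connection, opening one if needed."""
        with self._lock:
            entry = self._entries.get(username)
            if entry is None:
                entry = [self._connect(username), 0.0]
                self._entries[username] = entry
            entry[1] = self._clock()  # mark as recently used
            return entry[0]

    def reap_idle(self):
        """Close and forget connections unused for longer than max_idle_seconds."""
        now = self._clock()
        with self._lock:
            stale = [name for name, (_, last_used) in self._entries.items()
                     if now - last_used > self._max_idle]
            for name in stale:
                conn, _ = self._entries.pop(name)
                conn.close()
        return stale
```

`reap_idle()` would need to be called periodically, e.g. from a background thread. A real version would also have to avoid closing a connection that another thread is still using for a query, which this sketch does not handle.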

Update #1: Regarding I/O multiplexing vs multithreading

> I worked with threads twice in my life and each time it was a nightmare. A lot of time was wasted on debugging non-reproducible problems. I think an event-driven and a non-blocking I/O architecture might be more solid.

A solution using I/O multiplexing might be better, but would be more complex, and would also require your "strangedb" library to support it, i.e. it would have to be able to handle EAGAIN/EWOULDBLOCK and have the capacity to retry the system call when necessary.
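To give a rough idea of what "handle EAGAIN/EWOULDBLOCK and retry" involves, here is a minimal sketch using Python's standard `selectors` module on a plain non-blocking socket. It is not strangedb's API, which is unknown; `recv_with_retry()` and `demo()` are hypothetical names, and a `socket.socketpair()` stands in for the DB connection.

```python
import selectors
import socket
import threading


def recv_with_retry(sock, bufsize, timeout=1.0):
    """Read from a non-blocking socket, waiting and retrying on EAGAIN/EWOULDBLOCK."""
    sel = selectors.DefaultSelector()
    sel.register(sock, selectors.EVENT_READ)
    try:
        while True:
            try:
                return sock.recv(bufsize)
            except BlockingIOError:
                # Python raises BlockingIOError when the OS reports
                # EAGAIN/EWOULDBLOCK, i.e. no data is available yet.
                # Wait until the socket becomes readable, then retry.
                if not sel.select(timeout):
                    raise TimeoutError('no data within %.1f seconds' % timeout)
    finally:
        sel.unregister(sock)
        sel.close()


def demo():
    # A connected socket pair stands in for a (hypothetical) DB connection.
    client, server = socket.socketpair()
    client.setblocking(False)
    try:
        # Simulate the "server" replying 0.1 s later, so the first recv() would block.
        threading.Timer(0.1, server.sendall, args=(b'hello',)).start()
        return recv_with_retry(client, 1024)
    finally:
        client.close()
        server.close()
```

This only shows the retry part. A genuinely event-driven design would register many connections on one selector and service them all from a single loop, which is where the extra complexity comes from.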

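Multithreading in Python is far less dangerous than in most other languages, due to Python's GIL, which ensures that only one thread executes Python bytecode at a time, so each individual bytecode operation on a built-in type (e.g. a single dict lookup or assignment) is effectively atomic.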

In practice, threads only run truly concurrently when the underlying C code releases the GIL with the Py_BEGIN_ALLOW_THREADS macro; it and its counterpart, Py_END_ALLOW_THREADS, are typically wrapped around blocking system calls and some CPU-intensive operations.

The upside of this is that thread collisions in Python code are much rarer than in a language like C, although compound operations such as check-then-set can still interleave; the downside is that it won't always make optimal use of multiple CPU cores on a single machine.
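For example, the `if username in DB_CONNECTIONS` check and the later `DB_CONNECTIONS[username] = ...` assignment in the view above are two separate steps, so two threads handling the same user at the same moment could both miss the entry and each open a connection. A minimal sketch of guarding that sequence with a `threading.Lock`; `get_connection()`, `CountingConnector` and `hammer()` are illustrative names, not part of Django or strangedb.

```python
import threading

DB_CONNECTIONS = {}
DB_CONNECTIONS_LOCK = threading.Lock()


class CountingConnector:
    """Fake connect() that records how many connections were opened per user."""

    def __init__(self):
        self.calls = {}
        self._lock = threading.Lock()

    def connect(self, username):
        with self._lock:
            self.calls[username] = self.calls.get(username, 0) + 1
        return object()  # placeholder for a real connection


def get_connection(connector, username):
    # Hold the lock across the whole check-then-set, so two threads can't
    # both see a missing entry and each open a connection for the same user.
    with DB_CONNECTIONS_LOCK:
        conn = DB_CONNECTIONS.get(username)
        if conn is None:
            conn = connector.connect(username)
            DB_CONNECTIONS[username] = conn
        return conn


def hammer(connector, usernames, threads=20):
    """Have many threads request connections at once; return (name, conn) pairs."""
    results = []

    def worker():
        for name in usernames:
            # list.append is a single atomic operation under the GIL.
            results.append((name, get_connection(connector, name)))

    workers = [threading.Thread(target=worker) for _ in range(threads)]
    for t in workers:
        t.start()
    for t in workers:
        t.join()
    return results
```

A single global lock serializes every first-time connect, even for different users; a per-user lock would avoid that at the cost of a little more code.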

The reason I suggest the above solution is that it's relatively simple, and would require minimal code changes, but there may be a better option if you could elaborate more on your "strangedb" library. It seems rather odd to have a DB which requires a separate network connection per concurrent user.

Update #2: Regarding multiprocessing vs multithreading

> ...the GIL limitations around threading seem to be a bit of an issue. Isn't this one of the reasons why the trend is to use separate processes instead?

That's quite possibly the main reason why Python's multiprocessing module exists, i.e. to provide concurrent execution of Python bytecode across multiple CPU cores, although that module also contains a long-undocumented ThreadPool class (multiprocessing.pool.ThreadPool), which uses threads rather than processes.

The "GIL limitations" would certainly be problematic in cases where you really need to exploit every single CPU cycle on every CPU core, e.g. if you were writing a computer game which had to render 60 frames per second in high-definition.

Most web-based services, however, are likely to spend most of their time waiting for something to happen, e.g. network I/O or disk I/O, which Python threads will allow to occur concurrently.
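A rough illustration of that point, using the `ThreadPool` class mentioned above: `time.sleep()` releases the GIL just as a blocking socket or disk call does, so ten simulated 0.2-second I/O operations spread over ten threads should finish in roughly 0.2 seconds rather than 2. `fake_io()` and `run()` are made-up names, and real I/O timings will vary.

```python
import time
from multiprocessing.pool import ThreadPool


def fake_io(request_id):
    # time.sleep() releases the GIL, standing in for a blocking network/disk call.
    time.sleep(0.2)
    return request_id


def run(n_requests=10, n_threads=10):
    """Run n_requests simulated I/O calls on a thread pool; return (results, seconds)."""
    start = time.monotonic()
    with ThreadPool(n_threads) as pool:
        results = pool.map(fake_io, range(n_requests))
    return results, time.monotonic() - start
```

Swapping `fake_io()` for a CPU-bound loop would show the opposite: the threads would take turns holding the GIL and the total time would not shrink.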

Ultimately, it's a trade-off between performance and maintainability, and given that hardware is usually much cheaper than a developer's time, favoring maintainability over performance is usually more cost-effective.

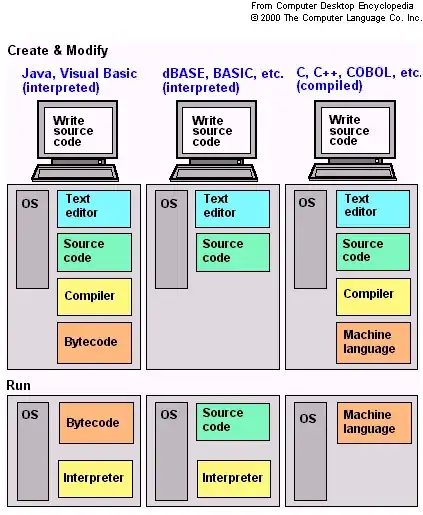

Frankly, the moment you decide to use a virtual machine language, such as Python, instead of a language which compiles into real machine code, such as C, you're already saying that you're prepared to sacrifice some performance in exchange for convenience.

See also "The C10K problem" for a comparison of techniques for scaling web-based services.