I'm trying to fill the fields of a single item with data from several different pages using Scrapy.

What I am trying to do:

1. Start at main_url, scrape all the links from that page, and go to each link.

2. From each link, scrape some info, put it in the items list, and go to a second link.

3. From the second link, scrape more info and put it in the same items list.

4. Move on to the next link and repeat steps 2 and 3.

When all the links are done, go to the next page and repeat from step 1.
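
To make the flow above concrete, here is a minimal plain-Python sketch of the behaviour I'm after. It does not use Scrapy at all: `PAGES`, the `Request` stand-in, `crawl`, the `parse_info1`/`parse_info2` callbacks, and the sample values are all made up for illustration, and I'm assuming each link on the main page pairs with exactly one second link.

```python
from collections import deque

# Hypothetical stand-in for the site: URL -> page content (not real data).
PAGES = {
    "main": [("a1", "a2"), ("b1", "b2")],  # pairs of links found on the main page
    "a1": {"info1": "A-1", "info2": "A-2"},
    "a2": {"info3": "A-3", "info4": "A-4"},
    "b1": {"info1": "B-1", "info2": "B-2"},
    "b2": {"info3": "B-3", "info4": "B-4"},
}


class Request:
    """Minimal stand-in for a request: a URL, a callback, and carried data."""

    def __init__(self, url, callback, meta=None):
        self.url = url
        self.callback = callback
        self.meta = meta or {}


def parse(page, meta):
    # One fresh item per pair of links; start with the first link of the pair.
    for link1, link2 in page:
        yield Request(link1, parse_info1, meta={"item": {}, "next": link2})


def parse_info1(page, meta):
    # Fill the first two fields, then chain to the second link
    # instead of emitting the half-filled item.
    item = meta["item"]
    item["info1"] = page["info1"]
    item["info2"] = page["info2"]
    yield Request(meta["next"], parse_info2, meta={"item": item})


def parse_info2(page, meta):
    # Complete the same item and only now emit it.
    item = meta["item"]
    item["info3"] = page["info3"]
    item["info4"] = page["info4"]
    yield item


def crawl(start_url):
    """Tiny driver: run callbacks, follow requests, collect finished items."""
    queue = deque([Request(start_url, parse)])
    results = []
    while queue:
        request = queue.popleft()
        for output in request.callback(PAGES[request.url], request.meta):
            if isinstance(output, Request):
                queue.append(output)
            else:
                results.append(output)
    return results


rows = crawl("main")
```

With this toy data, `rows` ends up holding one dict per link pair, each with all four fields filled in, which is the shape I'd like every CSV row to have.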

I found some information in the question below, but I still can't get the results I want:

How can i use multiple requests and pass items in between them in scrapy python

Goal: get the results in the layout below.

Here is what I've done so far.

My item class

    from scrapy.item import Item, Field

    class myItems(Item):
        info1 = Field()
        info2 = Field()
        info3 = Field()
        info4 = Field()

My spider class

    import scrapy
    from scrapy.http import Request

    from myProject.items import myItems

    class mySpider(scrapy.Spider):
        name = 'spider1'
        start_urls = ['main_link']

        def parse(self, response):
            items = []
            list1 = response.xpath().extract()  # extract all info from here
            list2 = response.xpath().extract()  # extract all info from here

            for i, j in zip(list1, list2):
                # First link: fills info1 and info2
                link1 = 'http...' + i
                request = Request(link1, self.parseInfo1, dont_filter=True)
                request.meta['item'] = items
                yield request

                # Second link: fills info3 and info4
                link2 = 'https...' + j
                request = Request(link2, self.parseInfo2, meta={'item': items}, dont_filter=True)
                yield request

            # Code for crawling to next page

        def parseInfo1(self, response):
            item = myItems()
            items = response.meta['item']
            item['info1'] = response.xpath().extract()
            item['info2'] = response.xpath().extract()
            items.append(item)
            return items

        def parseInfo2(self, response):
            item = myItems()
            items = response.meta['item']
            item['info3'] = response.xpath().extract()
            item['info4'] = response.xpath().extract()
            items.append(item)
            return items

I ran the spider from the terminal with:

    scrapy crawl spider1 -o filename.csv -t csv

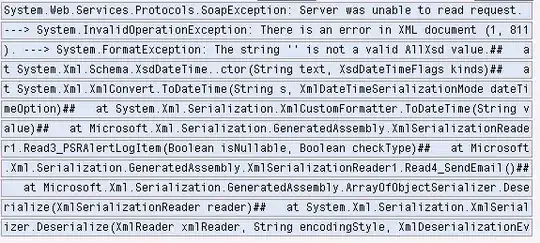

I got results for all the fields, but they are not in the right order. My CSV file looks like this:

Does anyone know how to get the results laid out as in my "Goal" above? I'd appreciate the help.

Thanks