Code1:

    vzeroall
    mov rcx, 1000000
    startLabel1:
    vfmadd231ps ymm0, ymm0, ymm0
    vfmadd231ps ymm1, ymm1, ymm1
    vfmadd231ps ymm2, ymm2, ymm2
    vfmadd231ps ymm3, ymm3, ymm3
    vfmadd231ps ymm4, ymm4, ymm4
    vfmadd231ps ymm5, ymm5, ymm5
    vfmadd231ps ymm6, ymm6, ymm6
    vfmadd231ps ymm7, ymm7, ymm7
    vfmadd231ps ymm8, ymm8, ymm8
    vfmadd231ps ymm9, ymm9, ymm9
    vpaddd ymm10, ymm10, ymm10
    vpaddd ymm11, ymm11, ymm11
    vpaddd ymm12, ymm12, ymm12
    vpaddd ymm13, ymm13, ymm13
    vpaddd ymm14, ymm14, ymm14
    dec rcx
    jnz startLabel1

Code2:

    vzeroall
    mov rcx, 1000000
    startLabel2:
    vmulps ymm0, ymm0, ymm0
    vmulps ymm1, ymm1, ymm1
    vmulps ymm2, ymm2, ymm2
    vmulps ymm3, ymm3, ymm3
    vmulps ymm4, ymm4, ymm4
    vmulps ymm5, ymm5, ymm5
    vmulps ymm6, ymm6, ymm6
    vmulps ymm7, ymm7, ymm7
    vmulps ymm8, ymm8, ymm8
    vmulps ymm9, ymm9, ymm9
    vpaddd ymm10, ymm10, ymm10
    vpaddd ymm11, ymm11, ymm11
    vpaddd ymm12, ymm12, ymm12
    vpaddd ymm13, ymm13, ymm13
    vpaddd ymm14, ymm14, ymm14
    dec rcx
    jnz startLabel2

Code3 (same as Code2 but with long VEX prefix):

    vzeroall
    mov rcx, 1000000
    startLabel3:
    byte 0c4h, 0c1h, 07ch, 059h, 0c0h ;long VEX form vmulps ymm0, ymm0, ymm0
    byte 0c4h, 0c1h, 074h, 059h, 0c9h ;long VEX form vmulps ymm1, ymm1, ymm1
    byte 0c4h, 0c1h, 06ch, 059h, 0d2h ;long VEX form vmulps ymm2, ymm2, ymm2
    byte 0c4h, 0c1h, 06ch, 059h, 0dbh ;long VEX form vmulps ymm3, ymm3, ymm3
    byte 0c4h, 0c1h, 05ch, 059h, 0e4h ;long VEX form vmulps ymm4, ymm4, ymm4
    byte 0c4h, 0c1h, 054h, 059h, 0edh ;long VEX form vmulps ymm5, ymm5, ymm5
    byte 0c4h, 0c1h, 04ch, 059h, 0f6h ;long VEX form vmulps ymm6, ymm6, ymm6
    byte 0c4h, 0c1h, 044h, 059h, 0ffh ;long VEX form vmulps ymm7, ymm7, ymm7
    vmulps ymm8, ymm8, ymm8
    vmulps ymm9, ymm9, ymm9
    vpaddd ymm10, ymm10, ymm10
    vpaddd ymm11, ymm11, ymm11
    vpaddd ymm12, ymm12, ymm12
    vpaddd ymm13, ymm13, ymm13
    vpaddd ymm14, ymm14, ymm14
    dec rcx
    jnz startLabel3

Code4 (same as Code1 but with xmm registers):

    vzeroall
    mov rcx, 1000000
    startLabel4:
    vfmadd231ps xmm0, xmm0, xmm0
    vfmadd231ps xmm1, xmm1, xmm1
    vfmadd231ps xmm2, xmm2, xmm2
    vfmadd231ps xmm3, xmm3, xmm3
    vfmadd231ps xmm4, xmm4, xmm4
    vfmadd231ps xmm5, xmm5, xmm5
    vfmadd231ps xmm6, xmm6, xmm6
    vfmadd231ps xmm7, xmm7, xmm7
    vfmadd231ps xmm8, xmm8, xmm8
    vfmadd231ps xmm9, xmm9, xmm9
    vpaddd xmm10, xmm10, xmm10
    vpaddd xmm11, xmm11, xmm11
    vpaddd xmm12, xmm12, xmm12
    vpaddd xmm13, xmm13, xmm13
    vpaddd xmm14, xmm14, xmm14
    dec rcx
    jnz startLabel4

Code5 (same as Code1 but with non-zeroing vpsubds):

    vzeroall
    mov rcx, 1000000
    startLabel5:
    vfmadd231ps ymm0, ymm0, ymm0
    vfmadd231ps ymm1, ymm1, ymm1
    vfmadd231ps ymm2, ymm2, ymm2
    vfmadd231ps ymm3, ymm3, ymm3
    vfmadd231ps ymm4, ymm4, ymm4
    vfmadd231ps ymm5, ymm5, ymm5
    vfmadd231ps ymm6, ymm6, ymm6
    vfmadd231ps ymm7, ymm7, ymm7
    vfmadd231ps ymm8, ymm8, ymm8
    vfmadd231ps ymm9, ymm9, ymm9
    vpsubd ymm10, ymm10, ymm11
    vpsubd ymm11, ymm11, ymm12
    vpsubd ymm12, ymm12, ymm13
    vpsubd ymm13, ymm13, ymm14
    vpsubd ymm14, ymm14, ymm10
    dec rcx
    jnz startLabel5

Code6b: (revised, memory operands for vpaddds only)

    vzeroall
    mov rcx, 1000000
    startLabel6:
    vfmadd231ps ymm0, ymm0, ymm0
    vfmadd231ps ymm1, ymm1, ymm1
    vfmadd231ps ymm2, ymm2, ymm2
    vfmadd231ps ymm3, ymm3, ymm3
    vfmadd231ps ymm4, ymm4, ymm4
    vfmadd231ps ymm5, ymm5, ymm5
    vfmadd231ps ymm6, ymm6, ymm6
    vfmadd231ps ymm7, ymm7, ymm7
    vfmadd231ps ymm8, ymm8, ymm8
    vfmadd231ps ymm9, ymm9, ymm9
    vpaddd ymm10, ymm10, [mem]
    vpaddd ymm11, ymm11, [mem]
    vpaddd ymm12, ymm12, [mem]
    vpaddd ymm13, ymm13, [mem]
    vpaddd ymm14, ymm14, [mem]
    dec rcx
    jnz startLabel6

Code7: (same as Code1 but vpaddds use ymm15)

    vzeroall
    mov rcx, 1000000
    startLabel7:
    vfmadd231ps ymm0, ymm0, ymm0
    vfmadd231ps ymm1, ymm1, ymm1
    vfmadd231ps ymm2, ymm2, ymm2
    vfmadd231ps ymm3, ymm3, ymm3
    vfmadd231ps ymm4, ymm4, ymm4
    vfmadd231ps ymm5, ymm5, ymm5
    vfmadd231ps ymm6, ymm6, ymm6
    vfmadd231ps ymm7, ymm7, ymm7
    vfmadd231ps ymm8, ymm8, ymm8
    vfmadd231ps ymm9, ymm9, ymm9
    vpaddd ymm10, ymm15, ymm15
    vpaddd ymm11, ymm15, ymm15
    vpaddd ymm12, ymm15, ymm15
    vpaddd ymm13, ymm15, ymm15
    vpaddd ymm14, ymm15, ymm15
    dec rcx
    jnz startLabel7

Code8: (same as Code7 but uses xmm instead of ymm)

    vzeroall
    mov rcx, 1000000
    startLabel8:
    vfmadd231ps xmm0, xmm0, xmm0
    vfmadd231ps xmm1, xmm1, xmm1
    vfmadd231ps xmm2, xmm2, xmm2
    vfmadd231ps xmm3, xmm3, xmm3
    vfmadd231ps xmm4, xmm4, xmm4
    vfmadd231ps xmm5, xmm5, xmm5
    vfmadd231ps xmm6, xmm6, xmm6
    vfmadd231ps xmm7, xmm7, xmm7
    vfmadd231ps xmm8, xmm8, xmm8
    vfmadd231ps xmm9, xmm9, xmm9
    vpaddd xmm10, xmm15, xmm15
    vpaddd xmm11, xmm15, xmm15
    vpaddd xmm12, xmm15, xmm15
    vpaddd xmm13, xmm15, xmm15
    vpaddd xmm14, xmm15, xmm15
    dec rcx
    jnz startLabel8

Measured TSC clocks with Turbo and C1E disabled:

                Haswell         Broadwell                  Skylake
    CPUID       306C3, 40661    306D4, 40671               506E3
    Code1       ~5000000        ~7730000 -> ~54% slower    ~5500000 -> ~10% slower
    Code2       ~5000000        ~5000000                   ~5000000
    Code3       ~6000000        ~5000000                   ~5000000
    Code4       ~5000000        ~7730000                   ~5500000
    Code5       ~5000000        ~7730000                   ~5500000
    Code6b      ~5000000        ~8380000                   ~5500000
    Code7       ~5000000        ~5000000                   ~5000000
    Code8       ~5000000        ~5000000                   ~5000000
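To put the Code1 row in per-iteration terms, the totals can be divided by the 1000000 loop iterations. The sketch below is plain arithmetic on the measured values. The helper names are made up for illustration, and the port assignment (FMAs on Port0 and Port1, vpaddd on Port5) is an assumption taken from the IACA Haswell report quoted further down in this post.

```python
# Arithmetic on the approximate TSC totals measured for Code1.
ITERATIONS = 1_000_000  # loop count loaded into rcx

code1_clocks = {"Haswell": 5_000_000, "Broadwell": 7_730_000, "Skylake": 5_500_000}


def cycles_per_iteration(total_clocks, iterations=ITERATIONS):
    """Average clocks spent on one pass through the loop body."""
    return total_clocks / iterations


def slowdown_percent(total_clocks, baseline_clocks):
    """How much slower a run is than the baseline, in percent."""
    return (total_clocks / baseline_clocks - 1.0) * 100.0


# Assumed port binding (from the IACA HSW report): 10 FMAs split over two
# ports, 5 vpaddd on one port, so no port needs more than 5 cycles per pass.
port_bound = max(10 / 2, 5 / 1)

baseline = code1_clocks["Haswell"]
for cpu, clocks in code1_clocks.items():
    print(f"{cpu:10s} {cycles_per_iteration(clocks):.2f} cycles/iter, "
          f"{slowdown_percent(clocks, baseline):.1f}% over Haswell, "
          f"port bound {port_bound:.1f} cycles/iter")
```

Under that assumption Haswell runs Code1 right at the port bound, while Broadwell spends roughly 2.7 extra cycles on every iteration.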

Can somebody explain what happens with Code1 on Broadwell?

My guess is that Broadwell somehow contaminates Port1 with the vpaddds in the Code1 case, whereas Haswell is able to use Port5 only if Port0 and Port1 are full.

Do you have any idea how to reach ~5000000 clks on Broadwell with FMA instructions?

I tried reordering the instructions. I see similar behavior with double and qword variants.

I used Windows 8.1 and Windows 10.

Update:

Added Code3, Marat Dukhan's idea, with the long VEX form.

Extended the result table with Skylake results.

Uploaded a VS2015 Community + MASM sample code here

Update2:

I tried xmm registers instead of ymm (Code4). Same result on Broadwell.

Update3:

I added Code5, Peter Cordes' idea: substitute the vpaddds with other instructions (vpxor, vpor, vpand, vpandn, vpsubd). If the new instruction is not a zeroing idiom (vpxor or vpsubd with the same register), the result is the same on BDW. Sample project updated with Code4 and Code5.

Update4:

I added Code6, Stephen Canon's idea (memory operands). The result is ~8200000 clks. Sample project updated with Code6.

I checked the CPU frequency and possible throttling with the System Stability Test of AIDA64. The frequency is stable and there is no sign of throttling.

Intel IACA 2.1 Haswell throughput analysis:

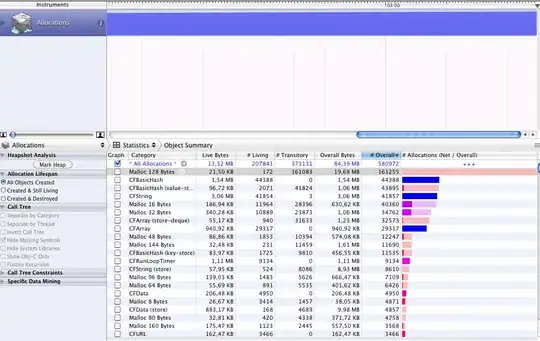

    Intel(R) Architecture Code Analyzer Version - 2.1
    Analyzed File - Assembly.obj
    Binary Format - 64Bit
    Architecture  - HSW
    Analysis Type - Throughput

    Throughput Analysis Report
    --------------------------
    Block Throughput: 5.10 Cycles       Throughput Bottleneck: Port0, Port1, Port5

    Port Binding In Cycles Per Iteration:
    ---------------------------------------------------------------------------------------
    |  Port  |  0   -  DV  |  1   |  2   -  D   |  3   -  D   |  4   |  5   |  6   |  7   |
    ---------------------------------------------------------------------------------------
    | Cycles | 5.0    0.0  | 5.0  | 0.0    0.0  | 0.0    0.0  | 0.0  | 5.0  | 1.0  | 0.0  |
    ---------------------------------------------------------------------------------------

    | Num Of |                    Ports pressure in cycles                     |    |
    |  Uops  |  0  - DV  |  1  |  2  -  D  |  3  -  D  |  4  |  5  |  6  |  7  |    |
    ---------------------------------------------------------------------------------
    |   1    | 1.0       |     |           |           |     |     |     |     | CP | vfmadd231ps ymm0, ymm0, ymm0
    |   1    |           | 1.0 |           |           |     |     |     |     | CP | vfmadd231ps ymm1, ymm1, ymm1
    |   1    | 1.0       |     |           |           |     |     |     |     | CP | vfmadd231ps ymm2, ymm2, ymm2
    |   1    |           | 1.0 |           |           |     |     |     |     | CP | vfmadd231ps ymm3, ymm3, ymm3
    |   1    | 1.0       |     |           |           |     |     |     |     | CP | vfmadd231ps ymm4, ymm4, ymm4
    |   1    |           | 1.0 |           |           |     |     |     |     | CP | vfmadd231ps ymm5, ymm5, ymm5
    |   1    | 1.0       |     |           |           |     |     |     |     | CP | vfmadd231ps ymm6, ymm6, ymm6
    |   1    |           | 1.0 |           |           |     |     |     |     | CP | vfmadd231ps ymm7, ymm7, ymm7
    |   1    | 1.0       |     |           |           |     |     |     |     | CP | vfmadd231ps ymm8, ymm8, ymm8
    |   1    |           | 1.0 |           |           |     |     |     |     | CP | vfmadd231ps ymm9, ymm9, ymm9
    |   1    |           |     |           |           |     | 1.0 |     |     | CP | vpaddd ymm10, ymm10, ymm10
    |   1    |           |     |           |           |     | 1.0 |     |     | CP | vpaddd ymm11, ymm11, ymm11
    |   1    |           |     |           |           |     | 1.0 |     |     | CP | vpaddd ymm12, ymm12, ymm12
    |   1    |           |     |           |           |     | 1.0 |     |     | CP | vpaddd ymm13, ymm13, ymm13
    |   1    |           |     |           |           |     | 1.0 |     |     | CP | vpaddd ymm14, ymm14, ymm14
    |   1    |           |     |           |           |     |     | 1.0 |     |    | dec rcx
    |   0F   |           |     |           |           |     |     |     |     |    | jnz 0xffffffffffffffaa
    Total Num Of Uops: 16

I followed jcomeau_ictx's idea and modified Agner Fog's testp.zip (published 2015-12-22). The port usage on the BDW 306D4:

               Clock   Core cyc   Instruct     uop p0     uop p1     uop p5     uop p6
    Code1:   7734720    7734727   17000001    4983410    5016592    5000001    1000001
    Code2:   5000072    5000072   17000001    5000010    5000014    4999978    1000002

The port distribution is nearly perfect, as on Haswell. Then I checked the resource stall counters (event 0xa2):

               Clock   Core cyc   Instruct   res.stl.    RS stl.   SB stl.   ROB stl.
    Code1:   7736212    7736213   17000001    3736191    3736143         0          0
    Code2:   5000068    5000072   17000001    1000050     999957         0          0

It seems to me that the difference between Code1 and Code2 comes from the RS stalls. Remark from the Intel SDM: "Cycles stalled due to no eligible RS entry available."
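The RS-stall reading can be checked with a subtraction: if the extra stall cycles account for the slowdown, the Code1 minus Code2 difference in core cycles should roughly equal the difference in RS stall cycles. A minimal sketch using only the counter values from the table above (the dictionary keys are illustrative names, not counter names from testp):

```python
# Counter values copied from the Broadwell (306D4) resource-stall table.
code1 = {"core_cyc": 7_736_213, "res_stall": 3_736_191, "rs_stall": 3_736_143}
code2 = {"core_cyc": 5_000_072, "res_stall": 1_000_050, "rs_stall": 999_957}


def delta(key):
    """Code1 minus Code2 for one counter."""
    return code1[key] - code2[key]


extra_cycles = delta("core_cyc")      # extra core cycles spent by Code1
extra_rs_stalls = delta("rs_stall")   # extra RS stall cycles seen by Code1
ratio = extra_rs_stalls / extra_cycles

print(f"extra core cycles:     {extra_cycles}")
print(f"extra RS stall cycles: {extra_rs_stalls}")
print(f"RS stalls / extra cycles: {ratio:.4f}")
```

The two differences agree to within a few dozen cycles out of about 2.7 million, which is consistent with the slowdown being made up almost entirely of RS stall cycles. It does not say why the RS fills up.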

How can I avoid this stall with FMA?

Update5:

I changed Code6 after Peter Cordes pointed it out: now only the vpaddds use memory operands. No effect on HSW and SKL; BDW gets worse.

As Marat Dukhan measured, not just vpadd/vpsub/vpand/vpandn/vpxor are affected, but also other Port5-bound instructions like vmovaps, vblendps, vpermps, vshufps and vbroadcastss.

As IwillnotexistIdonotexist suggested, I tried other operands. A successful modification is Code7, where all vpaddds use ymm15. This version can reach ~5000000 clks on BDW, but only for a while. After ~6 million FMA pairs it falls back to the usual ~7730000 clks:

      Clock   Core cyc   Instruct   res.stl.    RS stl.   SB stl.   ROB stl.
    5133724    5110723   17000001    1107998     946376         0          0
    6545476    6545482   17000001    2545453          1         0          0
    6545468    6545471   17000001    2545437      90910         0          0
    5000016    5000019   17000001     999992     999992         0          0
    7671620    7617127   17000003    3614464    3363363         0          0
    7737340    7737345   17000001    3737321    3737259         0          0
    7802916    7747108   17000003    3737478    3735919         0          0
    7928784    7796057   17000007    3767962    3676744         0          0
    7941072    7847463   17000003    3781103    3651595         0          0
    7787812    7779151   17000005    3765109    3685600         0          0
    7792524    7738029   17000002    3736858    3736764         0          0
    7736000    7736007   17000001    3735983    3735945         0          0

I tried the xmm version of Code7 as Code8. The effect is similar, but the faster runtime is sustained longer. I haven't found a significant difference between a 1.6GHz i5-5250U and a 3.7GHz i7-5775C.
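Going back to the Code7 counter series above, one more piece of arithmetic: subtracting the resource-stall cycles from the core cycles of each run. This is a sketch on the listed values only. Treating the remainder as "cycles without a resource stall" is an interpretation of the counters, not something the counters state directly, and the variable names are illustrative.

```python
# (core cycles, resource stall cycles) for the 12 Code7 runs listed above.
runs = [
    (5_110_723, 1_107_998), (6_545_482, 2_545_453), (6_545_471, 2_545_437),
    (5_000_019, 999_992), (7_617_127, 3_614_464), (7_737_345, 3_737_321),
    (7_747_108, 3_737_478), (7_796_057, 3_767_962), (7_847_463, 3_781_103),
    (7_779_151, 3_765_109), (7_738_029, 3_736_858), (7_736_007, 3_735_983),
]
ITERATIONS = 1_000_000  # loop count loaded into rcx

# Cycles in which no resource stall was counted, per run.
non_stalled = [core - stall for core, stall in runs]

for (core, stall), rest in zip(runs, non_stalled):
    print(f"{core / ITERATIONS:5.2f} cycles/iter total, "
          f"{stall / ITERATIONS:5.2f} stalled, "
          f"{rest / ITERATIONS:5.2f} not stalled")
```

In every run the non-stalled part stays close to 4 cycles per iteration, both in the fast (~5.0) and in the slow (~7.7) runs, so the whole variation between runs sits in the resource-stall cycles.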

16 and 17 were made with HyperThreading disabled. With HTT enabled the effect is smaller.