This question may evidently have different answers on different systems, and in this sense it is ill-posed from the start. For example an i486 has no pipeline and a pentium has no SSE.

The correct question to ask would be: " what is the fastest way to

convert a single char hex to dec in X system , e.g. i686 " .

Among approaches herein, the answer to this is actually the same or very very very nearly the same on a system with a multi-stage pipeline. Any system without a pipeline will bend towards the lookup table method (LUT), but if memory access is slow the conditional method (CEV), or the bitwise evaluation method (BEV), may profit depending of the speed of xor vs load for the given CPU.

(CEV) decomposes into 2 load effective addresses a comparison and a conditional move from registers which is not prone to mis-prediction. All these commands are pairable in the pentium pipeline. So they actually go in 1-cycle.

8d 57 d0 lea -0x30(%rdi),%edx

83 ff 39 cmp $0x39,%edi

8d 47 a9 lea -0x57(%rdi),%eax

0f 4e c2 cmovle %edx,%eax

The (LUT) decomposes into a mov between registers and mov from a data dependent memory location plus some nops for alignment, and should take the minimum of 1-cycle. As the previous there are only data dependencies.

48 63 ff movslq %edi,%rdi

8b 04 bd 00 1d 40 00 mov 0x401d00(,%rdi,4),%eax

The (BEV) is a different beast as it actually requires 2 movs + 2 xors + 1 and a conditional mov. These can also be nicely pipelined.

89 fa mov %edi,%edx

89 f8 mov %edi,%eax

83 f2 57 xor $0x57,%edx

83 f0 30 xor $0x30,%eax

83 e7 40 and $0x40,%edi

0f 45 c2 cmovne %edx,%eax

Of course, it is a very rare occasion that it is application critical (maybe Mars Pathfinder is a candidate) to convert just a signle char. Instead one would expect to convert a larger string by actually making a loop and calling that function.

Thus on such a scenario the code that is better vectorizable is the winner. The LUT does not vectorize, and BEV and CEV have better behaviour. In general such micro-optimization does not get you anywhere, write your code and let live (i.e. let the compiler run).

So I have actually built some tests in this sense that are easily reproducible on any system with a c++11 compiler and a random device source,such as any *nix system. If one does not permit vectorization -O2 CEV/LUT are almost equal, but once -O3 is set the advantage of writing code that is more decomposable shows the difference.

To summarised, if you have an old compiler use LUT, if

your system is low-end or old consider BEV, otherwise the compiler

will outsmart you and you should use CEV.

Problem: in question is to convert from the set of chars {0,1,2,3,4,5,6,7,8,9,a,b,c,d,e,f} to the set of {0,1,2,3,4,5,6,7,8,9,10,11,12,13,14,15}. There are no capital letters under consideration.

The idea is to take advantage of the linearity of the ascii table in segments.

[Simple and easy]: Conditional evaluation -> CEV

int decfromhex(int const x)

{

return x<58?x-48:x-87;

}

[Dirty and complex]: Bitwise evaluation -> BEV

int decfromhex(int const x)

{

return 9*(x&16)+( x & 0xf );

}

[compile time]: Template conditional evaluation -> TCV

template<char n> int decfromhex()

{

int constexpr x = n;

return x<58 ? x-48 : x -87;

}

[Lookup table]: Lookup table -> LUT

int decfromhex(char n)

{

static int constexpr x[255]={

// fill everything with invalid, e.g. -1 except places\

// 48-57 and 97-102 where you place 0..15

};

return x[n];

}

Among all , the last seems to be the fastest at first look. The second is only at compile time and constant expression.

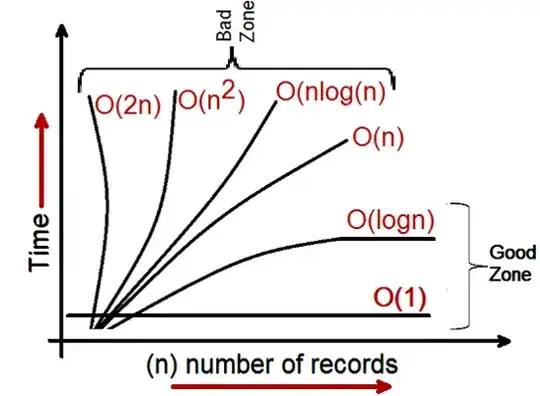

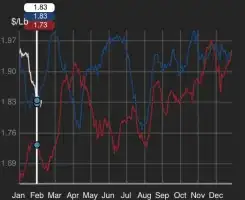

[RESULT] (Please verify): *BEV is the fastest among all and handles lower and upper case letter , but only marginal to CEV which does not handle capital letters. LUT becomes slower than both CEV and BEV as the size of the string increases.

An exemplary result for str-sizes 16-12384 can be found below ( lower is better )

The average time (100 runs ) along is show. The size of the bubble is the normal error.

The script for running the tests is available.

Tests have been performed for the conditional CEV, the bitwise BEV and the lookup table LUT on a set of randomly generated strings. The tests are fairly simple and from:

Test source code

these are verifiable:

- A local copy of the input string is placed in a local buffer every time.

- A local copy of the results is kept that is then copied to the heap for every string test

- Duration only for the time operating on the string is extracted

- uniform approach, there is no complicated machinery and wrap/around code that is fitted for other cases.

- no sampling the entire timing sequence is used

- CPU preheating is executed

- Sleep between tests occur to permit marshal the code such that one test does not take advantage of the previous test.

- Compilation is performed with

g++ -std=c++11 -O3 -march=native dectohex.cpp -o d2h

- Launch with

taskset -c 0 d2h

- No thread dependencies or multithreading

- The results are actually being used, to avoid any type of loop optimization

As a side note I have seen in practice version 3 to be much faster with older c++98 compilers.

[BOTTOM LINE]: use CEV without fear, unless you know your variables at compile time where you could use version TCV. The LUT

should only be used after significant performance per use case

evaluation, and probably with older compilers. Another case is when

your set is larger i.e. {0,1,2,3,4,5,6,7,8,9,a,b,c,d,e,f,A,B,C,D,E,F}

. This can be achieved as well. Finally if you are permormance hungry use BEV .

The results with the unordered_map have been removed since they have been just too slow to compare, or at best case may be as fast as the LUT solution.

Results from my personal pc on strings of size 12384/256 and for 100 strings:

g++ -DS=2 -DSTR_SIZE=256 -DSET_SIZE=100 -DUNITS=nanoseconds -O3 -std=c++11 -march=native dectohex.cpp -o d2h && taskset -c 0 ./d2h

sign: -2709

-------------------------------------------------------------------

(CEV) Total: 185568 nanoseconds - mean: 323.98 nanoseconds error: 88.2699 nanoseconds

(BEV) Total: 185568 nanoseconds - mean: 337.68 nanoseconds error: 113.784 nanoseconds

(LUT) Total: 229612 nanoseconds - mean: 667.89 nanoseconds error: 441.824 nanoseconds

-------------------------------------------------------------------

g++ -DS=2 -DSTR_SIZE=12384 -DSET_SIZE=100 -DUNITS=nanoseconds -O3 -std=c++11 -march=native hextodec.cpp -o d2h && taskset -c 0 ./h2d

-------------------------------------------------------------------

(CEV) Total: 5539902 nanoseconds - mean: 6229.1 nanoseconds error: 1052.45 nanoseconds

(BEV) Total: 5539902 nanoseconds - mean: 5911.64 nanoseconds error: 1547.27 nanoseconds

(LUT) Total: 6346209 nanoseconds - mean: 14384.6 nanoseconds error: 1795.71 nanoseconds

-------------------------------------------------------------------

Precision: 1 ns

The results from a system with GCC 4.9.3 compiled to the metal without the system being loaded on strings of size 256/12384 and for 100 strings

g++ -DS=2 -DSTR_SIZE=256 -DSET_SIZE=100 -DUNITS=nanoseconds -O3 -std=c++11 -march=native dectohex.cpp -o d2h && taskset -c 0 ./d2h

sign: -2882

-------------------------------------------------------------------

(CEV) Total: 237449 nanoseconds - mean: 444.17 nanoseconds error: 117.337 nanoseconds

(BEV) Total: 237449 nanoseconds - mean: 413.59 nanoseconds error: 109.973 nanoseconds

(LUT) Total: 262469 nanoseconds - mean: 731.61 nanoseconds error: 11.7507 nanoseconds

-------------------------------------------------------------------

Precision: 1 ns

g++ -DS=2 -DSTR_SIZE=12384 -DSET_SIZE=100 -DUNITS=nanoseconds -O3 -std=c++11 -march=native dectohex.cpp -o d2h && taskset -c 0 ./d2h

sign: -137532

-------------------------------------------------------------------

(CEV) Total: 6834796 nanoseconds - mean: 9138.93 nanoseconds error: 144.134 nanoseconds

(BEV) Total: 6834796 nanoseconds - mean: 8588.37 nanoseconds error: 4479.47 nanoseconds

(LUT) Total: 8395700 nanoseconds - mean: 24171.1 nanoseconds error: 1600.46 nanoseconds

-------------------------------------------------------------------

Precision: 1 ns

[HOW TO READ THE RESULTS]

The mean is shown on microseconds required to compute the string of the given size.

The total time for each test is given. The mean is computed as the sum/total of timings to compute one string ( no other code in that region but could be vectorized, and that's ok) . The error is the standard deviation of the timings.

The mean tell us what we should expect on average , and the error how much the timings have been following normality. In this case this is a fair measure of error only when it is small ( otherwise we should use something appropriate for positive distributions ). One usually should expect high errors in case of cache miss , processor scheduling, and many other factors.

The code has a unique macro defined to run the tests, permits to define compile time variables to set up the tests, and prints complete information such as:

g++ -DS=2 -DSTR_SIZE=64 -DSET_SIZE=1000 -DUNITS=nanoseconds -O3 -std=c++11 -march=native dectohex.cpp -o d2h && taskset -c 0 ./d2h

sign: -6935

-------------------------------------------------------------------

(CEV) Total: 947378 nanoseconds - mean: 300.871 nanoseconds error: 442.644 nanoseconds

(BEV) Total: 947378 nanoseconds - mean: 277.866 nanoseconds error: 43.7235 nanoseconds

(LUT) Total: 1040307 nanoseconds - mean: 375.877 nanoseconds error: 14.5706 nanoseconds

-------------------------------------------------------------------

For example to run the test with a 2sec pause on a str of size 256 for a total of 10000 different strings, output timings in double precision, and count in nanoseconds the following command compiles and runs the test.

g++ -DS=2 -DSTR_SIZE=256 -DSET_SIZE=10000 -DUTYPE=double -DUNITS=nanoseconds -O3 -std=c++11 -march=native dectohex.cpp -o d2h && taskset -c 0 ./d2h