I am reading a MongoDB collection using newAPIHadoopRDD in Java.

First I create a JavaSparkContext object using the following class:

public class SparkLauncher {

public JavaSparkContext javaSparkContext ;

public SparkLauncher()

{

javaSparkContext = null;

}

public JavaSparkContext getSparkContext() {

if (javaSparkContext == null ) {

System.out.println("SPARK INIT...");

try {

System.setProperty("spark.executor.memory", "2g");

Runtime runtime = Runtime.getRuntime();

runtime.gc();

int numOfCores = runtime.availableProcessors();

numOfCores=3;

SparkConf conf = new SparkConf();

conf.setMaster("local[" + numOfCores + "]");

conf.setAppName("WL");

conf.set("spark.serializer",

"org.apache.spark.serializer.KryoSerializer");

javaSparkContext = new JavaSparkContext(conf);

} catch (Exception ex) {

ex.printStackTrace();

}

}

return javaSparkContext;

}

public void closeSparkContext(){

javaSparkContext.stop();

javaSparkContext.close();

javaSparkContext= null;

}

}

Then, in other class I read the mongodb collection:

SparkLauncher sc = new SparkLauncher();

JavaSparkContext javaSparkContext = sc.getSparkContext();

try {

interactions = javaSparkContext.newAPIHadoopRDD(mongodbConfig,

MongoInputFormat.class, Object.class, BSONObject.class);

}

catch (Exception e) {

System.out.print(e.getMessage());

}

This code creates a lot of threads reading the collection's splits. After I close the JavaSparkContext object :

javaSparkContext.close();

sc.closeSparkContext();

System.gc();

All threads are still alive and the memory is not released. It causes kind of memory leak and thread leak. Is this because of newAPIHadoopRDD method? Is there any way to get rid of these threads ?

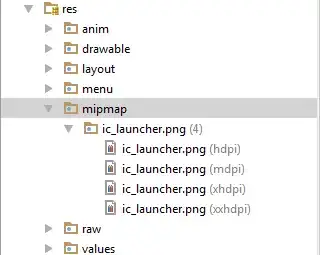

Here is a snapshot of part of threads still are alive: