In addition to Chris' generic answer, some background on iText(Sharp) content parsing...

iText(Sharp) provides a framework for content extraction in the namespace iTextSharp.text.pdf.parser / package com.itextpdf.text.pdf.parser. This framework reads the page content, keeps track of the current graphics state, and forwards information on pieces of content to the IExtRenderListener or IRenderListener / ExtRenderListener or RenderListener the user (i.e. you) provides. In particular, it does not read any structure into this information.

This render listener may be a text extraction strategy (ITextExtractionStrategy / TextExtractionStrategy), i.e. a special render listener which is predominantly designed to extract a pure text stream without formatting or layout information. And for this special case iText(Sharp) additionally provides two sample implementations, the SimpleTextExtractionStrategy and the LocationTextExtractionStrategy.

For your task you need a more sophisticated render listener which either

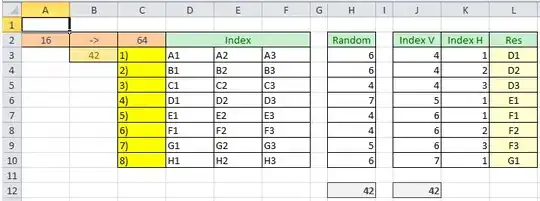

- exports the text with coordinates (Chris in one of his answers has provided an extended LocationTextExtractionStrategy which can additionally provide positions and bounding boxes of text chunks), allowing you to analyse tabular structures in additional code; or

- does the analysis of tabular data itself.

I do not have an example for the latter variant because generically recognizing and parsing tables is a whole project in itself. You might want to look into the Tabula project for inspiration; this project is surprisingly good at the task of table extraction.

PS: If you feel more at home with trying to extract structured content from a pure string representation of the content which nonetheless tries to reflect the original layout, you might try something like what is proposed in this answer, a variant of the LocationTextExtractionStrategy working similarly to the pdftotext -layout tool; only the changes to be applied to the LocationTextExtractionStrategy are shown there.

PPS: Extraction of data from very specific PDF tables may be much easier; for example have a look at this answer which demonstrates that after some PDF analysis the specific way a given table is created might give rise to a simple custom render listener for extracting the table data. This can make sense for a single PDF with a table spanning many many pages like in the case of that answer, or it can make sense if you have many PDFs identically created by the same software.

This is why I asked for a representative sample file in a comment on your question.

Concerning your comments

> Still with the pdf example above, both with an implementation from scratch of ITextExtractionStrategy and with extending LocationExtractionStrategy, I see that each RenderText is called at the following chunks: Fi, el, d, A, Fi, el, d... and so on. Can this be changed?

The chunks of text you get as separate RenderText calls are not separated by accident or some random decision of iText. They are the very strings drawn separately in the page content!

In your sample "Fi", "el", "d", and "A" come in different RenderText calls because the content stream contains operations in which first "Fi" is drawn, then "el", then "d", then "A".

This may sound weird at first. A common cause for such torn up words is that PDF does not use the kerning information from fonts; to apply kerning, therefore, the PDF generating software has to insert tiny forward or backward jumps between characters which should be farther from or nearer to each other than without kerning. Thus, words often are torn apart between kerning pairs.

So this cannot be changed: you will get those pieces, and it is the job of the text extraction strategy to put them together.
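To illustrate what "putting them together" can mean, here is a minimal sketch in plain Java (iText exists for Java too; the idea carries over to C# unchanged). It does not use the iText API at all: `Chunk` is a hypothetical stand-in for what you would collect in each RenderText call (for example the text plus the x coordinates of the baseline start and end), and both `joinIntoWords` and the `maxGap` threshold are my own assumptions, not something the library prescribes.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class ChunkJoiner {
    /** Hypothetical stand-in for one RenderText call: text plus baseline x range. */
    static final class Chunk {
        final String text;
        final double startX;
        final double endX;

        Chunk(String text, double startX, double endX) {
            this.text = text;
            this.startX = startX;
            this.endX = endX;
        }
    }

    /**
     * Joins chunks of a single line (already sorted left to right) into words.
     * A horizontal gap wider than maxGap starts a new word; smaller gaps,
     * including the tiny negative ones caused by kerning, are ignored.
     */
    static List<String> joinIntoWords(List<Chunk> line, double maxGap) {
        List<String> words = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        Chunk previous = null;
        for (Chunk chunk : line) {
            if (previous != null && chunk.startX - previous.endX > maxGap) {
                words.add(current.toString());
                current.setLength(0);
            }
            current.append(chunk.text);
            previous = chunk;
        }
        if (current.length() > 0) {
            words.add(current.toString());
        }
        return words;
    }

    public static void main(String[] args) {
        List<Chunk> line = Arrays.asList(
                new Chunk("Fi", 50.0, 60.0),
                new Chunk("el", 59.8, 68.0),  // small backward jump (kerning)
                new Chunk("d", 68.1, 73.0),   // small forward jump (kerning)
                new Chunk("A", 76.0, 82.0));  // gap wide enough to be a space
        System.out.println(joinIntoWords(line, 1.0)); // prints [Field, A]
    }
}
```

A fixed threshold is the crudest possible choice; a real strategy would probably derive it from the font size or the width of a space character in the current font.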

By the way, there are worse PDFs: some PDF generators position each and every glyph separately, most notably generators which predominantly build GUIs but can, as a feature, automatically export GUI canvases as PDFs.

> I would expect that in entering the realm of "adding my own implementation" I would have control over how to determine what is a "chunk" of text.

You can... well, you have to decide which of the incoming pieces belong together and which don't. E.g. do glyphs with the same y coordinate form a single line? Or do they form separate lines in different columns which just happen to be located next to each other?

So yes, you decide which glyphs you interpret as a single word or as content of a single table cell, but your input consists of the groups of glyphs used in the actual PDF content stream.
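As a rough sketch of such a decision, the following plain Java code groups pieces into rows by their baseline y coordinate and then splits each row into cells wherever the horizontal gap is large. Again, nothing here is iText API: `Chunk`, `toRows`, `toCells` and the three thresholds are hypothetical names and values I chose for illustration, and "a wide gap means a new column" is only one possible heuristic.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class RowGrouper {
    /** Hypothetical stand-in for one RenderText call: text, baseline x range and y. */
    static final class Chunk {
        final String text;
        final double startX, endX, y;

        Chunk(String text, double startX, double endX, double y) {
            this.text = text;
            this.startX = startX;
            this.endX = endX;
            this.y = y;
        }
    }

    /** Groups chunks into rows (top to bottom), then splits each row into cells. */
    static List<List<String>> toRows(List<Chunk> chunks, double yTolerance,
                                     double wordGap, double cellGap) {
        List<Chunk> sorted = new ArrayList<>(chunks);
        sorted.sort((a, b) -> Double.compare(b.y, a.y)); // PDF y grows upwards
        List<List<Chunk>> rows = new ArrayList<>();
        for (Chunk c : sorted) {
            List<Chunk> last = rows.isEmpty() ? null : rows.get(rows.size() - 1);
            // Start a new row when y differs too much from the row's first chunk.
            if (last == null || Math.abs(last.get(0).y - c.y) > yTolerance) {
                last = new ArrayList<>();
                rows.add(last);
            }
            last.add(c);
        }
        List<List<String>> result = new ArrayList<>();
        for (List<Chunk> row : rows) {
            result.add(toCells(row, wordGap, cellGap));
        }
        return result;
    }

    /** Splits one non-empty row into cells; medium gaps become spaces inside a cell. */
    static List<String> toCells(List<Chunk> row, double wordGap, double cellGap) {
        row.sort((a, b) -> Double.compare(a.startX, b.startX));
        List<String> cells = new ArrayList<>();
        StringBuilder cell = new StringBuilder();
        Chunk prev = null;
        for (Chunk c : row) {
            if (prev != null) {
                double gap = c.startX - prev.endX;
                if (gap > cellGap) {          // wide gap: next column
                    cells.add(cell.toString());
                    cell.setLength(0);
                } else if (gap > wordGap) {   // medium gap: word space
                    cell.append(' ');
                }
            }
            cell.append(c.text);
            prev = c;
        }
        cells.add(cell.toString());
        return cells;
    }

    public static void main(String[] args) {
        List<Chunk> chunks = Arrays.asList(
                new Chunk("Value", 200.0, 230.0, 700.0),
                new Chunk("Na", 50.0, 62.0, 700.0),
                new Chunk("me", 61.8, 75.0, 700.0),
                new Chunk("Fi", 50.0, 60.0, 685.9),
                new Chunk("el", 59.8, 68.0, 686.1),
                new Chunk("d", 68.1, 73.0, 686.0),
                new Chunk("A", 76.0, 82.0, 686.0),
                new Chunk("42", 200.0, 212.0, 686.0));
        // prints [[Name, Value], [Field A, 42]]
        System.out.println(toRows(chunks, 2.0, 1.0, 20.0));
    }
}
```

Fixed gap thresholds like these will break on tables with narrow gutters or justified text; using the positions of ruling lines (see the vector graphics methods mentioned below) or column positions learned from several rows would likely be more robust.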

> Not only that, in none of the interface's methods I can "spot" how/where it deals with non-text data/images - so I could intercede with the spacing issue (RenderImage is not called)

RenderImage will be called for embedded bitmap images, JPEGs etc. If you want to be informed about vector graphics, your strategy will also have to implement IExtRenderListener which provides methods ModifyPath, RenderPath and ClipPath.

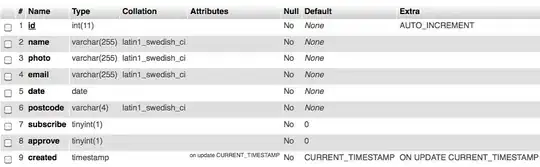

This is what the PDF data looks like.
