I'm trying to learn OCR processing. I have decided to create a .NET project that uses the Emgu CV wrapper to access the OpenCV library.

I have written a small piece of code (most of the lines come from here):

```csharp
using System.Drawing;
using Emgu.CV;
using Emgu.CV.CvEnum;
using Emgu.CV.Structure;

public static Image<Bgr, byte> FindImage(string Imgtemplate, string Imgsource, float coeff)
{
    // Dispose the loaded images when done; only the annotated copy is returned.
    using (Image<Bgr, byte> source = new Image<Bgr, byte>(Imgsource))     // Image B
    using (Image<Bgr, byte> template = new Image<Bgr, byte>(Imgtemplate)) // Image A
    {
        Image<Bgr, byte> imageToShow = source.Copy();

        // Each pixel of result scores the template placed with its top-left corner there.
        // With CcoeffNormed the scores lie in [-1, 1].
        using (Image<Gray, float> result = source.MatchTemplate(template, TemplateMatchingType.CcoeffNormed))
        {
            double[] minValues, maxValues;
            //double minValues = 0, maxValues = 0;
            Point[] minLocations, maxLocations;
            //Point minLocations = new Point(), maxLocations = new Point();
            //CvInvoke.Normalize(result, result, 0, 1, Emgu.CV.CvEnum.NormType.MinMax, Emgu.CV.CvEnum.DepthType.Default, new Mat());
            //CvInvoke.MinMaxLoc(result, ref minValues, ref maxValues, ref minLocations, ref maxLocations, new Mat());

            // MinMax returns one entry per channel; result has a single channel, so index 0 is used.
            result.MinMax(out minValues, out maxValues, out minLocations, out maxLocations);

            // You can try different values of the threshold. I guess somewhere between 0.75 and 0.95 would be good.
            if (maxValues[0] > coeff)
            {
                // This is a match. Do something with it, for example draw a rectangle around it.
                Rectangle match = new Rectangle(maxLocations[0], template.Size);
                //Rectangle match = new Rectangle(maxLocations, template.Size);
                imageToShow.Draw(match, new Bgr(Color.Green), 1);
            }
        }

        // Show imageToShow in an ImageBox (here assumed to be called imageBox1)
        //imageBox1.Image = imageToShow;
        return imageToShow;
    }
}
```
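For reference, `TemplateMatchingType.CcoeffNormed` corresponds to OpenCV's `TM_CCOEFF_NORMED` mode. According to the OpenCV `matchTemplate` documentation, for a template $T$ of size $w \times h$ and a source image $I$, each entry of `result` is computed as follows (sums run over $0 \le x' < w$, $0 \le y' < h$):

$$
R(x,y) = \frac{\sum_{x',y'} T'(x',y')\, I'(x+x',y+y')}{\sqrt{\sum_{x',y'} T'(x',y')^2 \cdot \sum_{x',y'} I'(x+x',y+y')^2}}
$$

$$
T'(x',y') = T(x',y') - \frac{1}{wh}\sum_{x'',y''} T(x'',y''), \qquad
I'(x+x',y+y') = I(x+x',y+y') - \frac{1}{wh}\sum_{x'',y''} I(x+x'',y+y'')
$$

For color images, the documentation states that the sums are taken over all channels, with a separate mean subtracted per channel. By the Cauchy–Schwarz inequality $R(x,y) \in [-1, 1]$, so `maxValues[0]` is a correlation coefficient rather than a percentage: a "100%" match means $R = 1$ at `maxLocations[0]`.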

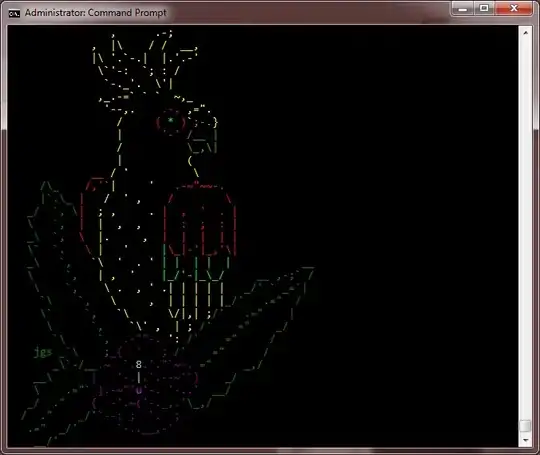

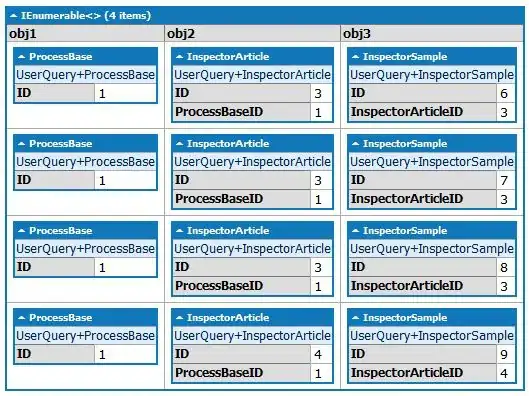

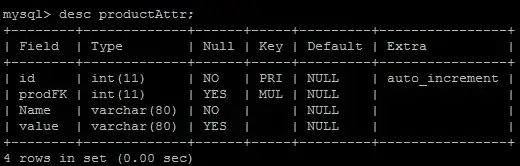

The source image is :

The template image is :

Both images are jpg. The result (with 100 % match) of the method is :

As you can see, the result isn't the right one :/. However, when I run my method on the example given on the OpenCV site, I get a good result (a 67% match):

Does anyone have an idea what my problem is? For the OpenCV example, is there any way to improve the match percentage? Which option/parameter works best?