The aws-s3 documentation says:

```ruby
# Copying an object
S3Object.copy 'headshot.jpg', 'headshot2.jpg', 'photos'
```

But how do I copy headshot.jpg from the photos bucket to, for example, the archive bucket?

Thanks!

Deb

Use the aws-sdk gem's `S3Object#copy_to`:

> Copies data from the current object to another object in S3. S3 handles the copy so the client does not need to fetch the data and upload it again. You can also change the storage class and metadata of the object when copying.

Internally it uses the `copy_object` operation, which copies objects within or between your S3 buckets and can optionally replace the object's metadata in the process.

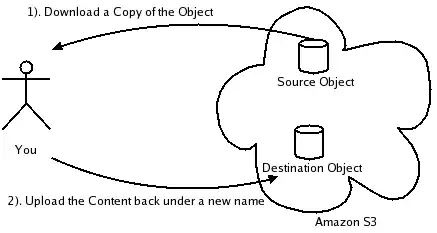

Standard method (download/upload)

Copy method

Code sample (aws-sdk v1 API):

```ruby
require 'aws-sdk' # aws-sdk v1 (AWS namespace)

AWS.config(
  :access_key_id     => '***',
  :secret_access_key => '***',
  :max_retries       => 10
)

file = 'test_file.rb'

bucket_0 = { :name => 'bucket_from', :endpoint => 's3-eu-west-1.amazonaws.com' }
bucket_1 = { :name => 'bucket_to',   :endpoint => 's3.amazonaws.com' }

# Upload a local file to the source bucket (only needed to set up the demo);
# the block form of File.open closes the file afterwards
s3_interface_from = AWS::S3.new(:s3_endpoint => bucket_0[:endpoint])
bucket_from = s3_interface_from.buckets[bucket_0[:name]]
File.open(file, 'rb') { |f| bucket_from.objects[file].write(f) }

# Server-side copy into the destination bucket; the data never passes through the client
s3_interface_to = AWS::S3.new(:s3_endpoint => bucket_1[:endpoint])
bucket_to = s3_interface_to.buckets[bucket_1[:name]]
bucket_to.objects[file].copy_from(file, :bucket => bucket_from)
```

Using the right_aws gem:

```ruby
# With s3 being an S3 object acquired via S3Interface.new
# Copies key1 from bucket b1 to key1_copy in bucket b2:
s3.copy('b1', 'key1', 'b2', 'key1_copy')
```

The gotcha I ran into is that if you have `pics/1234/yourfile.jpg`, the bucket is only `pics` and the key is `1234/yourfile.jpg`.
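A minimal sketch of that split, assuming paths of the form `bucket/key` where only the first segment names the bucket. `split_s3_path` is a hypothetical helper for illustration, not part of aws-s3, right_aws or aws-sdk:

```ruby
# Hypothetical helper: split a "bucket/key" style path at the FIRST slash.
# Everything before it is the bucket; the rest (which may itself contain
# slashes) is the object key.
def split_s3_path(path)
  bucket, key = path.sub(%r{\A/+}, '').split('/', 2) # ignore leading slashes
  raise ArgumentError, "no key in #{path.inspect}" if key.nil? || key.empty?
  [bucket, key]
end

bucket, key = split_s3_path('pics/1234/yourfile.jpg')
puts bucket # prints pics
puts key    # prints 1234/yourfile.jpg
```

The resulting pair is what the copy calls above expect as separate bucket and key arguments.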

I got the answer from here: How do I copy files between buckets using s3 from a rails application?

For anyone still looking, AWS has documentation for this. It's actually very simple with the aws-sdk gem:

```ruby
require 'aws-sdk-s3' # aws-sdk v3; with v2 use require 'aws-sdk'

bucket = Aws::S3::Bucket.new('source-bucket')
object = bucket.object('source-key')
object.copy_to(bucket: 'target-bucket', key: 'target-key')
```

When using the AWS SDK gem's `copy_from` or `copy_to`, three things are not copied by default: the ACL, the storage class, and server-side encryption. You need to specify them as options:

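```ruby
# aws-sdk v1: :bucket_name takes a bucket name string (:bucket expects an AWS::S3::Bucket object)
from_object.copy_to(from_object.key, :bucket_name => 'new-bucket-name', :acl => :public_read)
```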

https://github.com/aws/aws-sdk-ruby/blob/master/lib/aws/s3/s3_object.rb#L904
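With the newer `Aws::S3::Client#copy_object` (aws-sdk v2/v3), the corresponding request parameters are `acl`, `storage_class` and `server_side_encryption`. The sketch below is a hypothetical helper (`explicit_copy_settings` is not an SDK method) that collects only the settings you actually want applied to the copy, so unset ones are simply left out of the request:

```ruby
# Hypothetical helper: gather the settings a copy does NOT inherit from the
# source object so they can be passed explicitly to copy_object.
# Key names follow Aws::S3::Client#copy_object in aws-sdk v2/v3.
def explicit_copy_settings(acl: nil, storage_class: nil, server_side_encryption: nil)
  {
    acl: acl,                                      # e.g. 'public-read'
    storage_class: storage_class,                  # e.g. 'STANDARD_IA'
    server_side_encryption: server_side_encryption # e.g. 'AES256'
  }.compact # drop the options that were not given
end
```

For example, `s3.copy_object(bucket: 'new-bucket-name', key: 'photo.jpg', copy_source: 'old-bucket/photo.jpg', **explicit_copy_settings(acl: 'public-read'))`, where the client, bucket and key names are placeholders.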

Here's a simple ruby class to copy all objects from one bucket to another bucket: https://gist.github.com/edwardsharp/d501af263728eceb361ebba80d7fe324

Multiple images can easily be copied using the aws-sdk gem as follows:

```ruby
require 'aws-sdk' # aws-sdk v2; with v3 use require 'aws-sdk-s3'
require 'erb'

image_names = ['one.jpg', 'two.jpg', 'three.jpg', 'four.jpg', 'five.png', 'six.jpg']

Aws.config.update({
  region: 'destination_region',
  credentials: Aws::Credentials.new('AWS_ACCESS_KEY_ID', 'AWS_SECRET_ACCESS_KEY')
})

# One client is enough for all copies
s3 = Aws::S3::Client.new

image_names.each do |img|
  # copy_source is "source_bucket/key"; URL-encode each key segment but keep the slashes
  source_key = "path/to/#{img}".split('/').map { |part| ERB::Util.url_encode(part) }.join('/')
  s3.copy_object(
    bucket: 'destination_bucket_name',
    copy_source: "source_bucket_name/#{source_key}",
    key: "path/where/to/save/#{img}"
  )
end
```
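The `copy_source` value has to be URL-encoded, while the `/` separators between the bucket and the key segments stay literal. Here is the encoding step on its own as a runnable sketch; the `copy_source` helper name is hypothetical and it uses only Ruby's standard `erb` library:

```ruby
require 'erb'

# Build a "bucket/key" copy source: each key segment is percent-encoded
# (a space becomes %20, not "+"), and the "/" separators are kept as-is.
# Bucket names are assumed to be DNS-safe and are not encoded.
def copy_source(bucket, key)
  encoded_key = key.split('/', -1).map { |segment| ERB::Util.url_encode(segment) }.join('/')
  "#{bucket}/#{encoded_key}"
end

puts copy_source('source_bucket_name', 'path/to/my photo.jpg')
# prints source_bucket_name/path/to/my%20photo.jpg
```

Encoding per segment avoids turning the path separators into `%2F` and spaces into `+`, which is what `URI.encode_www_form_component` would do to the whole string.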

If you have many images, consider moving the copying process into a background job.

I ran into the same issue that you had, so I cloned the source code for AWS-S3 and made a branch that has a copy_to method that allows for copying between buckets, which I've been bundling into my projects and using when I need that functionality. Hopefully someone else will find this useful as well.

I believe that in order to copy between buckets you must read the file's contents from the source bucket and then write it back to the destination bucket via your application's memory space. There's a snippet showing this using aws-s3 here and another approach using right_aws here

The aws-s3 gem cannot copy files between buckets without moving them through your local machine. If that's acceptable to you, the following will work:

```ruby
require 'open-uri' # URI.open streams the source file over HTTP

AWS::S3::S3Object.store 'dest-key', URI.open('http://url/to/source.file'), 'dest-bucket'
```