Background:

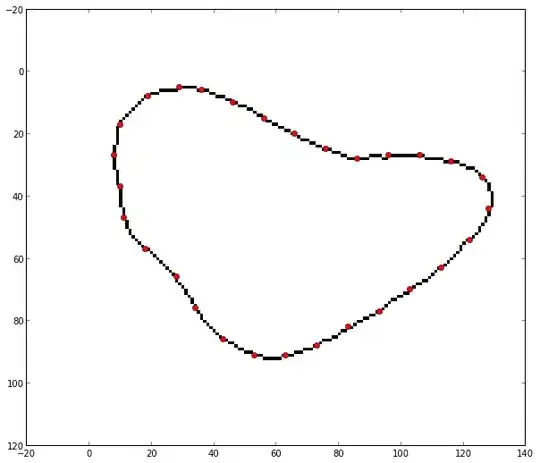

Assuming there are two shots for the same scene from two different perspective. Applying a registration algorithm on them will result in Homography Matrix that represents the relation between them. By warping one of them using this Homography Matrix will (theoretically) result in two identical images (if the non-shared area is ignored).

Since no perfection is exist, the two images may not be absolutely identical, we may find some differences between them and this differences can be shown obviously while subtracting them.

Example:

Furthermore, the lighting condition may results in huge difference while subtracting.

Problem:

I am looking for a metric that I can evaluate the accuracy of the registration process. This metric should be:

Normalized: 0->1 measurement which does not relate to the image type (natural scene, text, human...). For example, if two totally different registration process on totally different pair of photos have the same confidence, let us say 0.5, this means that the same good (or bad) registeration happened. This should applied even one of the pair is for very details-reach photos and the other of white background with "Hello" in black written.

Distinguishing between miss-registration accuracy and different lighting conditions: Although there is many way to eliminate this difference and make the two images look approximately the same, I am looking of measurement that does not count them rather than fixing them (performance issue).

One of the first thing that came in mind is to sum the absolute differences of the two images. However, this will result in a number that represent the error. This number has no meaning when you want to compare it to another registration process because another images with better registration but more details may give a bigger error rather than a smaller one.

Sorry for the long post. I am glad to provide any further information and collaborating in finding the solution.

P.S. Using OpenCV is acceptable and preferable.