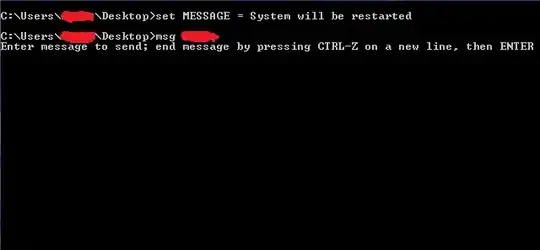

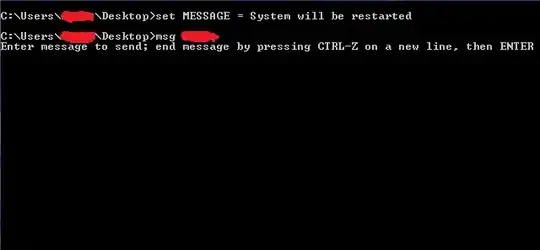

I am trying to install spark in standalone mode in Windows and when I'm trying to run the bin/spark-shell command, it gives me the following error:

Did you download the pre-built binaries for Linux and try to run them on Windows? Could you provide more detail on your setup? Also, please post the error as text so it's searchable: if this question sticks around, you want people Googling the error to be able to find it.

To run Spark on Windows, you have to build it. There's a similar SO question (perhaps a duplicate? I can't mark it as such): How to set up Spark on Windows?

More to the point, here are the Spark docs on how to build Spark for Windows. Here's the relevant text from the Overview page:

> If you’d like to build Spark from source, visit Building Spark.
>
> Spark runs on both Windows and UNIX-like systems (e.g. Linux, Mac OS). It’s easy to run locally on one machine — all you need is to have java installed on your system PATH, or the JAVA_HOME environment variable pointing to a Java installation.
>
> Spark runs on Java 7+, Python 2.6+ and R 3.1+. For the Scala API, Spark 1.6.0 uses Scala 2.10. You will need to use a compatible Scala version (2.10.x).
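
Before digging further, it may be worth confirming the prerequisite quoted above: a `java` that Spark can find via `JAVA_HOME` or `PATH`, at version 7 or later. The script below is a minimal sketch under my own assumptions (Python 3.7+ is available; the helper names `find_java`, `java_major_version` and `check_java` are hypothetical, not part of Spark). It only checks Java and will not tell you whether you grabbed Linux-only binaries.

```python
import os
import re
import shutil
import subprocess


def find_java(env=None):
    """Locate a java executable, preferring JAVA_HOME over PATH.

    Checks JAVA_HOME/bin/java first; otherwise searches env["PATH"]
    (or the process PATH if env has none). Returns None if not found.
    """
    env = os.environ if env is None else env
    exe = "java.exe" if os.name == "nt" else "java"
    java_home = env.get("JAVA_HOME")
    if java_home:
        candidate = os.path.join(java_home, "bin", exe)
        if os.path.isfile(candidate):
            return candidate
    return shutil.which("java", path=env.get("PATH"))


def java_major_version(version_text):
    """Extract the major Java version from `java -version` output.

    Handles the old "1.7.0_80" scheme (major = 7) and the newer
    "11.0.2" / "17" scheme (major = 11 / 17). Returns None if absent.
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_text)
    if not match:
        return None
    first, second = int(match.group(1)), match.group(2)
    if first == 1 and second is not None:
        return int(second)
    return first


def check_java(min_major=7):
    """Return (ok, message) for the Java prerequisite (default: Java 7+)."""
    java = find_java()
    if java is None:
        return False, "java not found: set JAVA_HOME or add java to PATH"
    # `java -version` prints its banner on stderr rather than stdout.
    result = subprocess.run([java, "-version"], capture_output=True, text=True)
    major = java_major_version(result.stderr)
    if major is None or major < min_major:
        return False, f"{java}: need Java {min_major}+, found {major}"
    return True, f"{java}: Java {major} looks OK"


if __name__ == "__main__":
    ok, message = check_java()
    print(message)
```

`JAVA_HOME` is checked first because, per the quoted docs, it is an alternative to having `java` on the PATH; if it points somewhere without `bin/java`, the sketch falls back to the PATH search.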

If you are not prepared to build Spark from source, then perhaps you want to use VirtualBox or VMware and run a Linux VM, but that would probably only be good for testing in `local[*]` mode. As the docs put it, close to that link: "However, for local testing and unit tests, you can pass “local” to run Spark in-process."
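
For reference, `local` and `local[*]` are master URLs: `local` runs one worker thread in-process, `local[K]` runs K threads, and `local[*]` runs one thread per logical core. The helper below is a hypothetical sketch that just spells out those three documented meanings; it is not Spark's own parser, and it deliberately ignores any other master forms Spark accepts.

```python
import os
import re


def local_worker_threads(master):
    """Return how many worker threads a local-mode master URL asks for.

    "local"    -> 1 worker thread
    "local[K]" -> K worker threads
    "local[*]" -> one thread per logical core on this machine
    Anything else (e.g. a standalone "spark://..." URL) -> None.
    """
    if master == "local":
        return 1
    match = re.fullmatch(r"local\[(\*|\d+)\]", master)
    if not match:
        return None
    count = match.group(1)
    if count == "*":
        # os.cpu_count() may return None if the core count is unknown.
        return os.cpu_count() or 1
    return int(count)


if __name__ == "__main__":
    # "host" below is a placeholder, not a real machine.
    for master in ["local", "local[4]", "local[*]", "spark://host:7077"]:
        print(master, "->", local_worker_threads(master))
```

With a working Spark install, the same string is what you would pass to the shell, e.g. `spark-shell --master local[*]`.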

You might get away with running a master/driver and a worker/executor in a VM, but I would not expect that setup to play well on a real network. Without trying it, it's hard for me to predict exactly what would go wrong, but I suspect serialization would be an issue, for starters.

Better yet, get a cheap PC, install Linux, and go from there.