I am concatenating data to a numpy array like this:

xdata_test = np.concatenate((xdata_test, additional_X))

This is done a thousand times. The arrays have dtype float32, and their sizes are shown below:

xdata_test.shape: (x1, 40, 24, 24), with x1 ranging from 500 to 10500

additional_X.shape: (x2, 40, 24, 24), with x2 ranging from 0 to 500
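For anyone who wants to reproduce the pattern, here is a minimal sketch of the loop with per-call timing. The function name `time_concatenations`, the random data, and the scaled-down default shapes are assumptions for illustration; my real data uses a trailing shape of (40, 24, 24) and about a thousand iterations.

```python
import time

import numpy as np


def time_concatenations(n_steps=20, chunk=50, tail_shape=(4, 8, 8), seed=0):
    """Grow an array by repeated np.concatenate and time each call.

    Hypothetical helper: the defaults are deliberately small so it runs
    quickly. Pass tail_shape=(40, 24, 24) to match the shapes above.
    """
    rng = np.random.default_rng(seed)
    # Starting array, float32 like the real data.
    xdata_test = rng.random((chunk,) + tail_shape, dtype=np.float32)
    timings = []
    for _ in range(n_steps):
        additional_X = rng.random((chunk,) + tail_shape, dtype=np.float32)
        start = time.perf_counter()
        xdata_test = np.concatenate((xdata_test, additional_X))
        elapsed = time.perf_counter() - start
        # Record the row count after the call and how long the call took.
        timings.append((xdata_test.shape[0], elapsed))
    return xdata_test, timings
```

Calling `time_concatenations(tail_shape=(40, 24, 24))` and plotting the second column of `timings` against the first should show how the per-call cost changes as the array grows.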

The problem is that once x1 exceeds roughly 2000 to 3000, each concatenation takes much longer.

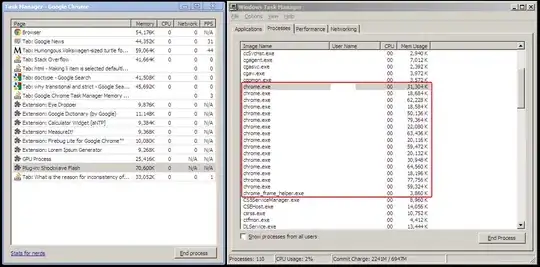

The graph below plots the concatenation time versus the size of the x2 dimension:

Is this a memory issue or a basic characteristic of numpy?
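To put numbers on the memory side, here is a quick calculation of how large xdata_test is at the x1 values mentioned above. The helper name `array_nbytes` is made up for this post. Since `np.concatenate` returns a new array rather than growing the existing one, I assume each call has to allocate and copy at least this many bytes.

```python
import numpy as np


def array_nbytes(x1, tail_shape=(40, 24, 24), dtype=np.float32):
    """Bytes needed for an array of shape (x1, *tail_shape) with this dtype."""
    n_elements = x1 * int(np.prod(tail_shape))
    return n_elements * np.dtype(dtype).itemsize


for x1 in (500, 2000, 3000, 10500):
    size = array_nbytes(x1)
    print(f"x1={x1}: {size} bytes ({size / 2**20:.1f} MiB)")
```

By this arithmetic each row takes 92,160 bytes, so the array is about 276 MB at x1 = 3000 and about 968 MB at x1 = 10500.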