Hello, I am having trouble scraping data from a website for modeling purposes (fantsylabs dotcom). I'm just a hack, so forgive my ignorance of comp sci lingo. What I'm trying to accomplish is:

Use Selenium to log in to the website and navigate to the page with the data.

    import time

    from bs4 import BeautifulSoup
    from selenium import webdriver

    ## Initialize and load the web page
    url = "website url"
    driver = webdriver.Firefox()
    driver.get(url)
    time.sleep(3)

    ## Fill out forms and login to site
    username = driver.find_element_by_name('input')
    password = driver.find_element_by_name('password')
    username.send_keys('username')
    password.send_keys('password')
    login_attempt = driver.find_element_by_class_name("pull-right")
    login_attempt.click()

    ## Find and open the page with the data that I wish to scrape
    link = driver.find_element_by_partial_link_text('Player Models')
    link.click()
    time.sleep(10)

    ## UPDATED CODE TO TRY AND SCROLL DOWN TO LOAD ALL THE DYNAMIC DATA
    scroll = driver.find_element_by_class_name("ag-body-viewport")
    driver.execute_script("arguments[0].scrollIntoView();", scroll)

    ## Try to allow time for the full page to load the lazy way then pass to BeautifulSoup
    time.sleep(10)
    html2 = driver.page_source
    ## page_source is already a str, so from_encoding is not needed
    soup = BeautifulSoup(html2, "lxml")
    div = soup.find_all('div', {'class': 'ag-pinned-cols-container'})
    ## continue to scrape what I want
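
As an aside on the fixed `time.sleep` calls: a rough sketch of polling for a condition instead of sleeping a fixed time is shown here. The helper name `wait_until` and its parameters are made up for illustration and are not part of Selenium (Selenium ships its own `WebDriverWait` in `selenium.webdriver.support.ui`, which is probably the better choice in practice). Waiting this way would likely not, on its own, make missing rows appear in the page source.

```python
import time


def wait_until(condition, timeout_s=30.0, poll_s=0.5):
    """Call condition() repeatedly until it returns something truthy.

    Hypothetical helper: returns the truthy result, or raises
    TimeoutError once timeout_s seconds have passed without success.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError("condition not met within %.1f s" % timeout_s)
        time.sleep(poll_s)
```

With the driver from the code above, something like `wait_until(lambda: driver.find_elements_by_class_name("ag-body-viewport"))` could stand in for `time.sleep(10)`, assuming that element only appears once the table has been rendered.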

This process works in that it logs in and navigates to the correct page, and once the page finishes loading dynamically (30 seconds) it passes the page source to BeautifulSoup. I can see 300+ rows in the table that I want to scrape. However, the bs4 scraper only returns about 30 of the 300. From my own research, it seems this could be an issue with the data loading dynamically via JavaScript, so that only what has been pushed to the HTML is parsed by bs4? (Using Python requests.get to parse html code that does not load at once)

It may be hard for anyone offering advice to reproduce my example without creating a profile on the website, but would using PhantomJS to initialize the browser be all that is needed to "grab" all instances and capture all the desired data?

    driver = webdriver.PhantomJS()  ## instead of webdriver.Firefox()

Any thoughts or experiences will be appreciated, as I've never had to deal with dynamic pages or scraping JavaScript, if that is what I am running into.

UPDATED AFTER Alecs' response:

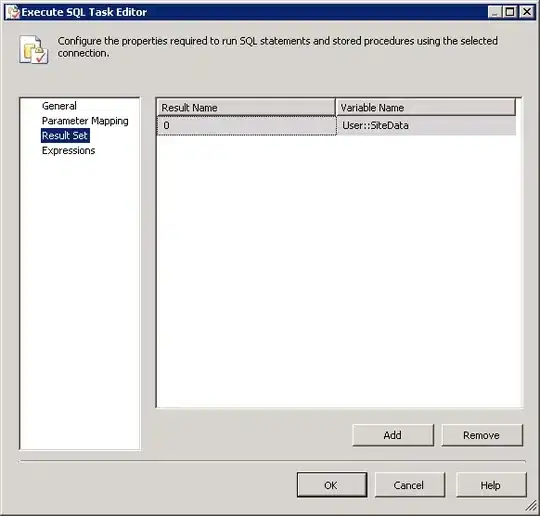

Below is a screen shot of the targeted data (highlighted in blue). You can see the scroll bar in the right of the image and that it is embedded within the page. I have also provided a view of the page source code at this container.

I have modified the original code that I provided to attempt to scroll down to the bottom and fully load the page, but it fails to perform this action. When I set the driver to Firefox(), I can see that the page moves down via the outer scroll bar, but nothing scrolls within the targeted container. I hope this makes sense.
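
To make the scrolling problem concrete, here is a minimal sketch of one approach that might apply. It rests on two assumptions that are not confirmed for this site: that the grid only keeps the currently visible rows in the DOM (which would explain seeing about 30 of 300), and that the `ag-body-viewport` element is the one that actually scrolls. `scrollIntoView()` moves the outer page so the element is visible, whereas setting the element's own `scrollTop` scrolls inside it. The names `collect_virtualized_rows`, `read_rows`, `step_px`, `pause_s` and `max_steps` are hypothetical.

```python
import time


def collect_virtualized_rows(driver, viewport, read_rows,
                             step_px=400, pause_s=0.5, max_steps=500):
    """Scroll inside `viewport` step by step and gather rows along the way.

    driver    -- object with Selenium's execute_script(script, *args)
    viewport  -- the scrollable element (assumed: class "ag-body-viewport")
    read_rows -- hypothetical callable returning (key, row) pairs for the
                 rows currently present in the DOM
    """
    seen = {}  # key -> row; dicts keep first-seen order
    for _ in range(max_steps):
        # Record whatever is rendered right now, skipping duplicates.
        for key, row in read_rows():
            seen.setdefault(key, row)

        before = driver.execute_script(
            "return arguments[0].scrollTop;", viewport)
        # Scroll the inner container itself, not the outer page.
        driver.execute_script(
            "arguments[0].scrollTop = arguments[0].scrollTop + arguments[1];",
            viewport, step_px)
        time.sleep(pause_s)  # give the grid time to render the new rows
        after = driver.execute_script(
            "return arguments[0].scrollTop;", viewport)

        if after == before:  # could not scroll further: bottom reached
            break
    return list(seen.values())
```

Here `read_rows` would parse `driver.page_source` with BeautifulSoup as in the original code and return each row keyed on whatever uniquely identifies it (a player name, for example). The step size should be smaller than the visible height of the container so that no rows are skipped between reads.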

Thanks again for any advice/guidance.