First a note on cost optimization: BigQuery charges per column scanned, and this query will go over 72GBs. The GDELT gkg table stores its whole story in one table - we can optimize costs by creating yearly tables instead of a single one.

Now, how can we fix this query so it runs over a full year? "Resources exceeded during query execution" usually comes from non-scalable functions. For example:

RATIO_TO_REPORT(COUNT) OVER() won't scale: OVER() functions run over the whole result set, allowing us to compute totals and how much of the total each row contributes - but for this to run we need the whole result set to fit in one VM. The good news is that OVER() is able to scale when partitioning data, for example by having a OVER(PARTITION BY month) - then we would only need each partition to fit in a VM. For this query, we will remove this result column instead, for simplicity.

ORDER BY won't scale: To sort results, we need all of the results to fit on one VM too. That's why '--allow-large-results' won't allow running an ORDER BY step, as each VM will process and output results in parallel.

In this query we have an easy way to deal with ORDER BY scalability - we will move the later filter "WHERE COUNT > 50" earlier into the process. Instead of sorting all the results, and filtering the ones that had a COUNT>50, we will move it and change it to a HAVING, so it runs before the ORDER BY:

SELECT Source, Target, count

FROM (

SELECT a.name Source, b.name Target, COUNT(*) AS COUNT

FROM (FLATTEN(

SELECT

GKGRECORDID, CONCAT( STRING(ROUND(FLOAT(IFNULL(REGEXP_EXTRACT(SPLIT(V2Locations,';'),r'^[2-5]#.*?#.*?#.*?#.*?#(.*?)#'), '0')), 3)), '#', STRING(ROUND(FLOAT(IFNULL(REGEXP_EXTRACT(SPLIT(V2Locations,';'),r'^[2-5]#.*?#.*?#.*?#.*?#.*?#(.*?)#'), '0')), 3)) ) AS name

FROM [gdelt-bq:gdeltv2.gkg]

WHERE DATE>20150100000000 and DATE<20151299999999,name)) a

JOIN EACH (

SELECT

GKGRECORDID, CONCAT( STRING(ROUND(FLOAT(IFNULL(REGEXP_EXTRACT(SPLIT(V2Locations,';'),r'^[2-5]#.*?#.*?#.*?#.*?#(.*?)#'), '0')), 3)), '#', STRING(ROUND(FLOAT(IFNULL(REGEXP_EXTRACT(SPLIT(V2Locations,';'),r'^[2-5]#.*?#.*?#.*?#.*?#.*?#(.*?)#'), '0')), 3)) ) AS name

FROM [gdelt-bq:gdeltv2.gkg]

WHERE DATE>20150100000000 and DATE<20151299999999 ) b

ON a.GKGRECORDID=b.GKGRECORDID

WHERE a.name<b.name

AND a.name != '0.000000#0.000000'

AND b.name != '0.000000#0.000000'

GROUP EACH BY 1, 2

HAVING count>50

ORDER BY 3 DESC )

LIMIT 500000

And now the query runs over a full year of data!

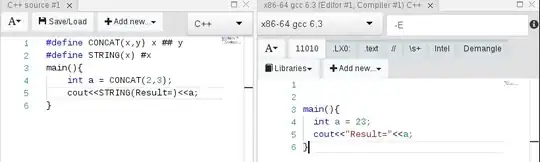

Let's look at the explanation stats:

We can see that the 188 million row table was read twice: The first subquery produced 1.5 billion rows (given the "FLATTEN"), and the second one filtered out the rows not in 2015 (note that this table started storing data in early 2015).

Stage 3 is interesting: Joining both subqueries produced 3 billion rows! Those got reduced to 500 million with the FILTER and AGGREGATE steps:

Can we do better?

Yes! Let's move the 2 WHERE a.name != '....' to an earlier "HAVING":

SELECT Source, Target, count

FROM (

SELECT a.name Source, b.name Target, COUNT(*) AS COUNT

FROM (FLATTEN(

SELECT

GKGRECORDID, CONCAT( STRING(ROUND(FLOAT(IFNULL(REGEXP_EXTRACT(SPLIT(V2Locations,';'),r'^[2-5]#.*?#.*?#.*?#.*?#(.*?)#'), '0')), 3)), '#', STRING(ROUND(FLOAT(IFNULL(REGEXP_EXTRACT(SPLIT(V2Locations,';'),r'^[2-5]#.*?#.*?#.*?#.*?#.*?#(.*?)#'), '0')), 3)) ) AS name

FROM [gdelt-bq:gdeltv2.gkg]

WHERE DATE>20150100000000 and DATE<20151299999999

HAVING name != '0.000000#0.000000',name)) a

JOIN EACH (

SELECT

GKGRECORDID, CONCAT( STRING(ROUND(FLOAT(IFNULL(REGEXP_EXTRACT(SPLIT(V2Locations,';'),r'^[2-5]#.*?#.*?#.*?#.*?#(.*?)#'), '0')), 3)), '#', STRING(ROUND(FLOAT(IFNULL(REGEXP_EXTRACT(SPLIT(V2Locations,';'),r'^[2-5]#.*?#.*?#.*?#.*?#.*?#(.*?)#'), '0')), 3)) ) AS name

FROM [gdelt-bq:gdeltv2.gkg]

WHERE DATE>20150100000000 and DATE<20151299999999

HAVING name != '0.000000#0.000000') b

ON a.GKGRECORDID=b.GKGRECORDID

WHERE a.name<b.name

GROUP EACH BY 1, 2

HAVING count>50

ORDER BY 3 DESC )

LIMIT 500000

This runs even faster!

Let's look at the explanation stats:

See? By moving the filtering to a step before joining, stage 3 only has to go through 1 billion rows, instead of 3 billion rows. Much faster (even for BigQuery, that as you can check by yourself, is capable of going over 3 billion rows generated by a JOIN in a short amount of time).

And what was this query for?

Look at beautiful results here: http://blog.gdeltproject.org/a-city-level-network-diagram-of-2015-in-one-line-of-sql/