I have written the following resizing algorithm, which correctly scales an image up or down. It is far too slow, though, because of the nested iteration over both weight arrays for every destination pixel.

I'm fairly certain I should be able to split the algorithm into two passes, much as you would with a two-pass Gaussian blur, which would vastly reduce the operational complexity and improve performance. Unfortunately, I can't get it to work. Would anyone be able to help?
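To make the two-pass idea concrete, here is the identity it relies on, written in my own notation (none of these symbols appear in the code): $S$ is the source, $T$ the target, $h_x(i)$ the horizontal weight of source column $i$ for target column $x$, and $v_y(j)$ the vertical weight of source row $j$ for target row $y$. Because every combined weight is just the product of one horizontal and one vertical weight, the double sum factors:

$$
T(x, y) = \sum_{j} \sum_{i} v_y(j)\, h_x(i)\, S(i, j)
        = \sum_{j} v_y(j) \Big( \sum_{i} h_x(i)\, S(i, j) \Big)
        = \sum_{j} v_y(j)\, I(x, j),
\qquad
I(x, j) = \sum_{i} h_x(i)\, S(i, j).
$$

Assuming roughly $k_h$ horizontal and $k_v$ vertical weights per target pixel, a target of $W \times H$ pixels and a source height of $H_s$, the single pass costs about $W \cdot H \cdot k_h \cdot k_v$ multiply-adds, whereas computing $I$ first and then $T$ costs about $W \cdot H_s \cdot k_h + W \cdot H \cdot k_v$. The factorisation only holds if the intermediate $I$ is kept in the same linear, unclamped form as the running `destination` sum.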

    Parallel.For(
        startY,
        endY,
        y =>
        {
            if (y >= targetY && y < targetBottom)
            {
                Weight[] verticalValues = this.verticalWeights[y].Values;

                for (int x = startX; x < endX; x++)
                {
                    Weight[] horizontalValues = this.horizontalWeights[x].Values;

                    // Destination color components
                    Color destination = new Color();

                    // This is where there is too much operation complexity.
                    foreach (Weight yw in verticalValues)
                    {
                        int originY = yw.Index;

                        foreach (Weight xw in horizontalValues)
                        {
                            int originX = xw.Index;
                            Color sourceColor = Color.Expand(source[originX, originY]);
                            float weight = yw.Value * xw.Value;
                            destination += sourceColor * weight;
                        }
                    }

                    destination = Color.Compress(destination);
                    target[x, y] = destination;
                }
            }
        });
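For reference, this is a minimal sketch of the two-pass shape I am aiming for, not working library code. It assumes a single-channel `float[,]` image indexed `[x, y]` in place of `Color`, and the names `TwoPassResizeSketch`, `Tap`, `BuildTaps` and `Resize` are hypothetical. It also swaps in a simple triangle kernel to stay short. The first pass resamples every source row horizontally into an intermediate buffer; the second pass resamples that buffer vertically.

```csharp
using System;
using System.Collections.Generic;

public static class TwoPassResizeSketch
{
    // Hypothetical stand-in for Weight: a source index plus its normalised weight.
    public struct Tap
    {
        public readonly int Index;
        public readonly float Value;

        public Tap(int index, float value)
        {
            Index = index;
            Value = value;
        }
    }

    // Builds normalised taps for one dimension using a triangle kernel (radius 1).
    public static Tap[][] BuildTaps(int destinationSize, int sourceSize)
    {
        float ratio = sourceSize / (float)destinationSize;
        float scale = Math.Max(ratio, 1f);
        var result = new Tap[destinationSize][];

        for (int i = 0; i < destinationSize; i++)
        {
            float center = ((i + 0.5f) * ratio) - 0.5f;
            int start = Math.Max(0, (int)Math.Ceiling(center - scale));
            int end = Math.Min(sourceSize - 1, (int)Math.Floor(center + scale));

            var taps = new List<Tap>();
            float sum = 0;
            for (int a = start; a <= end; a++)
            {
                float w = Math.Max(0f, 1f - Math.Abs((a - center) / scale));
                if (w > 0)
                {
                    taps.Add(new Tap(a, w));
                    sum += w;
                }
            }

            // Normalise so that the weights of each destination sample sum to one.
            result[i] = new Tap[taps.Count];
            for (int t = 0; t < taps.Count; t++)
            {
                result[i][t] = new Tap(taps[t].Index, taps[t].Value / sum);
            }
        }

        return result;
    }

    public static float[,] Resize(float[,] source, Tap[][] horizontal, Tap[][] vertical)
    {
        int sourceHeight = source.GetLength(1);
        int width = horizontal.Length;
        int height = vertical.Length;

        // Pass 1: horizontal only. Result is target width by source height.
        var intermediate = new float[width, sourceHeight];
        for (int y = 0; y < sourceHeight; y++)
        {
            for (int x = 0; x < width; x++)
            {
                float sum = 0;
                foreach (Tap xw in horizontal[x])
                {
                    sum += source[xw.Index, y] * xw.Value;
                }

                intermediate[x, y] = sum;
            }
        }

        // Pass 2: vertical only, reading from the intermediate buffer.
        var target = new float[width, height];
        for (int y = 0; y < height; y++)
        {
            for (int x = 0; x < width; x++)
            {
                float sum = 0;
                foreach (Tap yw in vertical[y])
                {
                    sum += intermediate[x, yw.Index] * yw.Value;
                }

                target[x, y] = sum;
            }
        }

        return target;
    }
}
```

Mapped back onto the real code, I would expect `Color.Expand` to be applied once per source pixel in the first pass and `Color.Compress` only after the second, with the intermediate buffer holding expanded colors. Both outer loops are independent per row, so each should still fit in a `Parallel.For`.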

The weights and indices are calculated as follows, once for each dimension:

    /// <summary>
    /// Computes the weights to apply at each pixel when resizing.
    /// </summary>
    /// <param name="destinationSize">The destination section size.</param>
    /// <param name="sourceSize">The source section size.</param>
    /// <returns>
    /// The <see cref="T:Weights[]"/>.
    /// </returns>
    private Weights[] PrecomputeWeights(int destinationSize, int sourceSize)
    {
        IResampler sampler = this.Sampler;
        float ratio = sourceSize / (float)destinationSize;
        float scale = ratio;

        // When shrinking, broaden the effective kernel support so that we still
        // visit every source pixel.
        if (scale < 1)
        {
            scale = 1;
        }

        float scaledRadius = (float)Math.Ceiling(scale * sampler.Radius);
        Weights[] result = new Weights[destinationSize];

        // Make the weights slices, one source for each column or row.
        Parallel.For(
            0,
            destinationSize,
            i =>
            {
                float center = ((i + .5f) * ratio) - 0.5f;
                int start = (int)Math.Ceiling(center - scaledRadius);

                if (start < 0)
                {
                    start = 0;
                }

                int end = (int)Math.Floor(center + scaledRadius);

                if (end > sourceSize)
                {
                    end = sourceSize;

                    if (end < start)
                    {
                        end = start;
                    }
                }

                float sum = 0;
                result[i] = new Weights();

                List<Weight> builder = new List<Weight>();
                for (int a = start; a < end; a++)
                {
                    float w = sampler.GetValue((a - center) / scale);

                    if (w < 0 || w > 0)
                    {
                        sum += w;
                        builder.Add(new Weight(a, w));
                    }
                }

                // Normalise the values
                if (sum > 0 || sum < 0)
                {
                    builder.ForEach(w => w.Value /= sum);
                }

                result[i].Values = builder.ToArray();
                result[i].Sum = sum;
            });

        return result;
    }

    /// <summary>
    /// Represents the weight to be added to a scaled pixel.
    /// </summary>
    protected class Weight
    {
        /// <summary>
        /// The pixel index.
        /// </summary>
        public readonly int Index;

        /// <summary>
        /// Initializes a new instance of the <see cref="Weight"/> class.
        /// </summary>
        /// <param name="index">The index.</param>
        /// <param name="value">The value.</param>
        public Weight(int index, float value)
        {
            this.Index = index;
            this.Value = value;
        }

        /// <summary>
        /// Gets or sets the result of the interpolation algorithm.
        /// </summary>
        public float Value { get; set; }
    }

    /// <summary>
    /// Represents a collection of weights and their sum.
    /// </summary>
    protected class Weights
    {
        /// <summary>
        /// Gets or sets the values.
        /// </summary>
        public Weight[] Values { get; set; }

        /// <summary>
        /// Gets or sets the sum.
        /// </summary>
        public float Sum { get; set; }
    }

Each IResampler provides the appropriate series of weights based on the given index. The bicubic resampler works as follows:

    /// <summary>
    /// The function implements the bicubic kernel algorithm W(x) as described on
    /// <see href="https://en.wikipedia.org/wiki/Bicubic_interpolation#Bicubic_convolution_algorithm">Wikipedia</see>.
    /// A commonly used algorithm within image processing that preserves sharpness better than triangle interpolation.
    /// </summary>
    public class BicubicResampler : IResampler
    {
        /// <inheritdoc/>
        public float Radius => 2;

        /// <inheritdoc/>
        public float GetValue(float x)
        {
            // The coefficient.
            float a = -0.5f;

            if (x < 0)
            {
                x = -x;
            }

            float result = 0;

            if (x <= 1)
            {
                result = (((1.5f * x) - 2.5f) * x * x) + 1;
            }
            else if (x < 2)
            {
                result = (((((a * x) + 2.5f) * x) - 4) * x) + 2;
            }

            return result;
        }
    }
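As a sanity check on the kernel itself, the sketch below copies the same polynomial into a standalone helper and sums the four taps that a sample lying `offset` past a source pixel would see at scale 1. `BicubicKernelCheck`, `Kernel` and `TapSum` are hypothetical names for illustration only.

```csharp
using System;

public static class BicubicKernelCheck
{
    // Same polynomial as GetValue above, with a = -0.5.
    public static float Kernel(float x)
    {
        const float a = -0.5f;
        x = Math.Abs(x);

        if (x <= 1)
        {
            return (((1.5f * x) - 2.5f) * x * x) + 1;
        }

        if (x < 2)
        {
            return (((((a * x) + 2.5f) * x) - 4) * x) + 2;
        }

        return 0;
    }

    // Sum of the four taps for a sample 'offset' (0..1) past a source pixel.
    public static float TapSum(float offset)
    {
        return Kernel(-1 - offset) + Kernel(-offset) + Kernel(1 - offset) + Kernel(2 - offset);
    }
}
```

Here `Kernel(0)` is 1, `Kernel(0.5f)` is 0.5625 and `Kernel(1.5f)` is -0.0625, so the four taps at a half-pixel offset sum to 1. The sum can differ from 1 when the window is clamped at an image edge or the kernel is stretched for downscaling, which is why the weights are normalised by `sum`.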

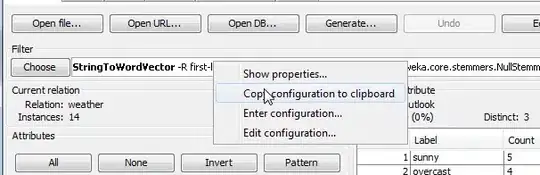

Here's an example of an image resized by the existing algorithm. The output is correct (note that the silvery sheen is preserved).

Original image

Image halved in size using the bicubic resampler.

The code is part of a much larger library that I am writing to add image processing to corefx.