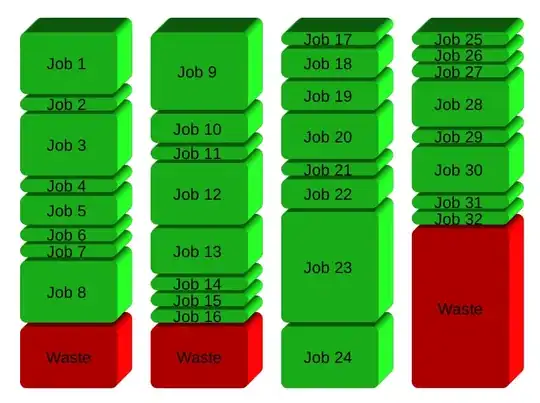

I want to distribute the work from a master server to multiple worker servers using batches.

Ideally I would have a `tasks.txt` file listing the tasks to execute, one per line:

```
cmd args 1
cmd args 2
cmd args 3
cmd args 4
cmd args 5
cmd args 6
cmd args 7
...
cmd args n
```

Each worker server would connect over SSH, read the file, and mark each line it takes as in progress or done, tagged with its own name:

```
#cmd args 1 #worker1 - done
#cmd args 2 #worker2 - in progress
#cmd args 3 #worker3 - in progress
#cmd args 4 #worker1 - in progress
cmd args 5
cmd args 6
cmd args 7
...
cmd args n
```
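Updating a single line, which is what an `update_currentTask` helper on the master would have to do, can be sketched as a bash function that rewrites the file with `awk` into a temporary file and renames it over the original. This is a hypothetical implementation, not a known tool. It assumes Linux with util-linux `flock`, that every process which rewrites `tasks.txt` (including the claim step) takes the same exclusive lock on `tasks.txt.lock`, and that the worker is identified by the client IP that sshd exports in `SSH_CLIENT`. The tag format follows the example above.

```bash
# Hypothetical master-side helper: update_currentTask FILE LINE STATUS
# Rewrites line LINE of FILE as "#<command> #<worker> - <status>", replacing any
# earlier marker. Assumes util-linux flock and that all writers share FILE.lock.
# Assumes commands never themselves contain a " #<word> - " sequence.
update_currentTask() {
    local file=$1 line=$2 status=$3
    local worker=${SSH_CLIENT%% *}      # client IP when invoked through ssh
    worker=${worker:-unknown}
    (
        flock -x 9 || exit 1            # serialize with every other writer
        tmp=$(mktemp "$file.XXXXXX") || exit 1
        awk -v n="$line" -v tag="#$worker - $status" '
            NR == n {
                sub(/^#/, "")               # drop the leading "claimed" marker
                sub(/ #[^ ]+ - .*$/, "")    # drop the old "#worker - status" tag
                $0 = "#" $0 " " tag
            }
            { print }' "$file" > "$tmp" && mv "$tmp" "$file"
    ) 9>"$file.lock"
}
```

Writing to a temporary file in the same directory and renaming it means a reader never sees a half-written task list.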

I know how to make the SSH connection, read the file, and execute commands remotely, but I don't know how to make the read-and-mark step atomic, so that two servers never start the same task, or how to update a single line of the file in place.
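A common way to make the read-and-mark step atomic is to hold an exclusive lock for the whole read-modify-write. Because the helper runs on the master, every worker contends for one local lock, so no distributed locking is needed. Below is a minimal sketch of a `read_and_lock_next_available_line` function, assuming Linux with util-linux `flock`, bash on the master, and a worker identified by the client IP in `SSH_CLIENT`. It locks a separate `tasks.txt.lock` file, picks the first non-blank line without a leading `#`, marks it, and prints the line number followed by the command (number first, because the command itself contains spaces).

```bash
# Hypothetical master-side helper: read_and_lock_next_available_line FILE
# Prints "<line number> <command>" for the claimed line, or nothing if no task is free.
read_and_lock_next_available_line() {
    local file=$1
    local worker=${SSH_CLIENT%% *}      # client IP when invoked through ssh
    worker=${worker:-unknown}
    (
        # Exclusive lock for the whole read-modify-write, so two workers
        # can never pick the same line.
        flock -x 9 || exit 1
        # First non-blank line that is not already marked with a leading '#'.
        next=$(awk '!/^#/ && NF { print NR " " $0; exit }' "$file")
        [[ -n $next ]] || exit 0        # nothing left to claim
        n=${next%% *}
        tmp=$(mktemp "$file.XXXXXX") || exit 1
        # Mark the claimed line in the "#cmd #worker - in progress" style.
        awk -v n="$n" -v tag="#$worker - in progress" \
            'NR == n { $0 = "#" $0 " " tag } { print }' "$file" > "$tmp" &&
            mv "$tmp" "$file" &&
            printf '%s\n' "$next"
    ) 9>"$file.lock"
}
```

To call it over ssh, it would have to live in a script on the master (for example one that defines the function and then runs `read_and_lock_next_available_line "$1"`), reachable from the ssh user's non-interactive shell.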

I would like each worker to pull from the task list and lock the next available task itself, rather than having the master actively dispatch work to the workers, because I will run a variable number of worker clones and start or stop them depending on how quickly I need the tasks completed.
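With a pull model, clones can be added or removed at any time; the only shared requirement is that claiming a task is exclusive. A lighter variant of the claim step (a swapped-in technique, not the in-file marking scheme described above) keeps the number of the next unclaimed line in a side file and advances it under `flock`, leaving the task list itself untouched. The names `claim_index` and `FILE.next` are hypothetical; the sketch assumes Linux `flock` and exactly one task per line with no blank lines.

```bash
# Hypothetical helper: claim_index FILE
# Prints "<line number> <command>" for the next unclaimed line of FILE, or
# nothing once every line has been handed out. The counter lives in FILE.next.
claim_index() {
    local file=$1
    (
        flock -x 9 || exit 1                 # one claimer at a time
        i=$(cat "$file.next" 2>/dev/null)
        i=${i:-1}                            # the first claim starts at line 1
        total=$(wc -l < "$file")
        (( i <= total )) || exit 0           # list exhausted
        echo $(( i + 1 )) > "$file.next"     # advance before releasing the lock
        printf '%s %s\n' "$i" "$(sed -n "${i}p" "$file")"
    ) 9>"$file.lock"
}
```

A worker would call `claim_index` in a loop until it prints nothing; any number of workers can run that loop concurrently. The trade-off is that per-task status has to be recorded somewhere other than `tasks.txt`.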

UPDATE:

My idea for the worker script is:

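```bash
#!/bin/bash
# Worker: claim a task on the master, run it locally, report the result, repeat.
# read_and_lock_next_available_line and update_currentTask are still placeholders
# for helper commands that must exist on the master. The first is assumed to print
# "<line number> <command>", or nothing when no task is free.

masterSSH=(ssh usr@masterhost)   # an array keeps the ssh command intact
tasksFile="/path/to/tasks.txt"
taskCmd=""
taskLine=0

getTask() {
    local reply
    while [[ -z $taskCmd ]]; do
        reply=$("${masterSSH[@]}" "read_and_lock_next_available_line $(printf '%q' "$tasksFile")")
        if [[ -n $reply ]]; then
            # $(...) returns a single string, not an array: split off the line number.
            read -r taskLine taskCmd <<< "$reply"
        else
            sleep 1   # no free task yet; poll again
        fi
    done
}

updateTask() {
    # %q quotes each argument so multi-word messages survive the remote shell.
    "${masterSSH[@]}" "update_currentTask $(printf '%q %q %q' "$tasksFile" "$taskLine" "$1")"
}

doTask() {
    # `return` only accepts a numeric status, so run the command instead;
    # the function's exit status is then the command's exit status.
    bash -c "$taskCmd"
}

while true; do
    getTask
    updateTask "in progress"
    doTask
    taskErrCode=$?
    if (( taskErrCode == 0 )); then
        updateTask "done, finished successfully"
    else
        updateTask "done, error $taskErrCode"
    fi
    taskCmd=""
    taskLine=0
done
```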
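When a status message is sent over SSH, `ssh` joins its command arguments into one string that the remote login shell parses again, so a message such as `done, finished successfully` is split into separate words unless it is quoted for that second pass. A small sketch of a helper that builds such a command line with bash's `printf '%q'` is below; the name `remote_cmd` is hypothetical, and it assumes the remote login shell accepts backslash-escaped words (any POSIX shell does for ordinary printable text).

```bash
# Hypothetical helper: remote_cmd ARG...
# Prints ARG... as one command line in which every argument is quoted for a
# second round of shell parsing (as happens on the far side of ssh).
remote_cmd() {
    local out
    printf -v out '%q ' "$@"
    printf '%s\n' "${out% }"   # drop the trailing space
}

# Usage on a worker (host, path and helper name as in the question):
#   ssh usr@masterhost "$(remote_cmd update_currentTask /path/to/tasks.txt 3 'done, error 2')"
```

Running the result through `bash -c` locally is a quick way to check the quoting, since that reproduces the extra parse the remote shell performs.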