I encoded a WAV file as base64 and saved it as audioClipName.txt in Resources/Sounds.
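For reference, a minimal sketch of producing such a text file from a WAV with plain .NET calls. The class name `WavBase64Encoder` and the paths are hypothetical; the question does not say how the file was actually encoded.

```csharp
using System;
using System.IO;

// Hypothetical helper: turns WAV bytes into base64 text that Unity can load
// as a TextAsset. Base64 is lossless, so decoding returns the exact bytes.
public static class WavBase64Encoder
{
    public static string Encode(byte[] wavBytes)
    {
        return Convert.ToBase64String(wavBytes);
    }

    // Paths are illustrative only, e.g. ("clip.wav",
    // "Assets/Resources/Sounds/audioClipName.txt").
    public static void EncodeFile(string wavPath, string txtPath)
    {
        byte[] wavBytes = File.ReadAllBytes(wavPath);
        File.WriteAllText(txtPath, Encode(wavBytes));
    }
}
```

Because base64 round-trips exactly, any corruption has to happen after `Convert.FromBase64String`, not in the encoding step.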

Then I tried to decode it, make an AudioClip from it and play it like this:

```csharp
public static void CreateAudioClip()
{
    // Load the base64 text asset and decode it back to the raw WAV bytes.
    string s = Resources.Load<TextAsset>("Sounds/audioClipName").text;
    byte[] bytes = System.Convert.FromBase64String(s);
    float[] f = ConvertByteToFloat(bytes);

    // Build a 2-channel, 44.1 kHz clip from the converted samples and play it.
    AudioClip audioClip = AudioClip.Create("testSound", f.Length, 2, 44100, false, false);
    audioClip.SetData(f, 0);

    AudioSource source = GameObject.FindObjectOfType<AudioSource>();
    source.PlayOneShot(audioClip);
}

// Interprets every 4 bytes of the input as one big-endian 32-bit float.
// Array.Reverse modifies the input array in place.
private static float[] ConvertByteToFloat(byte[] array)
{
    float[] floatArr = new float[array.Length / 4];
    for (int i = 0; i < floatArr.Length; i++)
    {
        if (BitConverter.IsLittleEndian)
            Array.Reverse(array, i * 4, 4);
        floatArr[i] = BitConverter.ToSingle(array, i * 4);
    }
    return floatArr;
}
```

Everything works, except that the clip plays back as pure noise.
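One plausible cause, assuming the clip is ordinary 16-bit PCM (the most common WAV encoding; the file details from Unity would confirm the bit depth): `ConvertByteToFloat` treats every 4 bytes as a big-endian IEEE 754 float, whereas 16-bit PCM stores two little-endian signed integers in those 4 bytes, and the header bytes get converted too. The hypothetical `PcmAsFloatDemo` below compares both readings of the same 4 bytes, here the samples 1000 and -1000.

```csharp
using System;

public static class PcmAsFloatDemo
{
    // Mimics ConvertByteToFloat for one 4-byte group: reverse on
    // little-endian machines, then read as a 32-bit float.
    public static float ReadAsBigEndianFloat(byte[] bytes, int offset)
    {
        byte[] group = new byte[4];
        Array.Copy(bytes, offset, group, 0, 4);
        if (BitConverter.IsLittleEndian)
            Array.Reverse(group);
        return BitConverter.ToSingle(group, 0);
    }

    // What the same bytes mean in a 16-bit PCM WAV: two little-endian
    // signed samples, scaled into [-1, 1).
    public static float[] ReadAsPcm16(byte[] bytes, int offset)
    {
        short first = (short)(bytes[offset] | (bytes[offset + 1] << 8));
        short second = (short)(bytes[offset + 2] | (bytes[offset + 3] << 8));
        return new[] { first / 32768f, second / 32768f };
    }
}
```

For the bytes `E8 03 18 FC` the float reading is roughly -2.5e24, while the PCM reading gives about ±0.0305. Across real audio the float reading scatters values over an enormous range (including NaN and infinity), which would be heard as noise.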

I found a similar question here on Stack Overflow, but its answer doesn't solve the problem.

Here are the details Unity3D shows for the WAV file:

Does anyone know what the problem is here?
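Rather than hard-coding 2 channels and 44100 Hz, the format can be read from the file itself. A minimal sketch, assuming a plain RIFF/WAVE file (no RF64, no compressed formats); the `WavInfo` class is hypothetical. It walks the chunks, reads the `fmt ` fields, and records where the `data` chunk starts, so the header is not converted as audio.

```csharp
using System;
using System.Text;

// Hypothetical helper: reports format fields and the sample-data location.
public sealed class WavInfo
{
    public int AudioFormat;    // 1 = integer PCM, 3 = IEEE float
    public int Channels;
    public int SampleRate;
    public int BitsPerSample;
    public int DataOffset;     // index of the first sample byte
    public int DataLength;     // size of the data chunk in bytes

    // WAV fields are little-endian regardless of the machine's byte order.
    static int ReadUInt16LE(byte[] b, int i) => b[i] | (b[i + 1] << 8);
    static int ReadInt32LE(byte[] b, int i) =>
        b[i] | (b[i + 1] << 8) | (b[i + 2] << 16) | (b[i + 3] << 24);

    public static WavInfo Parse(byte[] wav)
    {
        if (wav.Length < 12 ||
            Encoding.ASCII.GetString(wav, 0, 4) != "RIFF" ||
            Encoding.ASCII.GetString(wav, 8, 4) != "WAVE")
            throw new FormatException("Not a RIFF/WAVE file.");

        var info = new WavInfo();
        bool haveFmt = false;
        int pos = 12; // first sub-chunk follows "RIFF", size, "WAVE"
        while (pos + 8 <= wav.Length)
        {
            string id = Encoding.ASCII.GetString(wav, pos, 4);
            int size = ReadInt32LE(wav, pos + 4);
            if (size < 0) throw new FormatException("Invalid chunk size.");
            int body = pos + 8;
            if (id == "fmt ")
            {
                info.AudioFormat = ReadUInt16LE(wav, body);
                info.Channels = ReadUInt16LE(wav, body + 2);
                info.SampleRate = ReadInt32LE(wav, body + 4);
                info.BitsPerSample = ReadUInt16LE(wav, body + 14);
                haveFmt = true;
            }
            else if (id == "data" && haveFmt)
            {
                info.DataOffset = body;
                info.DataLength = Math.Min(size, wav.Length - body);
                return info;
            }
            pos = body + size + (size & 1); // chunks are padded to an even size
        }
        throw new FormatException("No fmt/data chunk pair found.");
    }
}
```

For a canonical file the data usually starts at byte 44, but other chunks (such as `LIST`) can appear before `data`, which is why the sketch walks the chunks instead of assuming a fixed offset.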

EDIT

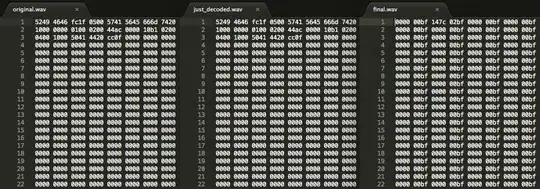

I wrote out two binary files, one right after decoding from base64 and one after the final conversion, and compared them to the original WAV file:

As you can see, the file was encoded correctly, because simply decoding it and writing it out like this:

```csharp
string scat = Resources.Load<TextAsset>("Sounds/test").text;
byte[] bcat = System.Convert.FromBase64String(scat);
System.IO.File.WriteAllBytes("Assets/just_decoded.wav", bcat);
```

produced an identical file. All the files have the same length.

But the final one is wrong, so the problem must be somewhere in the conversion to a float array. I don't understand what could be wrong there.
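A minimal sketch of a conversion for the 16-bit PCM case, assuming the sample data's offset and length are already known (for a canonical header, offset 44). The `Pcm16.ToFloat` name is hypothetical; it reads each little-endian 16-bit sample and scales it into [-1, 1), which is the range Unity's `AudioClip.SetData` expects.

```csharp
using System;

public static class Pcm16
{
    // Hypothetical replacement for ConvertByteToFloat: reads `length` bytes
    // of 16-bit little-endian PCM starting at `offset`. Stereo samples stay
    // interleaved (L, R, L, R, ...).
    public static float[] ToFloat(byte[] bytes, int offset, int length)
    {
        int sampleCount = length / 2;               // 2 bytes per sample
        float[] samples = new float[sampleCount];
        for (int i = 0; i < sampleCount; i++)
        {
            int p = offset + i * 2;
            short s = (short)(bytes[p] | (bytes[p + 1] << 8)); // low byte first
            samples[i] = s / 32768f;
        }
        return samples;
    }
}
```

In Unity 5 and later, the result could then be passed to `AudioClip.Create(name, samples.Length / channels, channels, sampleRate, false)` followed by `SetData(samples, 0)`. Note that `lengthSamples` counts sample frames per channel, so passing the full interleaved length for a 2-channel clip makes the clip twice as long as the audio.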

EDIT:

Here is the code that writes final.wav:

```csharp
string scat = Resources.Load<TextAsset>("Sounds/test").text;
byte[] bcat = System.Convert.FromBase64String(scat);
float[] f = ConvertByteToFloat(bcat);

byte[] byteArray = new byte[f.Length * 4];
Buffer.BlockCopy(f, 0, byteArray, 0, byteArray.Length);
System.IO.File.WriteAllBytes("Assets/final.wav", byteArray);
```
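The `Buffer.BlockCopy` dump above writes the raw float bytes with no RIFF header of its own (on a little-endian machine, each 4-byte group of the original, header included, ends up byte-reversed), so audio players cannot interpret it. To listen to the converted samples, a sketch like the hypothetical `WavWriter` below could encode them as a canonical 16-bit PCM WAV; it assumes finite samples in [-1, 1] and relies on `BinaryWriter` always writing little-endian.

```csharp
using System;
using System.IO;
using System.Text;

public static class WavWriter
{
    // Hypothetical helper: 44-byte header + 16-bit PCM data from
    // interleaved float samples. Assumes the samples are finite.
    public static byte[] ToWav16(float[] samples, int channels, int sampleRate)
    {
        int dataLength = samples.Length * 2;
        using (var ms = new MemoryStream(44 + dataLength))
        using (var w = new BinaryWriter(ms))
        {
            w.Write(Encoding.ASCII.GetBytes("RIFF"));
            w.Write(36 + dataLength);                 // RIFF chunk size
            w.Write(Encoding.ASCII.GetBytes("WAVE"));
            w.Write(Encoding.ASCII.GetBytes("fmt "));
            w.Write(16);                              // fmt chunk size for PCM
            w.Write((short)1);                        // audio format: PCM
            w.Write((short)channels);
            w.Write(sampleRate);
            w.Write(sampleRate * channels * 2);       // byte rate
            w.Write((short)(channels * 2));           // block align
            w.Write((short)16);                       // bits per sample
            w.Write(Encoding.ASCII.GetBytes("data"));
            w.Write(dataLength);
            foreach (float f in samples)
            {
                // Clamp, then scale by 32767 so +1.0 does not overflow a short.
                float clamped = Math.Max(-1f, Math.Min(1f, f));
                w.Write((short)Math.Round(clamped * 32767f));
            }
            w.Flush();
            return ms.ToArray();
        }
    }
}
```

Writing `WavWriter.ToWav16(samples, channels, sampleRate)` to disk instead of the raw dump gives a file that can be opened and compared by ear with the original.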