I am trying to get my head around threading vs. CPU usage. There are plenty of discussions about threading vs. multiprocessing (a good overview being this answer), so I decided to test this out by launching the maximum number of threads on my 8-CPU laptop running Windows 10 and Python 3.4.

My assumption was that all the threads would be bound to a single CPU.

EDIT: it turns out that this was not a good assumption. I now understand that for multithreaded code, only one piece of Python code can run at a time, no matter which core it runs on (in CPython this is enforced by the Global Interpreter Lock, the GIL). Multiprocessing code is different: the processes are independent and really do run in parallel.
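One way to see this on a standard CPython build (which has the Global Interpreter Lock, or GIL) is to compare the wall-clock time of a few CPU-bound threads with the total CPU time the process consumed. This is a minimal sketch, assuming Python 3.3+ for `time.perf_counter()` and `time.process_time()`; the function names `spin` and `measure` are hypothetical. If the threads really executed Python code in parallel, CPU time would approach `num_threads × wall time`; under the GIL it should stay roughly at one core's worth.

```python
import threading
import time


def spin(iterations):
    # Pure-Python busy loop: the thread needs the GIL to execute this bytecode.
    total = 0
    for i in range(iterations):
        total += i ^ 36  # bitwise XOR, just to keep the interpreter busy
    return total


def measure(num_threads=4, iterations=1000000):
    """Run `spin` in several threads; return (wall_seconds, cpu_seconds)."""
    threads = [threading.Thread(target=spin, args=(iterations,))
               for _ in range(num_threads)]
    wall_start = time.perf_counter()
    cpu_start = time.process_time()  # CPU time summed over all threads of this process
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    wall = time.perf_counter() - wall_start
    cpu = time.process_time() - cpu_start
    return wall, cpu


if __name__ == "__main__":
    wall, cpu = measure()
    # On a GIL build, cpu / wall stays near 1 (about one core busy),
    # regardless of how many cores the machine has.
    print("wall: {:.2f}s  cpu: {:.2f}s  ratio: {:.2f}".format(wall, cpu, cpu / wall))
```

With `multiprocessing` instead of `threading`, each worker has its own interpreter and its own GIL, so the same ratio can grow towards the number of workers.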

I had read about these differences before, but it was one particular answer that actually clarified this point for me.

I think this also explains the CPU view below: it is an average over many threads spread across many CPUs, with only one of them running at any given time (which, averaged over time, looks like all of them running all the time).
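The averaging effect can be illustrated with a toy model. This is a simplified sketch, not a description of how the Windows scheduler actually works: it assumes exactly one thread runs at a time (the one holding the GIL) and that the OS places it on a randomly chosen core in each time slice. The names `simulate_load`, `cores`, `slices` and `seed` are hypothetical. Each core then appears busy for roughly 1/8 of the time on an 8-CPU machine, and the per-core loads add up to one fully busy core.

```python
import random


def simulate_load(cores=8, slices=80000, seed=0):
    """Toy model: in every time slice exactly one thread runs (the GIL
    holder) on a randomly picked core. Returns the fraction of slices
    each core spent busy."""
    rng = random.Random(seed)  # seeded so the result is reproducible
    busy = [0] * cores
    for _ in range(slices):
        busy[rng.randrange(cores)] += 1
    return [count / slices for count in busy]


if __name__ == "__main__":
    loads = simulate_load()
    for core, load in enumerate(loads):
        print("CPU {}: {:5.1f}% busy".format(core, 100 * load))
    # The per-core loads sum to one fully busy core, which an overall
    # CPU meter averaging 8 logical CPUs shows as about 100 / 8 = 12.5%.
    print("total: {:.1f}% of one core".format(100 * sum(loads)))
```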

It is not a duplicate of the linked question (which addresses the opposite problem, i.e. all threads on one core), and I will leave it open in case someone has a similar question one day and is hopefully helped by my enlightenment.

The code

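```python
import threading
import time


def calc():
    time.sleep(5)  # wait 5 s before starting the busy loop
    while True:
        a = 2356^36  # CPU-bound busy loop; note that ^ is bitwise XOR in Python, not a power


n = 0
while True:
    try:
        n += 1
        t = threading.Thread(target=calc)
        t.start()
    except RuntimeError:  # raised by start() when no new thread can be created
        # n also counts the failed attempt, so the printed value is one
        # more than the number of threads actually started
        print("max threads: {n}".format(n=n))
        break
    else:
        print('.')

time.sleep(100000)
```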
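To repeat the experiment without leaving hundreds of spinning threads behind, a capped variant like the following may help. It is a sketch under some assumptions: Python 3.8+ for `threading.get_native_id()`, and the helper `run_capped_experiment` with its `limit` parameter is hypothetical. It starts at most `limit` daemon threads, stops them through a shared `threading.Event`, and records each thread's native (OS-level) ID, which shows that every Python thread is a separate OS thread that the operating system is free to schedule on any core.

```python
import threading


def run_capped_experiment(limit=8):
    """Start up to `limit` busy-looping threads, record their OS thread IDs,
    then stop them all. Returns the native IDs of the started threads."""
    stop = threading.Event()      # tells every spinner to exit
    cond = threading.Condition()  # protects native_ids and wakes the main thread
    native_ids = []

    def calc():
        with cond:
            native_ids.append(threading.get_native_id())  # Python 3.8+
            cond.notify()
        while not stop.is_set():
            a = 2356 ^ 36  # CPU-bound busy work (bitwise XOR, as in the original)

    threads = []
    for _ in range(limit):
        t = threading.Thread(target=calc, daemon=True)
        try:
            t.start()
        except RuntimeError:  # the OS refused to create another thread
            break
        threads.append(t)

    # Wait until every started thread has registered, so all are alive at once
    # (their IDs are therefore distinct), then shut them down.
    with cond:
        cond.wait_for(lambda: len(native_ids) == len(threads))
    stop.set()
    for t in threads:
        t.join()
    return native_ids


if __name__ == "__main__":
    ids = run_capped_experiment(limit=8)
    print("started {} threads, {} distinct OS thread IDs".format(len(ids), len(set(ids))))
```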

Running it led to 889 threads being started.

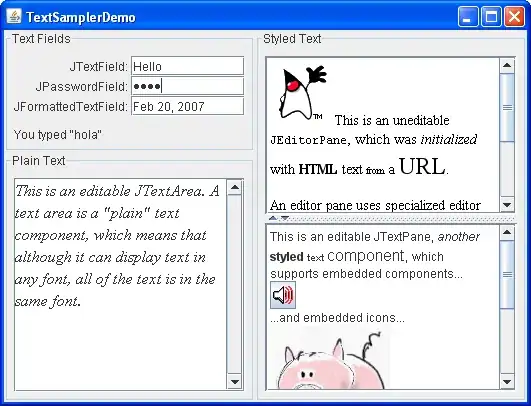

However, the load was distributed across the CPUs (and was surprisingly low for a pure CPU calculation; the laptop is otherwise idle, with no load, when my script is not running):

Why is that? Are the threads constantly moved as a pack between CPUs, so that what I see is just an average (while in reality, at any given moment, all threads are on one CPU)? Or are they really distributed?