I'm trying to combine an image and a video. They combine and export, but the exported video is rotated sideways.

Sorry for the bulk code paste. I've seen answers that suggest setting compositionVideoTrack.preferredTransform, but that does nothing. Adding a transform to the AVMutableVideoCompositionInstruction does nothing either.

I feel like this is where things start to go wrong:

    // I feel like this loading here is the problem
    let videoTrack = videoAsset.tracksWithMediaType(AVMediaTypeVideo)[0]

    // because it makes our parentLayer and videoLayer sizes wrong
    let videoSize = videoTrack.naturalSize

    // this is returning 1920x1080, so it is rotating the video
    print("\(videoSize.width) , \(videoSize.height)")

From this point on, the frame sizes are wrong for the rest of the method. When we then create the overlay image layer, its frame is not correct:

    let aLayer = CALayer()
    aLayer.contents = UIImage(named: "OverlayTestImageOverlay")?.CGImage
    aLayer.frame = CGRectMake(0, 0, videoSize.width, videoSize.height)
    aLayer.opacity = 1
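For context on the 1920x1080 reading: naturalSize reports the encoded frame dimensions and does not take the track's preferredTransform into account, so a portrait recording can still report a landscape size. Below is a minimal sketch of deriving the displayed size from the two values. It is written in current Swift syntax rather than the Swift 2 syntax used in this post, the helper name orientedSize is hypothetical, and the transform values are an assumed example of a 90-degree portrait recording, not values read from SampleMovie.MOV.

```swift
import CoreGraphics

/// Hypothetical helper: the size a track occupies once its
/// preferredTransform has been applied to its naturalSize.
func orientedSize(naturalSize: CGSize, preferredTransform: CGAffineTransform) -> CGSize {
    // applying(_:) returns the bounding box of the transformed rectangle
    let rect = CGRect(origin: .zero, size: naturalSize).applying(preferredTransform)
    return CGSize(width: abs(rect.width), height: abs(rect.height))
}

// Assumed example: 1920x1080 frames rotated 90 degrees, shifted right by 1080
let portraitTransform = CGAffineTransform(a: 0, b: 1, c: -1, d: 0, tx: 1080, ty: 0)
let size = orientedSize(naturalSize: CGSize(width: 1920, height: 1080),
                        preferredTransform: portraitTransform)
print(size.width, size.height) // width 1080, height 1920
```

If this holds for the asset in question, the layer frames and renderSize would be based on the oriented size rather than on naturalSize directly.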

Here is my complete method.

    func combineImageVid() {
        let path = NSBundle.mainBundle().pathForResource("SampleMovie", ofType:"MOV")
        let fileURL = NSURL(fileURLWithPath: path!)
        let videoAsset = AVURLAsset(URL: fileURL)

        let mixComposition = AVMutableComposition()
        let compositionVideoTrack = mixComposition.addMutableTrackWithMediaType(AVMediaTypeVideo, preferredTrackID: kCMPersistentTrackID_Invalid)
        var clipVideoTrack = videoAsset.tracksWithMediaType(AVMediaTypeVideo)
        do {
            try compositionVideoTrack.insertTimeRange(CMTimeRangeMake(kCMTimeZero, videoAsset.duration), ofTrack: clipVideoTrack[0], atTime: kCMTimeZero)
        } catch _ {
            print("failed to insertTimeRange")
        }
        compositionVideoTrack.preferredTransform = videoAsset.preferredTransform

        // I feel like this loading here is the problem
        let videoTrack = videoAsset.tracksWithMediaType(AVMediaTypeVideo)[0]

        // because it makes our parentLayer and videoLayer sizes wrong
        let videoSize = videoTrack.naturalSize

        // this is returning 1920x1080, so it is rotating the video
        print("\(videoSize.width) , \(videoSize.height)")

        let aLayer = CALayer()
        aLayer.contents = UIImage(named: "OverlayTestImageOverlay")?.CGImage
        aLayer.frame = CGRectMake(0, 0, videoSize.width, videoSize.height)
        aLayer.opacity = 1

        let parentLayer = CALayer()
        let videoLayer = CALayer()
        parentLayer.frame = CGRectMake(0, 0, videoSize.width, videoSize.height)
        videoLayer.frame = CGRectMake(0, 0, videoSize.width, videoSize.height)
        parentLayer.addSublayer(videoLayer)
        parentLayer.addSublayer(aLayer)

        let videoComp = AVMutableVideoComposition()
        videoComp.renderSize = videoSize
        videoComp.frameDuration = CMTimeMake(1, 30)
        videoComp.animationTool = AVVideoCompositionCoreAnimationTool(postProcessingAsVideoLayer: videoLayer, inLayer: parentLayer)

        let instruction = AVMutableVideoCompositionInstruction()
        instruction.timeRange = CMTimeRangeMake(kCMTimeZero, mixComposition.duration)
        let mixVideoTrack = mixComposition.tracksWithMediaType(AVMediaTypeVideo)[0]
        mixVideoTrack.preferredTransform = CGAffineTransformMakeRotation(CGFloat(M_PI * 90.0 / 180))
        let layerInstruction = AVMutableVideoCompositionLayerInstruction(assetTrack: mixVideoTrack)
        instruction.layerInstructions = [layerInstruction]
        videoComp.instructions = [instruction]

        // create new file to receive data
        let dirPaths = NSSearchPathForDirectoriesInDomains(.DocumentDirectory, .UserDomainMask, true)
        let docsDir: AnyObject = dirPaths[0]
        let movieFilePath = docsDir.stringByAppendingPathComponent("result.mov")
        let movieDestinationUrl = NSURL(fileURLWithPath: movieFilePath)
        do {
            try NSFileManager.defaultManager().removeItemAtPath(movieFilePath)
        } catch _ {}

        // use AVAssetExportSession to export video
        let assetExport = AVAssetExportSession(asset: mixComposition, presetName:AVAssetExportPresetHighestQuality)
        assetExport?.videoComposition = videoComp
        assetExport!.outputFileType = AVFileTypeQuickTimeMovie
        assetExport!.outputURL = movieDestinationUrl
        assetExport!.exportAsynchronouslyWithCompletionHandler({
            switch assetExport!.status{
            case AVAssetExportSessionStatus.Failed:
                print("failed \(assetExport!.error)")
            case AVAssetExportSessionStatus.Cancelled:
                print("cancelled \(assetExport!.error)")
            default:
                print("Movie complete")
                // play video
                NSOperationQueue.mainQueue().addOperationWithBlock({ () -> Void in
                    print(movieDestinationUrl)
                })
            }
        })
    }

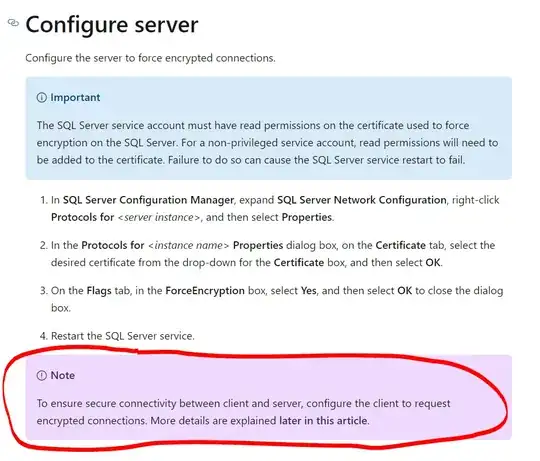

This is what I'm getting exported:

I tried adding these two methods in order to rotate the video:

    class func videoCompositionInstructionForTrack(track: AVCompositionTrack, asset: AVAsset) -> AVMutableVideoCompositionLayerInstruction {
        let instruction = AVMutableVideoCompositionLayerInstruction(assetTrack: track)
        let assetTrack = asset.tracksWithMediaType(AVMediaTypeVideo)[0]

        let transform = assetTrack.preferredTransform
        let assetInfo = orientationFromTransform(transform)

        var scaleToFitRatio = UIScreen.mainScreen().bounds.width / assetTrack.naturalSize.width
        if assetInfo.isPortrait {
            scaleToFitRatio = UIScreen.mainScreen().bounds.width / assetTrack.naturalSize.height
            let scaleFactor = CGAffineTransformMakeScale(scaleToFitRatio, scaleToFitRatio)
            instruction.setTransform(CGAffineTransformConcat(assetTrack.preferredTransform, scaleFactor), atTime: kCMTimeZero)
        } else {
            let scaleFactor = CGAffineTransformMakeScale(scaleToFitRatio, scaleToFitRatio)
            var concat = CGAffineTransformConcat(CGAffineTransformConcat(assetTrack.preferredTransform, scaleFactor), CGAffineTransformMakeTranslation(0, UIScreen.mainScreen().bounds.width / 2))
            if assetInfo.orientation == .Down {
                let fixUpsideDown = CGAffineTransformMakeRotation(CGFloat(M_PI))
                let windowBounds = UIScreen.mainScreen().bounds
                let yFix = assetTrack.naturalSize.height + windowBounds.height
                let centerFix = CGAffineTransformMakeTranslation(assetTrack.naturalSize.width, yFix)
                concat = CGAffineTransformConcat(CGAffineTransformConcat(fixUpsideDown, centerFix), scaleFactor)
            }
            instruction.setTransform(concat, atTime: kCMTimeZero)
        }

        return instruction
    }

    class func orientationFromTransform(transform: CGAffineTransform) -> (orientation: UIImageOrientation, isPortrait: Bool) {
        var assetOrientation = UIImageOrientation.Up
        var isPortrait = false
        if transform.a == 0 && transform.b == 1.0 && transform.c == -1.0 && transform.d == 0 {
            assetOrientation = .Right
            isPortrait = true
        } else if transform.a == 0 && transform.b == -1.0 && transform.c == 1.0 && transform.d == 0 {
            assetOrientation = .Left
            isPortrait = true
        } else if transform.a == 1.0 && transform.b == 0 && transform.c == 0 && transform.d == 1.0 {
            assetOrientation = .Up
        } else if transform.a == -1.0 && transform.b == 0 && transform.c == 0 && transform.d == -1.0 {
            assetOrientation = .Down
        }
        return (assetOrientation, isPortrait)
    }
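As an aside, orientationFromTransform above matches the four matrix entries by exact equality. A different technique, shown here only as a sketch, is to read the rotation angle from the a and b components with atan2 and round it to whole degrees. The function name rotationDegrees is hypothetical, the code uses current Swift syntax rather than the Swift 2 syntax of this post, and it assumes the transform is a plain rotation plus translation with no mirroring or non-uniform scale.

```swift
import Foundation
import CoreGraphics

/// Hypothetical helper: rotation encoded in a transform, in whole degrees (0..<360).
/// Assumes a rotation-plus-translation transform (no mirroring or skew).
func rotationDegrees(of transform: CGAffineTransform) -> Int {
    // For a rotation matrix, a = cos(angle) and b = sin(angle)
    let radians = atan2(Double(transform.b), Double(transform.a))
    let degrees = Int((radians * 180 / .pi).rounded())
    return (degrees + 360) % 360
}

// Assumed example values matching the cases tested in orientationFromTransform
let right = CGAffineTransform(a: 0, b: 1, c: -1, d: 0, tx: 1080, ty: 0)
let left = CGAffineTransform(a: 0, b: -1, c: 1, d: 0, tx: 0, ty: 1920)
print(rotationDegrees(of: right), rotationDegrees(of: left))
```

Under those assumptions, 90 and 270 would correspond to the portrait cases, and 0 and 180 to the landscape ones.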

I then updated my combineImageVid() method, adding this:

    let instruction = AVMutableVideoCompositionInstruction()
    instruction.timeRange = CMTimeRangeMake(kCMTimeZero, mixComposition.duration)
    let mixVideoTrack = mixComposition.tracksWithMediaType(AVMediaTypeVideo)[0]

    //let layerInstruction = AVMutableVideoCompositionLayerInstruction(assetTrack: mixVideoTrack)
    //layerInstruction.setTransform(videoAsset.preferredTransform, atTime: kCMTimeZero)

    let layerInstruction = videoCompositionInstructionForTrack(compositionVideoTrack, asset: videoAsset)

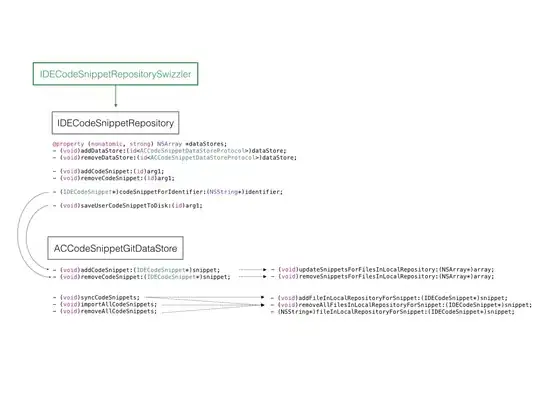

Which gives me this output:

So I'm getting closer. However, I feel that because the track is being loaded the wrong way to begin with, I need to address the issue there. Also, I don't know why the huge black box is there now. I thought it might be because my image layer takes the bounds of the loaded video asset here:

    aLayer.frame = CGRectMake(0, 0, videoSize.width, videoSize.height)

However, changing that to a small width and height makes no difference. I then tried adding a crop rect to get rid of the black square, but that didn't work either :(

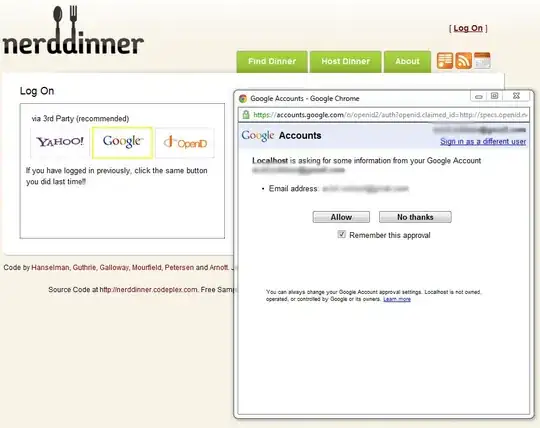

Following Allen's suggestion, I stopped using these two methods:

    class func videoCompositionInstructionForTrack(track: AVCompositionTrack, asset: AVAsset) -> AVMutableVideoCompositionLayerInstruction

    class func orientationFromTransform(transform: CGAffineTransform) -> (orientation: UIImageOrientation, isPortrait: Bool)

Instead, I updated my original method to look like this:

    videoLayer.frame = CGRectMake(0, 0, videoSize.height, videoSize.width) // notice the switched width and height

    ...

    videoComp.renderSize = CGSizeMake(videoSize.height, videoSize.width) // this makes the final video portrait

    ...

    layerInstruction.setTransform(videoTrack.preferredTransform, atTime: kCMTimeZero) // important: tells the composition to rotate the original video in the output
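One way to reason about how renderSize and the layer instruction's transform interact is to check where the transformed frame lands relative to the render canvas; whatever part of the canvas the frame does not cover would typically come out black. The sketch below is only an illustration in current Swift syntax (this post uses Swift 2). The helper visibleFraction is hypothetical, and the sizes and transforms are assumed example values, not measurements from the exported file.

```swift
import CoreGraphics

/// Hypothetical helper: fraction of the transformed video frame that falls
/// inside a render canvas of the given size (0 = fully off-canvas, 1 = fully visible).
func visibleFraction(naturalSize: CGSize,
                     transform: CGAffineTransform,
                     renderSize: CGSize) -> CGFloat {
    let placed = CGRect(origin: .zero, size: naturalSize).applying(transform)
    let canvas = CGRect(origin: .zero, size: renderSize)
    let overlap = placed.intersection(canvas)
    guard !overlap.isNull, placed.width > 0, placed.height > 0 else { return 0 }
    return (overlap.width * overlap.height) / (placed.width * placed.height)
}

let natural = CGSize(width: 1920, height: 1080)

// Assumed portrait transform: 90-degree rotation plus a 1080-point shift to the right
let rotateAndShift = CGAffineTransform(a: 0, b: 1, c: -1, d: 0, tx: 1080, ty: 0)
// The same rotation with no translation, as a bare rotation transform would give
let rotateOnly = CGAffineTransform(a: 0, b: 1, c: -1, d: 0, tx: 0, ty: 0)

let portraitCanvas = visibleFraction(naturalSize: natural, transform: rotateAndShift,
                                     renderSize: CGSize(width: 1080, height: 1920))
let landscapeCanvas = visibleFraction(naturalSize: natural, transform: rotateAndShift,
                                      renderSize: CGSize(width: 1920, height: 1080))
let noTranslation = visibleFraction(naturalSize: natural, transform: rotateOnly,
                                    renderSize: CGSize(width: 1080, height: 1920))
print(portraitCanvas, landscapeCanvas, noTranslation)
```

With these assumed values, the rotated frame fills a portrait canvas completely, is partly cut off on a landscape canvas, and lands entirely off-canvas when the rotation has no accompanying translation.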

We are getting really close; however, the problem now seems to be with changing renderSize. If I set it to anything other than the landscape size, I get this: