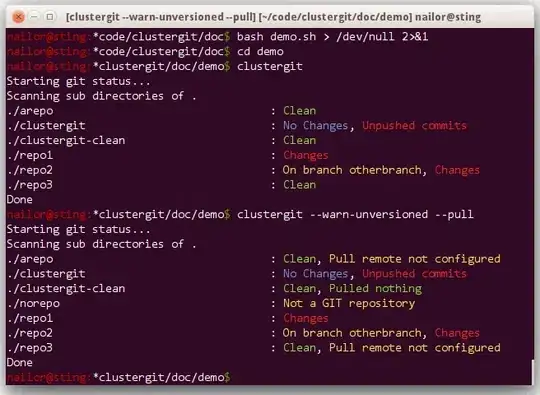

Question: Why does my CPU usage sit at ~30% when a blur is applied to an animated object, versus ~6% when no blur is applied?

Details:

I have a set of randomly generated items on a page that have a CSS animation assigned (in a CSS file) and randomly generated values for width, height, and importantly, blur, applied inline.

The styles in the CSS file look like this:

    animation-name: rise;
    animation-fill-mode: forwards;
    animation-timing-function: linear;
    animation-iteration-count: 1;
    -webkit-backface-visibility: hidden;
    -webkit-perspective: 1000;
    -webkit-transform: translate3d(0,0,0);
    transform: translateZ(0);

Width, height, and blur are applied inline via the style attribute:

    <div class="foo" style="width:99px;height:99px;
        filter:blur(2px);
        -webkit-filter:blur(2px) opacity(0.918866247870028);
        -moz-filter:blur(2px) opacity(0.918866247870028);
        -o-filter:blur(2px) opacity(0.918866247870028);
        -ms-filter:blur(2px) opacity(0.918866247870028);"></div>
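For anyone trying to reproduce the comparison, here is a minimal sketch of how such inline styles might be generated. It is not the page's actual code: the helper names `randomItemStyle` and `randomItemHtml`, the `withBlur` switch, the value ranges, and the choice to use the same filter list for the unprefixed and `-webkit-` properties are all assumptions made for illustration.

```javascript
// Hypothetical helper: builds an inline style string similar to the one above.
// Assumed ranges: 20-120px for size, 0-4px for blur, 0-1 for opacity.
function randomItemStyle({ withBlur = true, random = Math.random } = {}) {
  const size = Math.round(20 + random() * 100); // used for both width and height
  const blurPx = Math.round(random() * 4);      // blur radius in px
  const opacity = random();                     // 0 <= opacity < 1

  // With withBlur = false the blur() function is omitted entirely,
  // which allows comparing CPU usage with and without the blur.
  const filter = withBlur
    ? `blur(${blurPx}px) opacity(${opacity})`
    : `opacity(${opacity})`;

  return [
    `width:${size}px`,
    `height:${size}px`,
    `filter:${filter}`,
    `-webkit-filter:${filter}`,
  ].join(";") + ";";
}

// Hypothetical helper: markup for one animated item using the class from the question.
function randomItemHtml(options) {
  return `<div class="foo" style="${randomItemStyle(options)}"></div>`;
}
```

In a browser, the returned markup could be added with something like `container.insertAdjacentHTML("beforeend", randomItemHtml({ withBlur: false }))`, where `container` is whatever element holds the items. Generating the same number of items once with `withBlur: true` and once with `withBlur: false` keeps everything except the blur constant between the two measurements.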

With the blur enabled, my CPU usage is ~30%. When I disable the blur, CPU usage drops to ~6%.

What's happening here? Is Chrome only able to GPU-accelerate the animation when no blur is applied? If so, why?

Update 1:

The rise animation looks as follows:

    @keyframes rise {
        0% {
            transform: translateY(0px);
        }
        100% {
            transform: translateY(-1000px);
        }
    }