Context

We are building a Flux-based web app where the client (Flux/React/TypeScript) communicates with the server (C#) via websockets (not via HTTP GET).

When a client-side action is performed, we send a command request to the server over a websocket connection.

The server always responds quickly with an initial Start response (indicating whether it can execute the requested action), followed by one or more Progress responses (possibly several hundred, each containing progress information about the action being performed). When the action has completed on the server, the last Progress response has a progress fraction of 100% and an "OK" error status.
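To make the protocol concrete, here is a minimal sketch of how these responses could be typed on the client, assuming JSON messages. The field names (requestId, canExecute, fraction, status, result) are hypothetical and only illustrate the Start/Progress structure described above.

```typescript
// Hypothetical message shapes for the Start/Progress protocol.
// All field names are assumptions for illustration.
interface StartResponse {
  kind: "Start";
  requestId: number;
  canExecute: boolean; // whether the server can execute the requested action
}

interface ProgressResponse {
  kind: "Progress";
  requestId: number;
  fraction: number; // 0.0 .. 1.0, where 1.0 means 100%
  status: string; // "OK" on success
  result?: unknown; // e.g. the generated image, expected on the final response
}

type CommandResponse = StartResponse | ProgressResponse;

// The last response of a command: a Progress response at 100% with status "OK".
function isCompleted(response: CommandResponse): boolean {
  return response.kind === "Progress" && response.fraction >= 1 && response.status === "OK";
}

// A command refused in its Start response will never produce Progress responses.
function isRejected(response: CommandResponse): boolean {
  return response.kind === "Start" && !response.canExecute;
}
```

A discriminated union on kind lets the client handle both response types in one onMessage path while keeping the compiler aware of which fields exist.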

Note that some server actions take only 100 ms, while others can take up to 10 minutes (hence the Progress responses, which let the client show the user how the action is progressing).

In the client, we have:

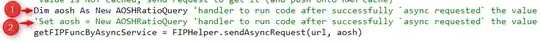

- a sendCommandRequest function which we call to send a command request to the server over the websocket

- a handler function handleCommandResponse (which is called in the websocket onMessage callback)
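A minimal sketch of how these two functions might look, assuming JSON messages, a per-request id to route responses, and a callback registry. The signatures, the SocketLike interface and the message fields are hypothetical, not the actual implementation.

```typescript
// Only the part of the browser WebSocket API this sketch needs.
interface SocketLike {
  send(data: string): void;
}

// Hypothetical response shape; field names are assumptions.
interface CommandResponse {
  kind: "Start" | "Progress";
  requestId: number;
  fraction?: number;
  status?: string;
  [key: string]: unknown;
}

type ResponseListener = (response: CommandResponse) => void;

let nextRequestId = 1;
const listeners = new Map<number, ResponseListener>();

// Sends one command request and remembers who wants its responses.
function sendCommandRequest(
  socket: SocketLike,
  command: string,
  args: Record<string, unknown>,
  onResponse: ResponseListener,
): number {
  const requestId = nextRequestId++;
  listeners.set(requestId, onResponse);
  socket.send(JSON.stringify({ requestId, command, args }));
  return requestId;
}

// Called from the websocket onmessage callback with the raw message text.
function handleCommandResponse(data: string): void {
  const response = JSON.parse(data) as CommandResponse;
  const listener = listeners.get(response.requestId);
  if (!listener) return; // unknown or already finished request
  listener(response);
  // Stop routing once the final Progress response (100%, "OK") has arrived.
  if (response.kind === "Progress" && response.fraction === 1 && response.status === "OK") {
    listeners.delete(response.requestId);
  }
}

// Browser wiring (not executed here):
// const socket = new WebSocket("wss://example.invalid/commands");
// socket.onmessage = (event) => handleCommandResponse(event.data as string);
```

Passing the socket in as a small interface keeps both functions testable without a real server connection.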

The question is: what is the best way to integrate this server communication into a Flux-based application? Where should we make the calls that send over the websocket, and how should we get from the websocket callback back into the Flux chain?

Proposal

Let's say we change a visual-effects parameter in the client GUI (a floating-point number entered in a text field), and this triggers an action on the server that generates a new image. This action takes 1 second to execute on the server.

In Flux, the GUI handler sends a Flux action to the dispatcher, which calls the callbacks of all stores registered with it. The stores update their data based on the action payload and notify the components through change-event callbacks, which call setState with the new store data to trigger a re-render.
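The flow above can be sketched with a simplified, hand-rolled dispatcher and store. This is not the facebook/flux package; the action type SET_EFFECT_PARAMETER and the store shape are hypothetical, and a plain variable stands in for React component state.

```typescript
type Action = { type: "SET_EFFECT_PARAMETER"; value: number };

class Dispatcher {
  private callbacks: Array<(action: Action) => void> = [];
  register(callback: (action: Action) => void): void {
    this.callbacks.push(callback);
  }
  // Calls every registered store callback with the action.
  dispatch(action: Action): void {
    for (const callback of this.callbacks) callback(action);
  }
}

class EffectsStore {
  private parameter = 0;
  private changeListeners: Array<() => void> = [];

  constructor(dispatcher: Dispatcher) {
    dispatcher.register((action) => {
      if (action.type === "SET_EFFECT_PARAMETER") {
        this.parameter = action.value; // update store data from the payload
        this.emitChange(); // notify listening components
      }
    });
  }

  getParameter(): number {
    return this.parameter;
  }
  addChangeListener(listener: () => void): void {
    this.changeListeners.push(listener);
  }
  private emitChange(): void {
    for (const listener of this.changeListeners) listener();
  }
}

// Wiring: the change listener plays the role of a component calling setState.
const dispatcher = new Dispatcher();
const store = new EffectsStore(dispatcher);
let renderedParameter = store.getParameter();
store.addChangeListener(() => {
  renderedParameter = store.getParameter(); // stands in for this.setState(...)
});

// GUI handler for the text field:
dispatcher.dispatch({ type: "SET_EFFECT_PARAMETER", value: 0.75 });
```

Data flows in one direction only: GUI handler, dispatcher, store, change listener, re-render.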

We could let the stores send command requests over the websocket to the server at the moment they change their data (for example, when they store the new parameter value). At that point the store has the new parameter value, while the image is still the last one received (the result of a previous command).
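A minimal sketch of this proposal, in which the store itself sends the command request as a side effect of handling the action. SocketLike, the JSON message and the generateImage command name are hypothetical; the store's handleAction method would be registered with the dispatcher in a real app.

```typescript
// Only the part of the WebSocket API this sketch needs.
interface SocketLike {
  send(data: string): void;
}

type Action = { type: "SET_EFFECT_PARAMETER"; value: number };

class EffectsStore {
  parameter = 0;
  image: string | null = null; // last image received from the server
  private socket: SocketLike;

  constructor(socket: SocketLike) {
    this.socket = socket;
  }

  // Store callback for the dispatcher.
  handleAction(action: Action): void {
    if (action.type === "SET_EFFECT_PARAMETER") {
      this.parameter = action.value;
      // Side effect inside the store: ask the server for a new image.
      this.socket.send(JSON.stringify({ command: "generateImage", parameter: action.value }));
      // this.image is left untouched: it still belongs to the previous parameter.
    }
  }
}
```

Right after handleAction returns, parameter and image describe two different states, which is the situation the next paragraphs build on.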

From that moment on, the server starts sending Progress responses back to the client, which arrive in the websocket onMessage callback, and the final Progress response contains the new image (about 1 second later).

In the websocket onMessage callback, we could then send a second Flux action to the dispatcher, with the newly received image as its payload. This causes the stores to update their data with the new image, which in turn causes the listening components to re-render.
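A sketch of the websocket side of this proposal: the onMessage handler ignores Start and intermediate Progress responses and dispatches a second action only when the final response arrives. The action names, the image field on the response and the single-store dispatch function are hypothetical simplifications.

```typescript
type Action =
  | { type: "SET_EFFECT_PARAMETER"; value: number }
  | { type: "IMAGE_RECEIVED"; image: string };

// Loose shape for any incoming message; field names are assumptions.
interface IncomingResponse {
  kind: string;
  fraction?: number;
  status?: string;
  image?: string;
}

const storeState = { parameter: 0, image: "old-image" };

// Store callback, as it would be registered with the dispatcher.
function effectsStoreCallback(action: Action): void {
  switch (action.type) {
    case "SET_EFFECT_PARAMETER":
      storeState.parameter = action.value;
      break;
    case "IMAGE_RECEIVED":
      storeState.image = action.image;
      break;
  }
}

// Stand-in for dispatcher.dispatch with a single registered store.
function dispatch(action: Action): void {
  effectsStoreCallback(action);
}

// Body of the websocket onmessage callback.
function onMessage(data: string): void {
  const response = JSON.parse(data) as IncomingResponse;
  const done = response.kind === "Progress" && response.fraction === 1 && response.status === "OK";
  if (done && response.image !== undefined) {
    // Second Flux action: the result from the server enters the Flux chain here.
    dispatch({ type: "IMAGE_RECEIVED", image: response.image });
  }
}
```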

The problems with this approach are:

- the Flux store can hold partially updated data: as long as the server has not sent back the final result, the store has the new parameter but still the old image

- we now need 2 Flux actions: one used by the GUI to signal that the user made a change, and one used by the websocket callback to signal that a result arrived

- if something goes wrong on the server side, the new parameter will already have been set in the Flux store, but we will never receive a new image, so the data in the store becomes inconsistent
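These problems can be made concrete with a small state trace, assuming the image records which parameter value it was generated for. The StoreState shape, the helper names and the "ERROR" status value are hypothetical and only serve to show when the store is out of sync.

```typescript
interface StoreState {
  parameter: number;
  image: { forParameter: number } | null;
}

// Consistent only if the displayed image matches the current parameter.
function isConsistent(state: StoreState): boolean {
  return state.image !== null && state.image.forParameter === state.parameter;
}

// First action (from the GUI): the parameter changes immediately.
function applyParameterChange(state: StoreState, value: number): StoreState {
  return { ...state, parameter: value };
}

// Second action (from onMessage): only has an effect on a successful final response.
function applyServerResponse(state: StoreState, status: string): StoreState {
  return status === "OK" ? { ...state, image: { forParameter: state.parameter } } : state;
}

const initial: StoreState = { parameter: 0.25, image: { forParameter: 0.25 } };
const pending = applyParameterChange(initial, 0.5); // request in flight: partially updated
const failed = applyServerResponse(pending, "ERROR"); // server failed: stays out of sync
const succeeded = applyServerResponse(pending, "OK"); // normal completion
```

Only initial and succeeded are consistent; pending shows the partially updated store, and failed shows that it never recovers without extra handling.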

Are there better ways to fit server communication into the Flux workflow? Any advice or best practices?