Consider your dataframe a looks like below.

+----+

|col1|

+----+

| v1|

| v2|

+----+

Consider your dataframe b looks like below.

+----+

|col1|

+----+

| v2|

+----+

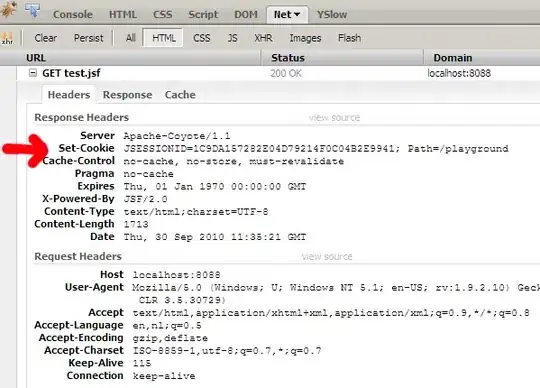

APPROACH 1:

-------------------

You can use dataframe's join method and use the type of join as left_anti to find out the values that are in dataframe a but not in dataframe b. The code is given below :

a.as('a).join(b.as('b),$"a.col1" === $"b.col1","left_anti").show()

Please find the result below :

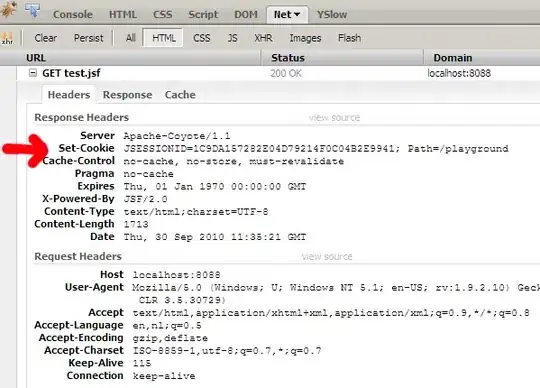

APPROACH 2:

-------------------

You can use sql which is similar to Sql server/Oracle etc to do this. For this, first you have to register your dataframe as temp table (which will reside in spark's memory) and then write the sql on top of that table.

a.registerTempTable("table_a")

b.registerTempTable("table_b")

spark.sql("select * from table_a a where not exists(select 1 from table_b b where a.col1=b.col1)").show()

Please find the result below :