I have a question about Entity Framework query execution performance.

Schema:

I have a table structure like this:

CREATE TABLE [dbo].[DataLogger]

(

[ID] [bigint] IDENTITY(1,1) NOT NULL,

[ProjectID] [bigint] NULL,

CONSTRAINT [PrimaryKey1] PRIMARY KEY CLUSTERED ( [ID] ASC )

)

CREATE TABLE [dbo].[DCDistributionBox]

(

[ID] [bigint] IDENTITY(1,1) NOT NULL,

[DataLoggerID] [bigint] NOT NULL,

CONSTRAINT [PrimaryKey2] PRIMARY KEY CLUSTERED ( [ID] ASC )

)

ALTER TABLE [dbo].[DCDistributionBox]

ADD CONSTRAINT [FK_DCDistributionBox_DataLogger]

FOREIGN KEY([DataLoggerID]) REFERENCES [dbo].[DataLogger] ([ID])

CREATE TABLE [dbo].[DCString]

(

[ID] [bigint] IDENTITY(1,1) NOT NULL,

[DCDistributionBoxID] [bigint] NOT NULL,

[CurrentMPP] [decimal](18, 2) NULL,

CONSTRAINT [PrimaryKey3] PRIMARY KEY CLUSTERED ( [ID] ASC )

)

ALTER TABLE [dbo].[DCString]

ADD CONSTRAINT [FK_DCString_DCDistributionBox]

FOREIGN KEY([DCDistributionBoxID]) REFERENCES [dbo].[DCDistributionBox] ([ID])

CREATE TABLE [dbo].[StringData]

(

[DCStringID] [bigint] NOT NULL,

[TimeStamp] [datetime] NOT NULL,

[DCCurrent] [decimal](18, 2) NULL,

CONSTRAINT [PrimaryKey4] PRIMARY KEY CLUSTERED ( [TimeStamp] DESC, [DCStringID] ASC)

)

CREATE NONCLUSTERED INDEX [TimeStamp_DCCurrent-NonClusteredIndex]

ON [dbo].[StringData] ([DCStringID] ASC, [TimeStamp] ASC)

INCLUDE ([DCCurrent])

Standard indexes on the foreign keys also exist (I don't want to list them all for space reasons).

The [StringData] table as has following storage stats:

- Data space: 26,901.86 MB

- Row count: 131,827,749

- Partitioned: true

- Partition count: 62

Usage:

I now want to group the data in the [StringData] table and do some aggregation.

I created an Entity Framework query (detailed infos to the query can be found here):

var compareData = model.StringDatas

.AsNoTracking()

.Where(p => p.DCString.DCDistributionBox.DataLogger.ProjectID == projectID && p.TimeStamp >= fromDate && p.TimeStamp < tillDate)

.Select(d => new

{

TimeStamp = d.TimeStamp,

DCCurrentMpp = d.DCCurrent / d.DCString.CurrentMPP

})

.GroupBy(d => DbFunctions.AddMinutes(DateTime.MinValue, DbFunctions.DiffMinutes(DateTime.MinValue, d.TimeStamp) / minuteInterval * minuteInterval))

.Select(d => new

{

TimeStamp = d.Key,

DCCurrentMppMin = d.Min(v => v.DCCurrentMpp),

DCCurrentMppMax = d.Max(v => v.DCCurrentMpp),

DCCurrentMppAvg = d.Average(v => v.DCCurrentMpp),

DCCurrentMppStDev = DbFunctions.StandardDeviationP(d.Select(v => v.DCCurrentMpp))

})

.ToList();

The excecution timespan is exceptional long!?

- Execution result: 92rows

- Execution time: ~16000ms

Attempts:

I now took a look into the Entity Framework generated SQL query and looks like this:

DECLARE @p__linq__4 DATETIME = 0;

DECLARE @p__linq__3 DATETIME = 0;

DECLARE @p__linq__5 INT = 15;

DECLARE @p__linq__6 INT = 15;

DECLARE @p__linq__0 BIGINT = 20827;

DECLARE @p__linq__1 DATETIME = '06.02.2016 00:00:00';

DECLARE @p__linq__2 DATETIME = '07.02.2016 00:00:00';

SELECT

1 AS [C1],

[GroupBy1].[K1] AS [C2],

[GroupBy1].[A1] AS [C3],

[GroupBy1].[A2] AS [C4],

[GroupBy1].[A3] AS [C5],

[GroupBy1].[A4] AS [C6]

FROM ( SELECT

[Project1].[K1] AS [K1],

MIN([Project1].[A1]) AS [A1],

MAX([Project1].[A2]) AS [A2],

AVG([Project1].[A3]) AS [A3],

STDEVP([Project1].[A4]) AS [A4]

FROM ( SELECT

DATEADD (minute, ((DATEDIFF (minute, @p__linq__4, [Project1].[TimeStamp])) / @p__linq__5) * @p__linq__6, @p__linq__3) AS [K1],

[Project1].[C1] AS [A1],

[Project1].[C1] AS [A2],

[Project1].[C1] AS [A3],

[Project1].[C1] AS [A4]

FROM ( SELECT

[Extent1].[TimeStamp] AS [TimeStamp],

[Extent1].[DCCurrent] / [Extent2].[CurrentMPP] AS [C1]

FROM [dbo].[StringData] AS [Extent1]

INNER JOIN [dbo].[DCString] AS [Extent2] ON [Extent1].[DCStringID] = [Extent2].[ID]

INNER JOIN [dbo].[DCDistributionBox] AS [Extent3] ON [Extent2].[DCDistributionBoxID] = [Extent3].[ID]

INNER JOIN [dbo].[DataLogger] AS [Extent4] ON [Extent3].[DataLoggerID] = [Extent4].[ID]

WHERE (([Extent4].[ProjectID] = @p__linq__0) OR (([Extent4].[ProjectID] IS NULL) AND (@p__linq__0 IS NULL))) AND ([Extent1].[TimeStamp] >= @p__linq__1) AND ([Extent1].[TimeStamp] < @p__linq__2)

) AS [Project1]

) AS [Project1]

GROUP BY [K1]

) AS [GroupBy1]

I copied this SQL query into SSMS on the same machine, connected with same connection string as the Entity Framework.

The result is a very much improved performance:

- Execution result: 92rows

- Execution time: 517ms

I also do some loop runing test and the result is strange. The test looks like this

for (int i = 0; i < 50; i++)

{

DateTime begin = DateTime.UtcNow;

[...query...]

TimeSpan excecutionTimeSpan = DateTime.UtcNow - begin;

Debug.WriteLine("{0}th run: {1}", i, excecutionTimeSpan.ToString());

}

The result is very different and looks random(?):

0th run: 00:00:11.0618580

1th run: 00:00:11.3339467

2th run: 00:00:10.0000676

3th run: 00:00:10.1508140

4th run: 00:00:09.2041939

5th run: 00:00:07.6710321

6th run: 00:00:10.3386312

7th run: 00:00:17.3422765

8th run: 00:00:13.8620557

9th run: 00:00:14.9041528

10th run: 00:00:12.7772906

11th run: 00:00:17.0170235

12th run: 00:00:14.7773750

Question:

Why is Entity Framework query execution so slow? The resulting row count is really low and the raw SQL query shows a very fast performance.

Update 1:

I take care that its not a MetaContext or Model creation delay. Some other queries are executed on the same Model instance right before with good performance.

Update 2 (related to the answer of @x0007me):

Thanks for the hint but this can be eliminated by changing the model settings like this:

modelContext.Configuration.UseDatabaseNullSemantics = true;

The EF generated SQL is now:

SELECT

1 AS [C1],

[GroupBy1].[K1] AS [C2],

[GroupBy1].[A1] AS [C3],

[GroupBy1].[A2] AS [C4],

[GroupBy1].[A3] AS [C5],

[GroupBy1].[A4] AS [C6]

FROM ( SELECT

[Project1].[K1] AS [K1],

MIN([Project1].[A1]) AS [A1],

MAX([Project1].[A2]) AS [A2],

AVG([Project1].[A3]) AS [A3],

STDEVP([Project1].[A4]) AS [A4]

FROM ( SELECT

DATEADD (minute, ((DATEDIFF (minute, @p__linq__4, [Project1].[TimeStamp])) / @p__linq__5) * @p__linq__6, @p__linq__3) AS [K1],

[Project1].[C1] AS [A1],

[Project1].[C1] AS [A2],

[Project1].[C1] AS [A3],

[Project1].[C1] AS [A4]

FROM ( SELECT

[Extent1].[TimeStamp] AS [TimeStamp],

[Extent1].[DCCurrent] / [Extent2].[CurrentMPP] AS [C1]

FROM [dbo].[StringData] AS [Extent1]

INNER JOIN [dbo].[DCString] AS [Extent2] ON [Extent1].[DCStringID] = [Extent2].[ID]

INNER JOIN [dbo].[DCDistributionBox] AS [Extent3] ON [Extent2].[DCDistributionBoxID] = [Extent3].[ID]

INNER JOIN [dbo].[DataLogger] AS [Extent4] ON [Extent3].[DataLoggerID] = [Extent4].[ID]

WHERE ([Extent4].[ProjectID] = @p__linq__0) AND ([Extent1].[TimeStamp] >= @p__linq__1) AND ([Extent1].[TimeStamp] < @p__linq__2)

) AS [Project1]

) AS [Project1]

GROUP BY [K1]

) AS [GroupBy1]

So you can see the problem you described is now solved, but the execution time does not change.

Also, as you can see in the schema and the raw execution time, I used optimized structure with high optimized indexer.

Update 3 (related to the answer of @Vladimir Baranov):

I don't see why this can be related to query plan caching. Because in the MSDN is clearly descripted that the EF6 make use of query plan caching.

A simple test proof that the huge excecution time differenz is not related to the query plan caching (phseudo code):

using(var modelContext = new ModelContext())

{

modelContext.Query(); //1th run activates caching

modelContext.Query(); //2th used cached plan

}

As the result, both queries run with the same excecution time.

Update 4 (related to the answer of @bubi):

I tried to run the query that is generated by the EF as you descripted it:

int result = model.Database.ExecuteSqlCommand(@"SELECT

1 AS [C1],

[GroupBy1].[K1] AS [C2],

[GroupBy1].[A1] AS [C3],

[GroupBy1].[A2] AS [C4],

[GroupBy1].[A3] AS [C5],

[GroupBy1].[A4] AS [C6]

FROM ( SELECT

[Project1].[K1] AS [K1],

MIN([Project1].[A1]) AS [A1],

MAX([Project1].[A2]) AS [A2],

AVG([Project1].[A3]) AS [A3],

STDEVP([Project1].[A4]) AS [A4]

FROM ( SELECT

DATEADD (minute, ((DATEDIFF (minute, 0, [Project1].[TimeStamp])) / @p__linq__5) * @p__linq__6, 0) AS [K1],

[Project1].[C1] AS [A1],

[Project1].[C1] AS [A2],

[Project1].[C1] AS [A3],

[Project1].[C1] AS [A4]

FROM ( SELECT

[Extent1].[TimeStamp] AS [TimeStamp],

[Extent1].[DCCurrent] / [Extent2].[CurrentMPP] AS [C1]

FROM [dbo].[StringData] AS [Extent1]

INNER JOIN [dbo].[DCString] AS [Extent2] ON [Extent1].[DCStringID] = [Extent2].[ID]

INNER JOIN [dbo].[DCDistributionBox] AS [Extent3] ON [Extent2].[DCDistributionBoxID] = [Extent3].[ID]

INNER JOIN [dbo].[DataLogger] AS [Extent4] ON [Extent3].[DataLoggerID] = [Extent4].[ID]

WHERE ([Extent4].[ProjectID] = @p__linq__0) AND ([Extent1].[TimeStamp] >= @p__linq__1) AND ([Extent1].[TimeStamp] < @p__linq__2)

) AS [Project1]

) AS [Project1]

GROUP BY [K1]

) AS [GroupBy1]",

new SqlParameter("p__linq__0", 20827),

new SqlParameter("p__linq__1", fromDate),

new SqlParameter("p__linq__2", tillDate),

new SqlParameter("p__linq__5", 15),

new SqlParameter("p__linq__6", 15));

- Execution result: 92

- Execution time: ~16000ms

It took exact as long as the normal EF query!?

Update 5 (related to the answer of @vittore):

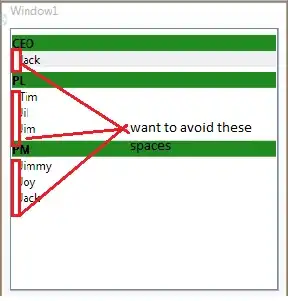

I create a traced call tree, maybe it helps:

Update 6 (related to the answer of @usr):

I created two showplan XML via SQL Server Profiler.

Update 7 (related to the comments of @VladimirBaranov):

I now run some more test case related to your comments.

First I eleminate time taking order operations by using a new computed column and a matching INDEXER. This reduce the perfomance lag related to DATEADD(MINUTE, DATEDIFF(MINUTE, 0, [TimeStamp] ) / 15* 15, 0). Detail for how and why you can find here.

The Result look s like this:

Pure EntityFramework query:

for (int i = 0; i < 3; i++)

{

DateTime begin = DateTime.UtcNow;

var result = model.StringDatas

.AsNoTracking()

.Where(p => p.DCString.DCDistributionBox.DataLogger.ProjectID == projectID && p.TimeStamp15Minutes >= fromDate && p.TimeStamp15Minutes < tillDate)

.Select(d => new

{

TimeStamp = d.TimeStamp15Minutes,

DCCurrentMpp = d.DCCurrent / d.DCString.CurrentMPP

})

.GroupBy(d => d.TimeStamp)

.Select(d => new

{

TimeStamp = d.Key,

DCCurrentMppMin = d.Min(v => v.DCCurrentMpp),

DCCurrentMppMax = d.Max(v => v.DCCurrentMpp),

DCCurrentMppAvg = d.Average(v => v.DCCurrentMpp),

DCCurrentMppStDev = DbFunctions.StandardDeviationP(d.Select(v => v.DCCurrentMpp))

})

.ToList();

TimeSpan excecutionTimeSpan = DateTime.UtcNow - begin;

Debug.WriteLine("{0}th run pure EF: {1}", i, excecutionTimeSpan.ToString());

}

0th run pure EF: 00:00:12.6460624

1th run pure EF: 00:00:11.0258393

2th run pure EF: 00:00:08.4171044

I now used the EF generated SQL as a SQL query:

for (int i = 0; i < 3; i++)

{

DateTime begin = DateTime.UtcNow;

int result = model.Database.ExecuteSqlCommand(@"SELECT

1 AS [C1],

[GroupBy1].[K1] AS [TimeStamp15Minutes],

[GroupBy1].[A1] AS [C2],

[GroupBy1].[A2] AS [C3],

[GroupBy1].[A3] AS [C4],

[GroupBy1].[A4] AS [C5]

FROM ( SELECT

[Project1].[TimeStamp15Minutes] AS [K1],

MIN([Project1].[C1]) AS [A1],

MAX([Project1].[C1]) AS [A2],

AVG([Project1].[C1]) AS [A3],

STDEVP([Project1].[C1]) AS [A4]

FROM ( SELECT

[Extent1].[TimeStamp15Minutes] AS [TimeStamp15Minutes],

[Extent1].[DCCurrent] / [Extent2].[CurrentMPP] AS [C1]

FROM [dbo].[StringData] AS [Extent1]

INNER JOIN [dbo].[DCString] AS [Extent2] ON [Extent1].[DCStringID] = [Extent2].[ID]

INNER JOIN [dbo].[DCDistributionBox] AS [Extent3] ON [Extent2].[DCDistributionBoxID] = [Extent3].[ID]

INNER JOIN [dbo].[DataLogger] AS [Extent4] ON [Extent3].[DataLoggerID] = [Extent4].[ID]

WHERE ([Extent4].[ProjectID] = @p__linq__0) AND ([Extent1].[TimeStamp15Minutes] >= @p__linq__1) AND ([Extent1].[TimeStamp15Minutes] < @p__linq__2)

) AS [Project1]

GROUP BY [Project1].[TimeStamp15Minutes]

) AS [GroupBy1];",

new SqlParameter("p__linq__0", 20827),

new SqlParameter("p__linq__1", fromDate),

new SqlParameter("p__linq__2", tillDate));

TimeSpan excecutionTimeSpan = DateTime.UtcNow - begin;

Debug.WriteLine("{0}th run: {1}", i, excecutionTimeSpan.ToString());

}

0th run: 00:00:00.8381200

1th run: 00:00:00.6920736

2th run: 00:00:00.7081006

and with OPTION(RECOMPILE):

for (int i = 0; i < 3; i++)

{

DateTime begin = DateTime.UtcNow;

int result = model.Database.ExecuteSqlCommand(@"SELECT

1 AS [C1],

[GroupBy1].[K1] AS [TimeStamp15Minutes],

[GroupBy1].[A1] AS [C2],

[GroupBy1].[A2] AS [C3],

[GroupBy1].[A3] AS [C4],

[GroupBy1].[A4] AS [C5]

FROM ( SELECT

[Project1].[TimeStamp15Minutes] AS [K1],

MIN([Project1].[C1]) AS [A1],

MAX([Project1].[C1]) AS [A2],

AVG([Project1].[C1]) AS [A3],

STDEVP([Project1].[C1]) AS [A4]

FROM ( SELECT

[Extent1].[TimeStamp15Minutes] AS [TimeStamp15Minutes],

[Extent1].[DCCurrent] / [Extent2].[CurrentMPP] AS [C1]

FROM [dbo].[StringData] AS [Extent1]

INNER JOIN [dbo].[DCString] AS [Extent2] ON [Extent1].[DCStringID] = [Extent2].[ID]

INNER JOIN [dbo].[DCDistributionBox] AS [Extent3] ON [Extent2].[DCDistributionBoxID] = [Extent3].[ID]

INNER JOIN [dbo].[DataLogger] AS [Extent4] ON [Extent3].[DataLoggerID] = [Extent4].[ID]

WHERE ([Extent4].[ProjectID] = @p__linq__0) AND ([Extent1].[TimeStamp15Minutes] >= @p__linq__1) AND ([Extent1].[TimeStamp15Minutes] < @p__linq__2)

) AS [Project1]

GROUP BY [Project1].[TimeStamp15Minutes]

) AS [GroupBy1]

OPTION(RECOMPILE);",

new SqlParameter("p__linq__0", 20827),

new SqlParameter("p__linq__1", fromDate),

new SqlParameter("p__linq__2", tillDate));

TimeSpan excecutionTimeSpan = DateTime.UtcNow - begin;

Debug.WriteLine("{0}th run: {1}", i, excecutionTimeSpan.ToString());

}

0th run with RECOMPILE: 00:00:00.8260932

1th run with RECOMPILE: 00:00:00.9139730

2th run with RECOMPILE: 00:00:01.0680665

Same SQL query excecuted in SSMS (without RECOMPILE):

00:00:01.105

Same SQL query excecuted in SSMS (with RECOMPILE):

00:00:00.902

I hope this are all values you needed.