A recent question, whether compilers are allowed to replace floating-point division with floating-point multiplication, inspired me to ask this question.

Under the stringent requirement that the results after code transformation shall be bit-wise identical to the actual division operation, it is trivial to see that for binary IEEE-754 arithmetic, this is possible for divisors that are a power of two. As long as the reciprocal of the divisor is representable, multiplying by the reciprocal of the divisor delivers results identical to the division. For example, multiplication by 0.5 can replace division by 2.0.
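
As a minimal sketch of this special case, the following C helpers check whether a divisor is a power of two with a representable reciprocal, and compare division against multiplication by the reciprocal bit for bit. The names `has_exact_reciprocal`, `float_bits`, and `same_as_division` are made up for illustration, and the code assumes `float` maps to IEEE-754 binary32 evaluated without excess precision.

```c
#include <math.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* True if y is a finite, nonzero power of two whose reciprocal is
   also representable as a float (illustrative helper) */
bool has_exact_reciprocal (float y)
{
    int e;
    if (!isfinite (y) || (y == 0.0f)) return false;
    /* frexpf returns a significand in [0.5, 1); exactly 0.5 means
       that |y| is a power of two */
    if (frexpf (fabsf (y), &e) != 0.5f) return false;
    /* for very small |y| the reciprocal overflows to infinity */
    return isfinite (1.0f / y);
}

/* Return the bit pattern of a float */
uint32_t float_bits (float f)
{
    uint32_t u;
    memcpy (&u, &f, sizeof u);
    return u;
}

/* Compare x / y against x * (1 / y) bit for bit */
bool same_as_division (float x, float y)
{
    float rc = 1.0f / y;
    return float_bits (x / y) == float_bits (x * rc);
}
```

For example, `same_as_division (x, 2.0f)` should hold for every non-NaN `x`, since both expressions round the same real number once.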

One then wonders for what other divisors such replacements work, assuming we allow any short instruction sequence that replaces division and runs significantly faster, while delivering bit-identical results. In particular, allow fused multiply-add (FMA) operations in addition to plain multiplication. In comments I pointed to the following relevant paper:

Nicolas Brisebarre, Jean-Michel Muller, and Saurabh Kumar Raina. Accelerating correctly rounded floating-point division when the divisor is known in advance. IEEE Transactions on Computers, Vol. 53, No. 8, August 2004, pp. 1069-1072.

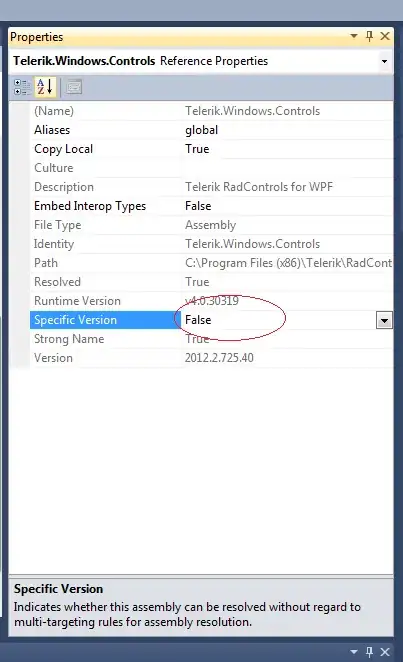

The technique advocated by the authors of the paper precomputes the reciprocal of the divisor y as a normalized head-tail pair zh:zl as follows: zh = 1 / y, zl = fma (-y, zh, 1) / y. Later, the division q = x / y is then computed as q = fma (zh, x, zl * x). The paper derives various conditions that divisor y must satisfy for this algorithm to work. As one readily observes, this algorithm has problems with infinities and zero when the signs of head and tail differ. More importantly, it will fail to deliver correct results for dividends x that are very small in magnitude, because computation of the quotient tail, zl * x, suffers from underflow.

The paper also makes a passing reference to an alternative FMA-based division algorithm, pioneered by Peter Markstein when he was at IBM. The relevant reference is:

P. W. Markstein. Computation of elementary functions on the IBM RISC System/6000 processor. IBM Journal of Research & Development, Vol. 34, No. 1, January 1990, pp. 111-119

In Markstein's algorithm, one first computes a reciprocal rc, from which an initial quotient q = x * rc is formed. Then, the remainder of the division is computed accurately with an FMA as r = fma (-y, q, x), and an improved, more accurate quotient is finally computed as q = fma (r, rc, q).

This algorithm also has issues for x that are zeroes or infinities (easily worked around with appropriate conditional execution), but exhaustive testing using IEEE-754 single-precision float data shows that it delivers the correct quotient across all possible dividends x for many divisors y, among these many small integers. This C code implements it:

    /* precompute reciprocal */
    rc = 1.0f / y;

    /* compute quotient q=x/y */
    q = x * rc;
    if ((x != 0) && (!isinf(x))) {
        r = fmaf (-y, q, x);
        q = fmaf (r, rc, q);
    }

On most processor architectures, this should translate into a branchless sequence of instructions, using either predication, conditional moves, or select-type instructions. To give a concrete example: For division by 3.0f, the nvcc compiler of CUDA 7.5 generates the following machine code for a Kepler-class GPU:

        LDG.E R5, [R2];                        // load x
        FSETP.NEU.AND P0, PT, |R5|, +INF , PT; // pred0 = fabsf(x) != INF
        FMUL32I R2, R5, 0.3333333432674408;    // q = x * (1.0f/3.0f)
        FSETP.NEU.AND P0, PT, R5, RZ, P0;      // pred0 = (x != 0.0f) && (fabsf(x) != INF)
        FFMA R5, R2, -3, R5;                   // r = fmaf (q, -3.0f, x);
        MOV R4, R2;                            // q
    @P0 FFMA R4, R5, c[0x2][0x0], R2;          // if (pred0) q = fmaf (r, (1.0f/3.0f), q)
        ST.E [R6], R4;                         // store q

For my experiments, I wrote the tiny C test program shown below that steps through integer divisors in increasing order and for each of them exhaustively tests the above code sequence against the proper division. It prints a list of the divisors that passed this exhaustive test. Partial output looks as follows:

    PASS: 1, 2, 3, 4, 5, 7, 8, 9, 11, 13, 15, 16, 17, 19, 21, 23, 25, 27, 29, 31, 32, 33, 35, 37, 39, 41, 43, 45, 47, 49, 51, 53, 55, 57, 59, 61, 63, 64, 65, 67, 69,

To incorporate the replacement algorithm into a compiler as an optimization, a whitelist of divisors to which the above code transformation can safely be applied is impractical. The output of the program so far (at a rate of about one result per minute) suggests that the fast code works correctly across all possible encodings of x for those divisors y that are odd integers or are powers of two. Anecdotal evidence, not a proof, of course.

What set of mathematical conditions can determine a priori whether the transformation of division into the above code sequence is safe? Answers can assume that all the floating-point operations are performed in the default rounding mode, "round to nearest, ties to even".

    #include <stdlib.h>
    #include <stdio.h>
    #include <math.h>

    int main (void)
    {
        float r, q, x, y, rc;
        volatile union {
            float f;
            unsigned int i;
        } arg, res, ref;
        int err;

        y = 1.0f;
        printf ("PASS: ");
        while (1) {
            /* precompute reciprocal */
            rc = 1.0f / y;

            /* step through all 2**32 encodings of the dividend x */
            arg.i = 0x80000000;
            err = 0;
            do {
                /* do the division, fast */
                x = arg.f;
                q = x * rc;
                if ((x != 0) && (!isinf(x))) {
                    r = fmaf (-y, q, x);
                    q = fmaf (r, rc, q);
                }
                res.f = q;
                /* compute the reference, slowly */
                ref.f = x / y;

                if (res.i != ref.i) {
                    err = 1;
                    break;
                }
                arg.i--;
            } while (arg.i != 0x80000000);

            if (!err) {
                printf ("%g, ", y);
                fflush (stdout); /* show each result even if stdout is buffered */
            }
            y += 1.0f;
        }
        return EXIT_SUCCESS; /* not reached */
    }