The number 0.5 was not "arrived at" at all; the author just took an arbitrary number for the purpose of illustration.

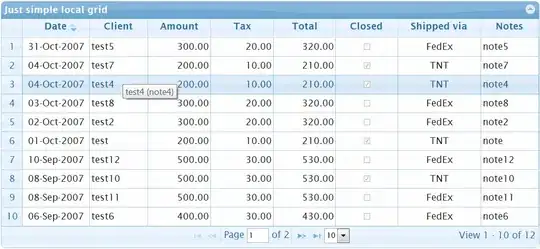

Any n-gram language model consists of two things: a vocabulary and a set of transition probabilities. The model "does not care" how these probabilities were derived. The only requirement is that they are self-consistent: for any context (for a trigram model, the two preceding words), the probabilities of all possible continuations, including STOP, sum to 1. This holds for the model above, e.g. p(runs | the, dog) + p(STOP | the, dog) = 1.
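To make the self-consistency requirement concrete, here is a minimal sketch that stores a trigram model as a mapping from a two-word context to a distribution over the next word, then checks that every distribution sums to 1. The parameter values are an assumption: they are reconstructed from the sentence probabilities quoted in this answer, and the `*` start-padding symbol and the `is_self_consistent` helper are hypothetical names chosen for illustration.

```python
from math import isclose

START, STOP = "*", "STOP"

# Hypothetical trigram model: q[(u, v)][w] = p(w | u, v).
# Values are reconstructed from the probabilities quoted in this answer;
# the actual screenshot may differ.
q = {
    (START, START): {"the": 1.0},
    (START, "the"): {"dog": 0.5, STOP: 0.5},
    ("the", "dog"): {"runs": 0.5, STOP: 0.5},
    ("dog", "runs"): {STOP: 1.0},
}

def is_self_consistent(model):
    """Return True if, for every context, the next-word probabilities sum to 1."""
    return all(isclose(sum(dist.values()), 1.0) for dist in model.values())

print(is_self_consistent(q))
```

Note that the check says nothing about where the numbers came from; any values that pass it define a valid model.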

Of course, in practical applications we are interested in how to "learn" the model parameters from a text corpus. By multiplying the transition probabilities along each path from the start to STOP, you can calculate that your particular language model generates the following texts:

- "the", with probability 0.5
- "the dog", with probability 0.25
- "the dog runs", with probability 0.25
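The calculation above can be sketched as an exhaustive walk over the model using the chain rule, p(w1 ... wn STOP) = p(w1 | *, *) · p(w2 | *, w1) · ... · p(STOP | w(n-1), wn). As before, the parameters are an assumption reconstructed from the numbers in this answer, and `generate_all` is a hypothetical helper; `max_len` only guards against models with cycles, which this one does not have.

```python
START, STOP = "*", "STOP"

# Hypothetical trigram model reconstructed from the answer's numbers:
# q[(u, v)][w] = p(w | u, v).
q = {
    (START, START): {"the": 1.0},
    (START, "the"): {"dog": 0.5, STOP: 0.5},
    ("the", "dog"): {"runs": 0.5, STOP: 0.5},
    ("dog", "runs"): {STOP: 1.0},
}

def generate_all(model, max_len=10):
    """Enumerate every sentence the model can produce, with its probability."""
    results = {}
    # Each stack entry: (last two symbols, words so far, probability so far).
    stack = [((START, START), [], 1.0)]
    while stack:
        context, words, prob = stack.pop()
        for w, p in model.get(context, {}).items():
            if p == 0:
                continue
            if w == STOP:
                # Reaching STOP completes a sentence.
                results[" ".join(words)] = prob * p
            elif len(words) < max_len:
                # Shift the context window and extend the partial sentence.
                stack.append(((context[1], w), words + [w], prob * p))
    return results

for sentence, p in generate_all(q).items():
    print(f"{sentence!r}: {p}")
```

Because the model is self-consistent, the probabilities of all generated sentences add up to 1.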

From this observation, we can "reverse-engineer" the training corpus: it might have consisted of 4 sentences:

the

the

the dog

the dog runs

If you count all the trigrams in this corpus and normalize the counts separately for each two-word context, you will see that the resulting relative frequencies equal the probabilities in your screenshot. In particular, there is one sentence that ends after "the dog" and one sentence in which "the dog" is followed by "runs". That is how the probability 0.5 = 1/(1+1) could have emerged.
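The counting-and-normalizing step is the standard maximum-likelihood estimate, p(w | u, v) = count(u, v, w) / count(u, v). Below is a minimal sketch applied to the four-sentence corpus reconstructed above; padding each sentence with two `*` start symbols and one STOP symbol is an assumed convention, and `estimate_trigram_model` is a hypothetical helper, not a library function.

```python
from collections import Counter, defaultdict

START, STOP = "*", "STOP"

# The hypothetical four-sentence corpus reconstructed in this answer.
corpus = ["the", "the", "the dog", "the dog runs"]

def estimate_trigram_model(sentences):
    """Maximum-likelihood trigram estimates: count(u, v, w) / count(u, v)."""
    trigram_counts = Counter()
    for sentence in sentences:
        # Pad with two start symbols and one STOP symbol.
        tokens = [START, START] + sentence.split() + [STOP]
        for u, v, w in zip(tokens, tokens[1:], tokens[2:]):
            trigram_counts[(u, v, w)] += 1

    # How often each two-word context (u, v) is followed by anything.
    context_counts = Counter()
    for (u, v, _), c in trigram_counts.items():
        context_counts[(u, v)] += c

    # Normalize within each context so its continuations sum to 1.
    model = defaultdict(dict)
    for (u, v, w), c in trigram_counts.items():
        model[(u, v)][w] = c / context_counts[(u, v)]
    return dict(model)

model = estimate_trigram_model(corpus)
print(model[("the", "dog")])
```

Here the context ("the", "dog") occurs twice, once followed by STOP and once by "runs", which gives 0.5 for each.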