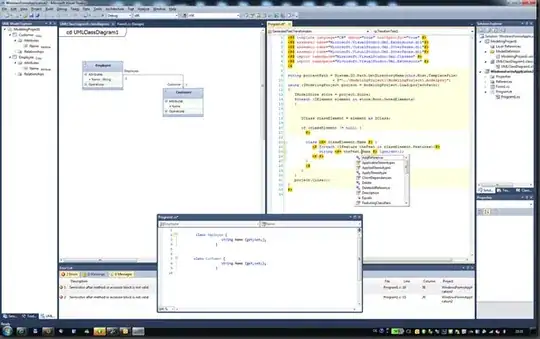

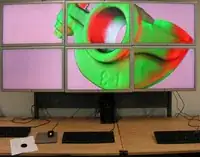

I'm having some trouble with Weka's XMeans clusterer. I've talked to a couple of other humans and we all agree that there are six clusters in the screenshot below, or at least two if you squint really hard. Either way, XMeans does not seem to agree.

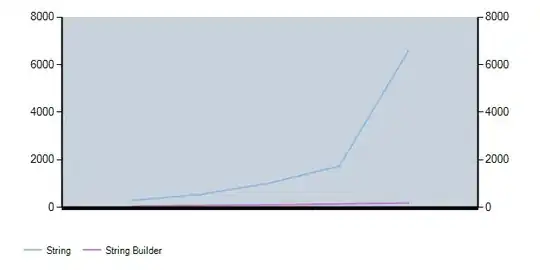

XMeans seems to systematically underestimate the number of clusters: the count it reports tracks whatever I set as the minimum. With the maximum number of clusters held at 100 (`-H 100`), here are the results I get:

| Min clusters (`-L`) | Clusters found |
|---|---|
| 1 | 1 |
| 2 | 2 |
| 3 | 3 |
| 4 | 5 |
| 5 | 6 |
| 6 | 6 |

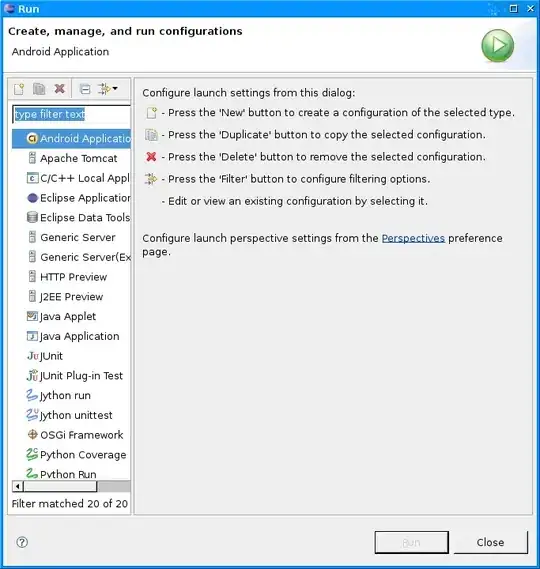

Most egregiously, with `-L 1` (and `-H 100`) only a single cluster is found. Only by raising the minimum cluster count to five do I actually see all six clusters. Cranking the improve-structure iteration limit way up (to 100,000) does not seem to have any effect either. (I've also played with other options without seeing any difference.) Here are the options that generated the screenshot above, which found a single center:

    private static final String[] XMEANS_OPTIONS = {
        "-H", "100",     // max number of clusters (default 4)
        "-L", "1",       // min number of clusters (default 2)
        "-I", "100",     // max overall iterations (default 1)
        "-M", "1000000", // max k-means iterations in the improve-parameter step (default 1000)
        "-J", "1000000", // max k-means iterations in the improve-structure step (default 1000)
    };
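To make the `-L` sweep above easy to reproduce, here is a minimal sketch that rebuilds the options array for each minimum cluster count. The class `XMeansSweep` and method `optionsFor` are hypothetical names; the block uses only the standard library, and the commented lines show where Weka's `XMeans.setOptions`, `buildClusterer`, and `numberOfClusters` would come in (assuming Weka is on the classpath and `data` is an already-loaded `Instances`). It returns a fresh array on every call because Weka's option parsing (`Utils.getOption`) blanks out the entries it consumes, so a shared `static final` array is not safe to hand to `setOptions` more than once. The `-M`/`-J` comments follow the option descriptions in Weka's XMeans documentation.

```java
/**
 * Hypothetical helper: rebuilds the XMEANS_OPTIONS array for a given
 * minimum cluster count so the -L sweep can be scripted.
 */
class XMeansSweep {

    // Returns a new array on every call, since Weka's option parsing
    // overwrites consumed entries with "" and would empty a shared array.
    static String[] optionsFor(int minClusters, int maxClusters) {
        if (minClusters < 1 || maxClusters < minClusters) {
            throw new IllegalArgumentException("need 1 <= min <= max");
        }
        return new String[] {
            "-H", Integer.toString(maxClusters), // max number of clusters
            "-L", Integer.toString(minClusters), // min number of clusters
            "-I", "100",                         // max overall iterations
            "-M", "1000000",                     // max k-means iterations, improve-parameter step
            "-J", "1000000",                     // max k-means iterations, improve-structure step
        };
    }

    public static void main(String[] args) {
        // Print the option strings for the -L 1 .. -L 6 runs in the table above.
        for (int min = 1; min <= 6; min++) {
            System.out.println(String.join(" ", optionsFor(min, 100)));
            // With Weka available, each run would look roughly like:
            //   XMeans xm = new XMeans();
            //   xm.setOptions(optionsFor(min, 100));
            //   xm.buildClusterer(data);
            //   System.out.println(xm.numberOfClusters());
        }
    }
}
```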

Obviously I'm missing something here. How do I make XMeans behave as expected?