Think about the git repository as a giant graph. Actually there is some

complex math behind it... All files/objects are somehow connected together and git's speed, flexibility and reliability is exactly based on this graph stuff.

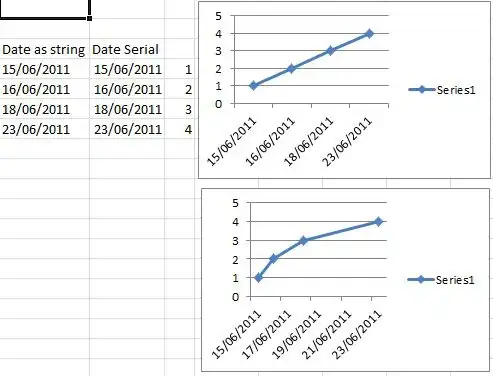

(Picture: http://web.mit.edu/6.005/www/fa15/classes/05-version-control/)

(Picture: http://web.mit.edu/6.005/www/fa15/classes/05-version-control/)

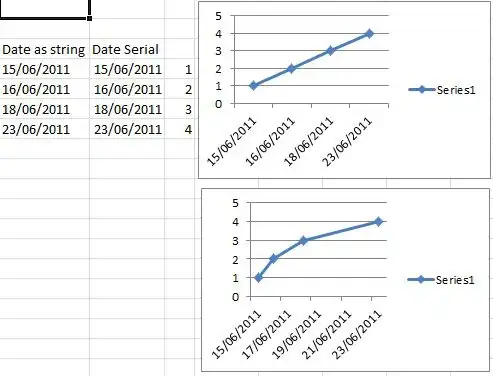

(Picture: http://evadeflow.com/wp-content/uploads/2011/01/git_object_graph_thumb.png)

(Picture: http://evadeflow.com/wp-content/uploads/2011/01/git_object_graph_thumb.png)

If you don't own the whole graph, it is quite probable you won't be able to tell the entire history, states and paths of a file, because you're missing the connections to the origin of this file. What happens, if the subdirectory did not exist in the beginnings of the repository but some files that were later moved into it existed already outside the directory? How would you track them?

Furthermore it is not possible for the (stupid) server to determine

which objects you would need, because git does not use its own server application but http servers and ssh servers. So the remote servers are only capable of delivering files but not in determing which files you would actually need.

The accepted answer in Checkout subdirectories in Git? points exactly this out:

Note that sparse checkouts still require you to download the whole repository, even though some of the files Git downloads won't end up in your working tree.

So, after git has got the whole graph, it can delete all those objects, because (contrary to the server) it can determine whether they are needed or not.

Update: To answer your question: Snapshots are all saved and referenced by hashes so yes, git's inner workings are responsible for this.