I modified some of the code in the time-lapse builder class from https://github.com/justinlevi/imagesToVideo so that it takes an array of images instead of URLs.

Everything seems to be working fine as far as taking the images and creating a video; however, I'm having some issues with the way the images are being displayed in the video.

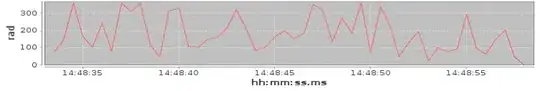

This is how the images really look and under how they look on iphone:

I suspect the issue is in the `fillPixelBufferFromImage` method:

    func fillPixelBufferFromImage(image: UIImage, pixelBuffer: CVPixelBuffer, contentMode: UIViewContentMode) {
        CVPixelBufferLockBaseAddress(pixelBuffer, 0)

        let data = CVPixelBufferGetBaseAddress(pixelBuffer)
        let rgbColorSpace = CGColorSpaceCreateDeviceRGB()
        let context = CGBitmapContextCreate(data, Int(self.outputSize.width), Int(self.outputSize.height), 8, CVPixelBufferGetBytesPerRow(pixelBuffer), rgbColorSpace, CGImageAlphaInfo.PremultipliedFirst.rawValue)

        CGContextClearRect(context, CGRectMake(0, 0, CGFloat(self.outputSize.width), CGFloat(self.outputSize.height)))

        let horizontalRatio = CGFloat(self.outputSize.width) / image.size.width
        let verticalRatio = CGFloat(self.outputSize.height) / image.size.height
        var ratio: CGFloat = 1

        // print("horizontal ratio \(horizontalRatio)")
        // print("vertical ratio \(verticalRatio)")
        // print("ratio \(ratio)")
        // print("Image Width - \(image.size.width). Image Height - \(image.size.height)")

        switch(contentMode) {
        case .ScaleAspectFill:
            ratio = max(horizontalRatio, verticalRatio)
        case .ScaleAspectFit:
            ratio = min(horizontalRatio, verticalRatio)
        default:
            ratio = min(horizontalRatio, verticalRatio)
        }

        // print("after ratio \(ratio)")

        let newSize: CGSize = CGSizeMake(image.size.width * ratio, image.size.height * ratio)

        let x = newSize.width < self.outputSize.width ? (self.outputSize.width - newSize.width) / 2 : 0
        let y = newSize.height < self.outputSize.height ? (self.outputSize.height - newSize.height) / 2 : 0

        // print("x \(x)")
        // print("y \(y)")

        CGContextDrawImage(context, CGRectMake(x, y, newSize.width, newSize.height), image.CGImage)

        CVPixelBufferUnlockBaseAddress(pixelBuffer, 0)
    }
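To check the scaling math on its own, the rectangle calculation from the method above can be pulled out into a pure function. This is only a minimal sketch: `drawRect(imageSize:outputSize:fill:)` is a hypothetical helper name that does not exist in the linked repository, the `fill` flag stands in for the `contentMode` switch, and the sizes used are made-up test values rather than the real photo dimensions.

```swift
import Foundation

// Hypothetical helper (not part of TimeLapseBuilder): returns the rectangle
// that an image of `imageSize` would be drawn into on a canvas of `outputSize`,
// using the same ratio and offset rules as fillPixelBufferFromImage.
func drawRect(imageSize: CGSize, outputSize: CGSize, fill: Bool) -> CGRect {
    let horizontalRatio = outputSize.width / imageSize.width
    let verticalRatio = outputSize.height / imageSize.height

    // Aspect fill scales by the larger ratio, aspect fit by the smaller one.
    let ratio = fill ? max(horizontalRatio, verticalRatio)
                     : min(horizontalRatio, verticalRatio)

    let newSize = CGSize(width: imageSize.width * ratio,
                         height: imageSize.height * ratio)

    // Offset only when the scaled image is smaller than the output on that axis.
    let x = newSize.width < outputSize.width ? (outputSize.width - newSize.width) / 2 : 0
    let y = newSize.height < outputSize.height ? (outputSize.height - newSize.height) / 2 : 0

    return CGRect(x: x, y: y, width: newSize.width, height: newSize.height)
}

// Made-up sizes: a portrait image drawn onto a landscape output.
let imageSize = CGSize(width: 2048, height: 4096)
let outputSize = CGSize(width: 1024, height: 512)

let fitRect = drawRect(imageSize: imageSize, outputSize: outputSize, fill: false)
let fillRect = drawRect(imageSize: imageSize, outputSize: outputSize, fill: true)

print(fitRect)   // origin (384, 0), size 256 x 512
print(fillRect)  // origin (0, 0), size 1024 x 2048
```

With these values, aspect fit centers the image horizontally, while aspect fill produces a rectangle larger than the output that is anchored at the origin rather than centered. Both rectangles keep the image's aspect ratio, so this calculation alone would not stretch a picture.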

Please advise. Thank you

EDIT: Can someone please help? I'm completely stuck on getting these images to show in the video as they looked when they were taken. Thank you for your help.