I am trying to find a relationship between two variables (pv_ratio, battery_ratio) and a third variable 'value'. Both ratios range from 0 to 5 with points every 0.0625 (81x81=6561 points) and 'value' falls within [0,1].

The csv can be found here and looks like that:

battery_ratio pv_ratio value

0 0.0000 0 1

1 0.0625 0 1

2 0.1250 0 1

3 0.1875 0 1

4 0.2500 0 1

5 0.3125 0 1

6 0.3750 0 1

7 0.4375 0 1

8 0.5000 0 1

9 0.5625 0 1

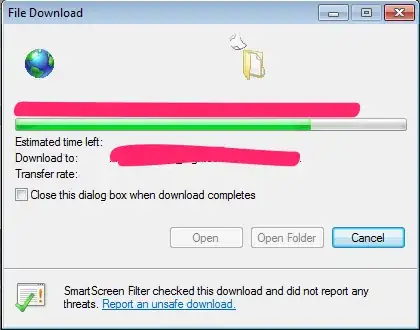

These plots give an idea of the relationships between my variables:

Here is my code to fit my curve, using sicpy.optimize.curve_fit and looking for exponential relationships. This code snippet reads the csv into a pandas df, finds optimal parameters for the f function, plots the results and gives a score to the fit.

I've been working in an iterative manner, trying many formulas for f, improving the score little by little.

from scipy.optimize import curve_fit

import pandas as pd

import numpy as np

import matplotlib.pylab as plt

%matplotlib inline

plt.rcParams['figure.figsize'] = (14.0, 8.0)

def f(X, a, b, c, d, e):

# the function I came up with after some trials, and which I'm trying to improve

bt_r = X[:,0] #battery_ratio

pv_r = X[:,1] #pv_ratio

return (1 - a * np.exp(- e * pv_r ** b)) * np.exp(- (d ** bt_r) * c * pv_r)

def fit():

#find optimal parameters and score fit

X = df[variables].values

y = df.value.values

popt, pcov = curve_fit(f, X, y)

y_real, y_fit = df['value'], f(df[variables].values, *popt)

score = np.round(np.sum(((y_fit - y_real)**2)),1)

return popt, score

def check_fit(values):

#Plot (y_fit, y) for all subsets

def plot_subset(ax, variable, r_value):

"""Scatter plot (y_fit and y) against 'variable' with the other variable set at ratio

- variable : string ['pv_ratio', 'battery_ratio']

- r_value : float

"""

# ratio stands for the second variable which is fixed

ratio = list(set(variables) - set([variable]))[0]

df_ = df.query("{} == {}".format(ratio, r_value))

# plot y and y fit

y_real, y_fit = df_['value'], f(df_[variables].values, *popt)

for y, c in zip([y_real, y_fit], ['b', 'r']):

ax.scatter(df_[variable], y, color=c, s=10, alpha=0.95)

ax.set_title('{} = {}'.format(ratio, r_value))

fig, ax = plt.subplots(nrows=2, ncols=len(values), sharex=True, sharey=True)

for icol, r_value in enumerate(values):

plot_subset(ax[0, icol], 'pv_ratio', r_value)

plot_subset(ax[1, icol], 'battery_ratio', r_value)

fig.tight_layout()

print 'Score: {}'.format(score)

df = pd.read_csv('data.csv', index_col=0)

variables = ['battery_ratio', 'pv_ratio']

popt, score = fit()

check_fit([0,3,5]) #plot y_real and y_fit for these ratios

The code above yields the following picture (blue: real, red: fit) and gives a score to the fit.

The best score (=sum((y_real - y_fit)²/len(y))) I can get is 9.3e-4, which is still not very good in practice, especially on the ramp-up phase.

I'm now stuck at a point where the try-and-repeat process shows its limits. How should I work to design faster and more efficiently my fitting function? Can I get a better score than 6.1?