I am using Elasticsearch (through the Bonsai add-on) in one of my Ruby on Rails projects. So far, things had been going very smoothly. But as soon as we launched the application to end users and traffic started coming in, we noticed that a single Elasticsearch query takes 5-7 seconds to respond (a really bad experience for us), even though we have eight 2X web dynos in place.

So we decided to upgrade the Bonsai add-on to Bonsai 10 and also added the New Relic add-on (to keep an eye on how long a single query takes to respond).

Below are our environment settings:

- Ruby: 2.2.4
- Rails: 4.2.0
- elasticsearch: 1.0.15
- elasticsearch-model: 0.1.8

We then imported the data into Elasticsearch again, and here is our Elasticsearch cluster health:

    pry(main)> Article.__elasticsearch__.client.cluster.health
    => {"cluster_name"=>"elasticsearch",
     "status"=>"green",
     "timed_out"=>false,
     "number_of_nodes"=>3,
     "number_of_data_nodes"=>3,
     "active_primary_shards"=>1,
     "active_shards"=>2,
     "relocating_shards"=>0,
     "initializing_shards"=>0,
     "unassigned_shards"=>0,
     "delayed_unassigned_shards"=>0,
     "number_of_pending_tasks"=>0,
     "number_of_in_flight_fetch"=>0}

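To make the shard figures in this output easier to read, here is a minimal sketch that derives the replica count from the same hash. The `shard_summary` helper is a hypothetical name introduced only for illustration, and the hash literal simply copies the relevant keys from the output above.

```ruby
# Hypothetical helper (not part of elasticsearch-ruby): summarizes the shard
# counts reported by the cluster health API.
def shard_summary(health)
  primaries = health.fetch("active_primary_shards")
  total     = health.fetch("active_shards")
  {
    primaries: primaries,
    # active_shards counts primaries plus replica copies
    replicas: total - primaries,
    unassigned: health.fetch("unassigned_shards")
  }
end

# Values copied from the cluster health output above.
health = {
  "status" => "green",
  "number_of_data_nodes" => 3,
  "active_primary_shards" => 1,
  "active_shards" => 2,
  "unassigned_shards" => 0
}

summary = shard_summary(health)
puts summary.inspect
```

Read this way, the index currently has a single primary shard with one replica copy, so only part of the plan's shard allowance is in use.
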
Below is New Relic's data for the ES calls, which gives us a big reason to worry.

My model, article.rb, is below:

    class Article < ActiveRecord::Base
      include Elasticsearch::Model

      after_commit on: [:create] do
        begin
          __elasticsearch__.index_document
        rescue Exception => ex
          logger.error "ElasticSearch after_commit error on create: #{ex.message}"
        end
      end

      after_commit on: [:update] do
        begin
          Elasticsearch::Model.client.exists?(index: 'articles', type: 'article', id: self.id) ? __elasticsearch__.update_document : __elasticsearch__.index_document
        rescue Exception => ex
          logger.error "ElasticSearch after_commit error on update: #{ex.message}"
        end
      end

      after_commit on: [:destroy] do
        begin
          __elasticsearch__.delete_document
        rescue Exception => ex
          logger.error "ElasticSearch after_commit error on delete: #{ex.message}"
        end
      end

      def as_indexed_json(options={})
        as_json({
          only: [ :id, :article_number, :user_id, :article_type, :comments, :posts, :replies, :status, :fb_share, :google_share, :author, :contributor_id, :created_at, :updated_at ],
          include: {
            posts: { only: [ :id, :article_id, :post ] },
          }
        })
      end
    end

Now, if I look at the Bonsai 10 plan on Heroku, it gives me 20 shards, but according to the current cluster status only 1 active primary shard and 2 active shards are in use. A few questions came to mind:

- Will increasing the number of shards to 20 help here?

- It is possible to cache the ES queries. Would you suggest doing so, and what are the pros and cons?
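To make the caching question concrete, here is a minimal sketch of a time-based cache around a search call. Everything in it is an assumption for illustration: the `QueryCache` class, the 60-second TTL, the cache key, and the block that stands in for the real Elasticsearch request. In a Rails app the same idea would more likely be written with `Rails.cache.fetch` and its `expires_in` option.

```ruby
# Hypothetical in-memory cache with a time-to-live. It is not thread-safe and
# is not shared across dynos, so it only illustrates the idea.
class QueryCache
  def initialize(ttl_seconds, clock = -> { Time.now.to_f })
    @ttl = ttl_seconds
    @clock = clock
    @store = {}
  end

  # Returns the cached value for +key+ if it is still fresh; otherwise runs
  # the block, stores its result, and returns it.
  def fetch(key)
    entry = @store[key]
    now = @clock.call
    return entry[:value] if entry && now - entry[:stored_at] < @ttl

    value = yield
    @store[key] = { value: value, stored_at: now }
    value
  end
end

calls = 0
cache = QueryCache.new(60)

# The block stands in for a real search such as Article.search(...).
2.times do
  cache.fetch("status:published") do
    calls += 1
    { "total" => 42 } # made-up response used only for this sketch
  end
end

puts calls
```

The trade-off this makes visible: repeated identical queries skip the round trip, but results can be stale for up to the TTL, and every distinct query still pays the full cost on its first call.
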

Please help me find ways to reduce the response time and make ES work more efficiently.

UPDATE

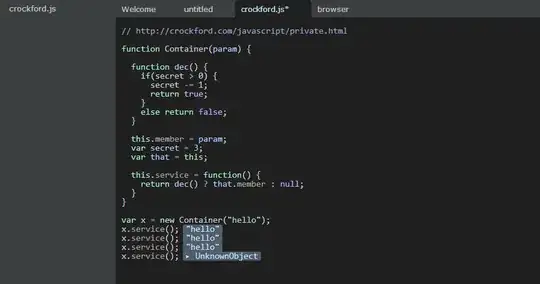

Here's a small code snippet, https://jsfiddle.net/puneetpandey/wpbohqrh/2/, that I created (as a reference) to show exactly why I need so many calls to Elasticsearch.

In the example above, I am showing a few counts (next to each checkbox element). To show those counts, I need to fetch the numbers, which I get by hitting ES.
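Counts like these are often fetched in a single round trip with one `terms` aggregation per checkbox group. The sketch below only builds such a request body and parses a response of the matching shape. The choice of `article_type` and `status` as the facet fields is an assumption (they come from `as_indexed_json`, but the fiddle may use different ones), `counts_request` and `bucket_counts` are hypothetical helper names, and the sample response is hand-written with invented numbers.

```ruby
# Builds one search body that asks for per-value counts on several fields.
# "size: 0" skips the hits themselves, since only the counts are needed.
def counts_request(fields)
  aggs = fields.each_with_object({}) do |field, acc|
    acc["#{field}_counts"] = { terms: { field: field.to_s } }
  end
  { size: 0, query: { match_all: {} }, aggs: aggs }
end

# Turns the "aggregations" part of a response into
# { field => { value => count } }.
def bucket_counts(response, fields)
  fields.each_with_object({}) do |field, acc|
    buckets = response["aggregations"]["#{field}_counts"]["buckets"]
    acc[field] = buckets.each_with_object({}) { |b, h| h[b["key"]] = b["doc_count"] }
  end
end

fields = [:article_type, :status] # assumed facet fields
body = counts_request(fields)
# With elasticsearch-model, this body could be passed as Article.search(body).

# Hand-written stand-in for a response; the keys and numbers are invented.
sample_response = {
  "aggregations" => {
    "article_type_counts" => { "buckets" => [{ "key" => "news", "doc_count" => 3 }] },
    "status_counts"       => { "buckets" => [{ "key" => "draft", "doc_count" => 1 }] }
  }
}

puts bucket_counts(sample_response, fields).inspect
```

Whether this fits depends on how the counts in the fiddle are defined; if each checkbox needs its own filter combination, a single aggregation request may not cover all of them.
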

OK, so after reading the comments and finding a good article here, "How to config elasticsearch cluster on one server to get the best performace on search", I think I've got enough to restructure around.

Best,

Puneet