It's not the best, surprisingly to me it's not even particularily good. The main problem is the bad distribution, which is not really the fault of boost::hash_combine in itself, but in conjunction with a badly distributing hash like std::hash which is most commonly implemented with the identity function.

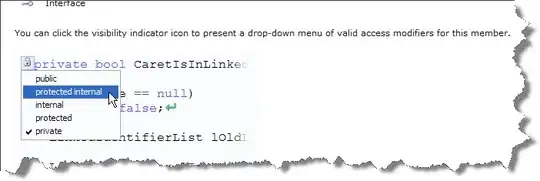

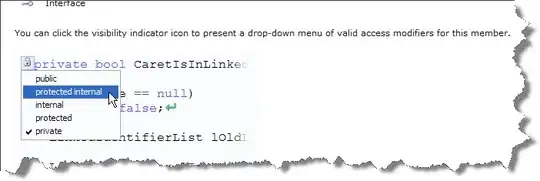

Figure 2: The effect of a single bit change in one of two random 32 bit numbers on the result of boost::hash_combine . On the x-axis are the input bits (two times 32, first the new hash then the old seed), on the y-axis are the output bits. The color indicate the degree of dependence.

Figure 2: The effect of a single bit change in one of two random 32 bit numbers on the result of boost::hash_combine . On the x-axis are the input bits (two times 32, first the new hash then the old seed), on the y-axis are the output bits. The color indicate the degree of dependence.

To demonstrate how bad things can become these are the collisions for points (x,y) on a 32x32 grid when using hash_combine as intended, and with std::hash:

# hash_combine(hash_combine(0,x₀),y₀)=hash_combine(hash_combine(0,x₁),y₁)

# hash x₀ y₀ x₁ y₁

3449074105 6 30 8 15

3449074104 6 31 8 16

3449074107 6 28 8 17

3449074106 6 29 8 18

3449074109 6 26 8 19

3449074108 6 27 8 20

3449074111 6 24 8 21

3449074110 6 25 8 22

For a well distributed hash there should be none, statistically. One could make a hash_combine that cascades more (for example by using multiple more spread out xor-shifts) and preserves the entropy better (for example using bit-rotations instead of bit-shifts). But really what you should do is use a good hash function in the first place, then after that a simple xor is sufficient to combine the seed and the hash, if the hash encodes the position in the sequence. For ease of implementation the following hash does not encode the position. To make hash_combine non commutative any non-commutative and bijective operation is sufficient. I chose an asymmetric binary rotation because it is cheap.

#include <limits>

#include <cstdint>

template<typename T>

T xorshift(const T& n,int i){

return n^(n>>i);

}

// a hash function with another name as to not confuse with std::hash

uint32_t distribute(const uint32_t& n){

uint32_t p = 0x55555555ul; // pattern of alternating 0 and 1

uint32_t c = 3423571495ul; // random uneven integer constant;

return c*xorshift(p*xorshift(n,16),16);

}

// a hash function with another name as to not confuse with std::hash

uint64_t distribute(const uint64_t& n){

uint64_t p = 0x5555555555555555ull; // pattern of alternating 0 and 1

uint64_t c = 17316035218449499591ull;// random uneven integer constant;

return c*xorshift(p*xorshift(n,32),32);

}

// if c++20 rotl is not available:

template <typename T,typename S>

typename std::enable_if<std::is_unsigned<T>::value,T>::type

constexpr rotl(const T n, const S i){

const T m = (std::numeric_limits<T>::digits-1);

const T c = i&m;

return (n<<c)|(n>>((T(0)-c)&m)); // this is usually recognized by the compiler to mean rotation, also c++20 now gives us rotl directly

}

// call this function with the old seed and the new key to be hashed and combined into the new seed value, respectively the final hash

template <class T>

inline size_t hash_combine(std::size_t& seed, const T& v)

{

return rotl(seed,std::numeric_limits<size_t>::digits/3) ^ distribute(std::hash<T>{}(v));

}

The seed is rotated once before combining it to make the order in which the hash was computed relevant.

The hash_combine from boost needs two operations less, and more importantly no multiplications, in fact it's about 5x faster, but at about 2 cyles per hash on my machine the proposed solution is still very fast and pays off quickly when used for a hash table. There are 118 collisions on a 1024x1024 grid (vs. 982017 for boosts hash_combine + std::hash), about as many as expected for a well distributed hash function and that is all we can ask for.

Now even when used in conjunction with a good hash function boost::hash_combine is not ideal. If all entropy is in the seed at some point some of it will get lost. There are 2948667289 distinct results of boost::hash_combine(x,0), but there should be 4294967296 .

In conclusion, they tried to create a hash function that does both, combining and cascading, and fast, but ended up with something that does both just good enough to not be recognised as bad immediately. But fast it is.